Extending Oracle Enterprise Manager (EM) monitoring. 29 January 2012

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, SQL - PL/SQL.Tags: Grid Control, Oracle 11.2, SQL, SQL - PL/SQL

comments closed

I always found Oracle Enterprise Manager (EM) to be an interesting tool for different reasons. The only thing I missed was an easy way to create my own alerts.

It is very simple to create a KSH, Perl, etc script to do some customised monitoring and notify you by email, Nagios, NetCool, etc.

By integrating your scripts with OEM, you will have an easy way to enhance your monitoring and still have notification by email, SNMP traps, etc. as you would currently have if your company is using OEM for monitoring your systems.

The problem:

Develop an easy way to integrate your monitoring scripts with OEM.

The solution:

I decided to use an Oracle type and an Oracle function to accomplish this goal. Using the steps described below, we can monitor pretty much whatever aspect of the database providing you can put the logic into a function.

As example, I had added the steps to create two new User-Defined SQL Metrics, as Oracle calls them:

1.- Long Running Sessions (LRS).

2.- Tablespace Monitoring.

The reason to have my own TBS monitoring is to enhance the existing as it has “hard-coded” thresholds. I might have tablespaces in your database which are 6TBS in size and other with only 2Gb, so raising an alert at 95% for both of them is, in my opinion, not adequate.

You can find more about the query I developed here.

The steps to create a script to monitor long running sessions (LRS) are:

1.- create types

CREATE OR REPLACE TYPE lrs_obj as OBJECT ( user_name VARCHAR2(256), error_message varchar(2000)); / CREATE OR REPLACE TYPE lrs_array AS TABLE OF lrs_obj; /

2.- create function.

CREATE OR REPLACE FUNCTION lrs RETURN lrs_array IS long_running_data lrs_array := lrs_array(); ln_seconds_active number := 300; BEGIN SELECT lrs_obj(username||' Sec: '||sec_running, ', Inst_id: '||inst_id||', SID: '||sid||', Serial: '|| serial||', Logon: '||session_logon_time||', sql_id: '||sql_id) BULK COLLECT INTO long_running_data FROM (SELECT /*+ FIRST_ROWS USE_NL(S,SQ,P) */ s.inst_id inst_id, s.sid sid, s.serial# serial, s.last_call_et sec_running, NVL(s.username, '(oracle)') AS username, to_char(s.logon_time, 'DD-MM-YYYY HH24:MI:SS') session_logon_time, s.machine, NVL(s.osuser, 'n/a') AS osuser, NVL(s.program, 'n/a') AS program, s.event, s.seconds_in_wait, s.sql_id sql_id, sq.sql_text from gv$session s, gv$sqlarea sq where s.sql_id = sq.sql_id and s.inst_id = sq.inst_id and s.status = 'ACTIVE' and s.last_call_et > ln_seconds_active and s.paddr not in ( select paddr from gv$bgprocess where paddr != '00' ) and s.type != 'BACKGROUND' and s.username not in ( 'SYSTEM', 'SYS' ) AND s.event != 'SQL*Net break/reset to client' ) CUSTOMER_QUERY; RETURN long_running_data; END lrs; /

3.- Grant privileges to the users will be executing monitoring scripts:

grant execute on lrs_obj to public; grant execute on lrs_array to public; grant execute on lrs to public;

4.- create synonyms

Create public synonym lrs_obj for lrs_obj; Create public synonym lrs_array for lrs_array; Create public synonym lrs for lrs;

5.- Query to monitor

SELECT user_name, error_message FROM TABLE(CAST(lrs as lrs_array));

Once we are satisfied with the thresholds (300 seconds on the script), we are ready to add it to EM.

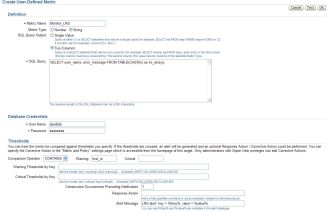

1.- Navigate to User-Defined SQL Metrics (you need to navigate to your database and you will find the link at bottom).

2.- Create new User-Defined SQL Metric and fill the gaps (I have attached some values for reference). The most important thing right now is to make sure metric_Type = String, Sql Query Output = two columns, comparison Operator = CONTAINS and warning has a value returned by the query (i did decide to go for Inst_id).

The only thing left now is to add this monitoring to your templates and to your rules so notifications are being sent.

Once all has been configure, you should start seeing alerts like this:

Target Name=lab01 Target type=Cluster Database Host=mylab Occurred At=Jan 22, 2012 14:35:47 PM GMT Message=LRS alert: key = DAVIDLAB Sec: 530, value = , Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r Metric=StrValue Metric value=, Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r Metric ID=lrs Key=DAVIDLAB Sec: 530 Severity=Warning Acknowledged=No Notification Rule Name=david alerts Notification Rule Owner=DAVIDLAB

For Tablespace monitoring the steps will be the same as described above:

1.- create types

CREATE OR REPLACE TYPE tbs_obj as OBJECT ( tablespace_name VARCHAR2(256), error_message varchar(2000)); / CREATE OR REPLACE TYPE tbs_array AS TABLE OF tbs_obj; /

2.- create function.

CREATE OR REPLACE FUNCTION calc_tbs_free_mb RETURN tbs_array IS

tablespace_data tbs_array := tbs_array();

BEGIN

SELECT tbs_obj(tablespace_name, alert||', Used_MB: '||space_used_mb||', PCT_Free: '||pct_free||', FreeMB: '|| free_space_mb) BULK COLLECT

INTO tablespace_data

FROM

(SELECT (CASE

WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'

WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'

ELSE 'N/A'

END)

alert,

tablespace_name,

space_used_mb,

ROUND (free_space/power(1024,3), 2) free_gb,

free_space_mb,

pct_free,

ROUND (extend_bytes/power(1024,3), 2) extend_gb,

free_extents,

max_size_gb,

maxextent

FROM (SELECT c.tablespace_name,

NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,

NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,

(CASE

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300

ELSE 340

END) free_extents,

a.extend_bytes, b.free_space,

ROUND (maxbytes /power(1024,3), 2) max_size_gb,

nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,

c.allocation_type,

GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,

64 maxextent

FROM ( SELECT tablespace_name,

SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,

SUM (BYTES) AS BYTES,

SUM (maxbytes) maxbytes

FROM DBA_DATA_FILES

GROUP BY tablespace_name) A,

( SELECT tablespace_name,

SUM (BYTES) AS free_space,

MAX (BYTES) largest

FROM DBA_FREE_SPACE

GROUP BY tablespace_name) b,

dba_tablespaces c

WHERE c.contents not in ('UNDO','TEMPORARY') and

b.tablespace_name(+) = c.tablespace_name

AND a.tablespace_name = c.tablespace_name

)

) CUSTOMER_QUERY;

RETURN tablespace_data;

END calc_tbs_free_mb;

/

3.- Grant privileges to the users will be executing monitoring scripts:

grant execute on tbs_obj to public; grant execute on tbs_array to public; grant execute on calc_tbs_free_mb to public;

4.- create synonyms

Create public synonym tbs_obj for tbs_obj; Create public synonym tbs_array for tbs_array; Create public synonym calc_tbs_free_mb for calc_tbs_free_mb;

5.- Query to monitor

SELECT * FROM TABLE(CAST(calc_tbs_free_mb as tbs_array));

Please, remember to use comparison operator = CONTAINS, warning = WARNING and critical=CRITICAL

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Enterprise Manager Grid Control does not send emails 20 October 2010

Posted by David Alejo Marcos in Enterprise Manager, Grid Control, Oracle 11.2.Tags: Enterprise Manager, Grid Control, Oracle 11.2

comments closed

Have you ever wondered why EM Grid Control is not sending you email notifications for your alerts?

The problem:

I have been working with EM Grid Control for some time. We have some rules and templates created to monitor our databases and we normally receive emails when a Warning or Critical threshold have been crossed.

We did upgrade our OEM repository to 11g 1 month ago and I noticed we stopped receiving emails. I did review the alerts, tested emails and all was working fine, we just did not receive emails from our alerts.

The solution:

Quite simple, but it took me some time to find it.

As part of the upgrade, the parameter JOB_QUEUE_PROCESSES was set to 0.

SQL> show parameter job_queue_processes

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

job_queue_processes integer 0

as soon as I changed to a different number (please, bear in mind 10 is the minimum recommended by Oracle)

SQL> alter system set job_queue_processes=15 scope=memory;

I started to receive emails.

Notes:

1.- I have not tested SNMP Traps to feed our Nagios as it is still in development, but I will assume the fix is the same.

2.- Remember to change the parameter on your spfile.ora or init.ora file.

3.- As soon as you change the parameter you will start receiving all alerts (including old), I did receive over 1300 emails in 20 minutes, so you might consider doing the change on Friday by EOD and clear your inbox on Monday.

As always, comments are welcome.

High CPU caused by SYS (E000/EMNC background process) 15 July 2010

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, Tuning.Tags: Grid Control, Oracle 11.2, Tuning

comments closed

One thing I have learned about being a production DBA is that our SLAs to fix problems are pretty much non-existent and troubleshooting skills are a “good thing to have”.

Today we had a problem on one of our most critical systems. This is a real-time database used by many clients and poor performance means our clients misses their own SLAs.

The problem:

Several calls and many alerts regarding high CPU on the production box indicated something was not running as expected on production. I had a quick look and it did not look healthy:

Note.- the lower load was after I solved the problem.

I did also have a look to the sys session (E000) to see the load and gather as much information as I could:

Note.- the decrease on the workload is after the problem was fixed.

Note.- the decrease on the workload is after the problem was fixed.

EMON is used by streams, bearing in mind we do not use streams technology as a such, the only explanation was Oracle AQ.

Another clue to the solution was the ASH report, indicating Oracle was spending too much time on the following event “Wait for EMON to process ntfns”:

The solution:

The EMON process was spinning, consuming resources needed for other processes. The only solution we have at this point in time (at least until Oracle releases the new PSU) is to kill the process.

The background process responsible is called EMNC, and this process spawns other process labelled E000,…

First of all, we need the PID of the process running on the server

[oracle@xxxxxx ~]$ ps -ef | grep -i emn

oracle 5952 1 0 Jul03 ? 00:00:00 ora_emnc_MFE

oracle 6667 5963 0 08:03 pts/8 00:00:00 grep -i emn

once we have the process, we proceed to kill it:

[oracle@xxxxx ~]$ kill -9 5952

and we check it re-spawns:

[oracle@xxxxxxx ~]$ ps -ef | grep -i emn

oracle 6706 5963 0 08:04 pts/8 00:00:00 grep -i emn

[oracle@xxxxx ~]$ ps -ef | grep -i emn

oracle 6739 1 2 08:04 ? 00:00:00 ora_emnc_MFE

oracle 6955 5963 0 08:04 pts/8 00:00:00 grep -i emn

Once the process was killed the load on the database decreased dramatically and our alerts cleared.

Note.- Sometimes killing emnc process is not enough and spawned process will also need to be terminated:

The rule of thumb is to check emnc process has restarted, if after 1 min it has not, the proceed to terminal e00? processes:

[oracle@ellison ~]$ ps -ef | grep -i e00

oracle 23537 1 7 07:34 ? 00:00:00 ora_e000_MFE

oracle 23539 1 7 07:34 ? 00:00:00 ora_e001_MFE

oracle 23541 1 32 07:34 ? 00:00:00 ora_e002_MFE

oracle 23543 1 13 07:34 ? 00:00:00 ora_e003_MFE

oracle 23545 1 54 07:34 ? 00:00:00 ora_e004_MFE

oracle 23547 5963 0 07:34 pts/8 00:00:00 grep -i e00

[oracle@xxxxxxx ~]$ kill -9 23537 23539 23541 23543 23545

as always, comments are welcome.

/usr/bin/diff: /etc/localtime: No such file or directory when executing emctl 10 June 2010

Posted by David Alejo Marcos in Enterprise Manager, Grid Control.Tags: emctl, Enterprise Manager, Grid Control

comments closed

I had to deploy a new agent on a DR server. The deployment went smooth, the agent registered with the repository.

I wanted to check we were uploading data from the target server as it normally takes some time for the repository to show information on the Grid Control webpage and forcing an upload normally helps to speed up when I had close to 60 lines showing:

/usr/bin/diff: /etc/localtime: No such file or directory

The problem:

I tried to check the status of the agent and to upload data using emctl:

– emctl status agent

– emctl upload agent.

The output showed it was fine, the only “annoying” thing was close to 60 lines of, to some extend, unrelated errors:

[oracle@xxxx ~]$ emctl status agent

/usr/bin/diff: /etc/localtime: No such file or directory

.......

/usr/bin/diff: /etc/localtime: No such file or directory

/usr/bin/diff: /etc/localtime: No such file or directory

Oracle Enterprise Manager 10g Release 5 Grid Control 10.2.0.5.0.

Copyright (c) 1996, 2009 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 10.2.0.5.0

OMS Version : 10.2.0.5.0

Protocol Version : 10.2.0.5.0

Agent Home : /opt/oracle/product/10.2.0/agent10g

Agent binaries : /opt/oracle/product/10.2.0/agent10g

Agent Process ID : 26229

Parent Process ID : 26203

Agent URL : https://xxxxx:xx/emd/main/

Repository URL : https://xxxxx:xx/em/upload

Started at : 2010-06-05 00:44:52

Started by user : oracle

Last Reload : 2010-06-05 00:50:40

Last successful upload : 2010-06-10 02:21:31

Total Megabytes of XML files uploaded so far : 82.70

Number of XML files pending upload : 1

Size of XML files pending upload(MB) : 0.00

Available disk space on upload filesystem : 50.90%

Last successful heartbeat to OMS : 2010-06-10 02:21:22

---------------------------------------------------------------

Agent is Running and Ready

The solution:

After a bit of investigation I found out that the problem is relating to a missing file (or link). I talked with different SA’s and some of them told me it was better to have a soft link, others to have a copy of the file.

I opted for a copy of the file.

The missing file is /etc/localtime, this file is a copy (of soft link) to the file representing the local timezone of the machine.

[oracle@xxxxxx ~]$ ls -la /etc/localtime

/etc/localtime: No such file or directory

I asked an SA to copy the file corresponding to our timezone (UTC) /usr/share/zoneinfo/UTC with the right privileges and the “error” was gone:

[oracle@xxxxxx ~]$ ls -la /etc/localtime

-rw-r--r-- 1 root root 118 Apr 17 11:49 /etc/localtime

[oracle@xxxxx ~]$ emctl status agent

Oracle Enterprise Manager 10g Release 5 Grid Control 10.2.0.5.0.

Copyright (c) 1996, 2009 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 10.2.0.5.0

OMS Version : 10.2.0.5.0

Protocol Version : 10.2.0.5.0

Agent Home : /opt/oracle/product/10.2.0/agent10g

Agent binaries : /opt/oracle/product/10.2.0/agent10g

Agent Process ID : 26229

Parent Process ID : 26203

Agent URL : https://xxxxxxxxx:xx/emd/main/

Repository URL : https://xxxxxxxxxxx:xx/em/upload

Started at : 2010-06-05 00:44:52

Started by user : oracle

Last Reload : 2010-06-05 00:50:40

Last successful upload : 2010-06-10 03:03:46

Total Megabytes of XML files uploaded so far : 83.17

Number of XML files pending upload : 0

Size of XML files pending upload(MB) : 0.00

Available disk space on upload filesystem : 50.90%

Last successful heartbeat to OMS : 2010-06-10 03:03:28

---------------------------------------------------------------

Agent is Running and Ready

As always, comments are welcome.

change archivelog destination using Dataguard broker 20 May 2010

Posted by David Alejo Marcos in Dataguard Broker, Grid Control, Standby.Tags: Dataguar broker, Standby

comments closed

We have recently taken over some systems from a sister company and I have been working on them.

One of those systems is using dataguard with dataguard broker. It is a very interesting configuration as the primary and one of the standby database are on the same country while the second standby is in a different country.

I am used to work without the broker, so simple task as disable recovering manager or the archivelog destination because something like a “mini challege”.

In this post I will explain who to change the archivelog destination on primary and standby databases using DGMGRL.

The problem:

We have tree standby databases, Ellison (primary), Larry (physical standby) and Veltman (physical standby). We want to change the archivelog destination from /u03/oradata/arch to /u07/oradata/arch on all three environments.

Databases are being monitored using DataGuard Broker.

The solution:

if we decide to change the property StandbyArchiveLocation on the broker using DGMGRL. If the value of the string on StandbyArchiveLocation is different from log_archive_dest_1, DGMgRL will update the value of log_archive_dest_2.

With the above in mind, we execute the following using SQLPLUS on all three databases:

SQL> ALTER SYSTEM SET log_archive_dest_1='location="/u07/oradata/arch", valid_for=(ONLINE_LOGFILE,ALL_ROLES)' scope=both sid='*';

Once we have changed the parameter on all three database, we are ready to proceed with the next step.

Now we have to change the property on DGMGRL. We connect to the server running the primary database and execute:

[oracle@ellison ~]$ dgmgrl

DGMGRL for Linux: Version 11.1.0.7.0 - 64bit Production

Copyright (c) 2000, 2005, Oracle. All rights reserved.

Welcome to DGMGRL, type "help" for information.

DGMGRL> connect /

Connected.

DGMGRL> show database larry standbyarchivelocation

StandbyArchiveLocation = '/u03/oradata/arch'

DGMGRL> show database ellison standbyarchivelocation

StandbyArchiveLocation = '/u03/oradata/arch'

Now that we have checked the current value, we execute the command to ammend it:

DGMGRL> edit database larry set property StandbyArchiveLocation = '/u07/oradata/arch';

DGMGRL> edit database ellison set property StandbyArchiveLocation = '/u07/oradata/arch';

And we check again to verify it:

DGMGRL> show database larry standbyarchivelocation

StandbyArchiveLocation = '/u07/oradata/arch'

DGMGRL> show database ellison standbyarchivelocation

StandbyArchiveLocation = '/u07/oradata/arch'

It is recommended to disable and enable the configuration to enforce the new parameters:

DGMGRL> disable configuration;

Disabled.

DGMGRL> enable configuration;

Enabled.

and to check alert.log files on all 3 servers.

I normally execute a couple of “alter system switch logfile;” on primary and check that the archivelogs are being applied.

Note:

Our standby databases are running in open read only mode. These changes did to work until i disabled the managed recovery process, performed a shutdown immediate and opened them for read only again. Not sure if there is a bug, it is recommended to stop the standby databases or I was just unlucky.

As always, comments are welcome.

ORA-06502: character string buffer too small while re/creating dbconsole 18 December 2009

Posted by David Alejo Marcos in Enterprise Manager, Grid Control, SQL - PL/SQL.Tags: Enterprise Manager, Grid Control, SQL - PL/SQL

comments closed

wow,

we are getting close to the end of this year. This may be the last post for 2009, we will have to think about next year…

The problem:

OK, the last thing we faced here was the following error while re-creating the dbconsole using the standard emca -config dbcontrol db -repos recreate command:

CONFIG: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

oracle.sysman.assistants.util.sqlEngine.SQLFatalErrorException: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

followed for some nasty Java alerts and:

SEVERE: Error creating the repository

….

oracle.sysman.emcp.exception.EMConfigException: Error creating the repository

The solution:

Well, the first thing to check is that you are on the right ORACLE_HOME, this is not going to solve the problem, but it will help us to modify the script on the right ORACLE_HOME the first time.

The problem is located on a variable used to store the name of the host.

Normally this variable is defined for 32 characters. This is normally right for most systems, but we had a problem with one of ours as the domain of the servers plus the name of the database had a length of 33 characters.

How can we check the length of our host_name?, quite simple:

SYS@XXXXXXX> col host_name for a40

SYS@databee> select host_name, length(host_name) length from v$instance;

HOST_NAME LENGTH

————————————————————————– ———-

hhhhhhhhhhhhhhhhh.dddddd.dddddddd 33

Elapsed: 00:00:00.09

I had to change the names for security reasons.

Ok, the problem is down to a single character, the length is 33 characters and the variable has been defined as:

l_host_name VARCHAR2(32);

so it can only store 32.

the fix is quite simple.

Steps:

1.- Check you are on the right ORACLE_HOME

echo $ORACLE_HOME

2.- cd $ORACLE_HOME/sysman/admin/emdrep/sql/core/latest/self_monitor

3.- cp self_monitor_post_creation.sql self_monitor_post_creation.sql_bk

4.- Edit self_monitor_post_creation.sql and replace

l_host_name VARCHAR2(32);

for a length that can store your host_name

l_host_name VARCHAR2(35);

NOTE.- there are two places where this variable is defined on the script, remember to modify the definition on both places.

Once the script has been changed, you can rerun the command to re/create the dbconsole:

emca -config dbcontrol db -repos recreate

Perfect.

As always, comments are welcome.

Merry Xmas.

You must be logged in to post a comment.