Enterprise Manager Grid Control does not send emails 20 October 2010

Posted by David Alejo Marcos in Enterprise Manager, Grid Control, Oracle 11.2.Tags: Enterprise Manager, Grid Control, Oracle 11.2

comments closed

Have you ever wondered why EM Grid Control is not sending you email notifications for your alerts?

The problem:

I have been working with EM Grid Control for some time. We have some rules and templates created to monitor our databases and we normally receive emails when a Warning or Critical threshold have been crossed.

We did upgrade our OEM repository to 11g 1 month ago and I noticed we stopped receiving emails. I did review the alerts, tested emails and all was working fine, we just did not receive emails from our alerts.

The solution:

Quite simple, but it took me some time to find it.

As part of the upgrade, the parameter JOB_QUEUE_PROCESSES was set to 0.

SQL> show parameter job_queue_processes

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

job_queue_processes integer 0

as soon as I changed to a different number (please, bear in mind 10 is the minimum recommended by Oracle)

SQL> alter system set job_queue_processes=15 scope=memory;

I started to receive emails.

Notes:

1.- I have not tested SNMP Traps to feed our Nagios as it is still in development, but I will assume the fix is the same.

2.- Remember to change the parameter on your spfile.ora or init.ora file.

3.- As soon as you change the parameter you will start receiving all alerts (including old), I did receive over 1300 emails in 20 minutes, so you might consider doing the change on Friday by EOD and clear your inbox on Monday.

As always, comments are welcome.

/usr/bin/diff: /etc/localtime: No such file or directory when executing emctl 10 June 2010

Posted by David Alejo Marcos in Enterprise Manager, Grid Control.Tags: emctl, Enterprise Manager, Grid Control

comments closed

I had to deploy a new agent on a DR server. The deployment went smooth, the agent registered with the repository.

I wanted to check we were uploading data from the target server as it normally takes some time for the repository to show information on the Grid Control webpage and forcing an upload normally helps to speed up when I had close to 60 lines showing:

/usr/bin/diff: /etc/localtime: No such file or directory

The problem:

I tried to check the status of the agent and to upload data using emctl:

– emctl status agent

– emctl upload agent.

The output showed it was fine, the only “annoying” thing was close to 60 lines of, to some extend, unrelated errors:

[oracle@xxxx ~]$ emctl status agent

/usr/bin/diff: /etc/localtime: No such file or directory

.......

/usr/bin/diff: /etc/localtime: No such file or directory

/usr/bin/diff: /etc/localtime: No such file or directory

Oracle Enterprise Manager 10g Release 5 Grid Control 10.2.0.5.0.

Copyright (c) 1996, 2009 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 10.2.0.5.0

OMS Version : 10.2.0.5.0

Protocol Version : 10.2.0.5.0

Agent Home : /opt/oracle/product/10.2.0/agent10g

Agent binaries : /opt/oracle/product/10.2.0/agent10g

Agent Process ID : 26229

Parent Process ID : 26203

Agent URL : https://xxxxx:xx/emd/main/

Repository URL : https://xxxxx:xx/em/upload

Started at : 2010-06-05 00:44:52

Started by user : oracle

Last Reload : 2010-06-05 00:50:40

Last successful upload : 2010-06-10 02:21:31

Total Megabytes of XML files uploaded so far : 82.70

Number of XML files pending upload : 1

Size of XML files pending upload(MB) : 0.00

Available disk space on upload filesystem : 50.90%

Last successful heartbeat to OMS : 2010-06-10 02:21:22

---------------------------------------------------------------

Agent is Running and Ready

The solution:

After a bit of investigation I found out that the problem is relating to a missing file (or link). I talked with different SA’s and some of them told me it was better to have a soft link, others to have a copy of the file.

I opted for a copy of the file.

The missing file is /etc/localtime, this file is a copy (of soft link) to the file representing the local timezone of the machine.

[oracle@xxxxxx ~]$ ls -la /etc/localtime

/etc/localtime: No such file or directory

I asked an SA to copy the file corresponding to our timezone (UTC) /usr/share/zoneinfo/UTC with the right privileges and the “error” was gone:

[oracle@xxxxxx ~]$ ls -la /etc/localtime

-rw-r--r-- 1 root root 118 Apr 17 11:49 /etc/localtime

[oracle@xxxxx ~]$ emctl status agent

Oracle Enterprise Manager 10g Release 5 Grid Control 10.2.0.5.0.

Copyright (c) 1996, 2009 Oracle Corporation. All rights reserved.

---------------------------------------------------------------

Agent Version : 10.2.0.5.0

OMS Version : 10.2.0.5.0

Protocol Version : 10.2.0.5.0

Agent Home : /opt/oracle/product/10.2.0/agent10g

Agent binaries : /opt/oracle/product/10.2.0/agent10g

Agent Process ID : 26229

Parent Process ID : 26203

Agent URL : https://xxxxxxxxx:xx/emd/main/

Repository URL : https://xxxxxxxxxxx:xx/em/upload

Started at : 2010-06-05 00:44:52

Started by user : oracle

Last Reload : 2010-06-05 00:50:40

Last successful upload : 2010-06-10 03:03:46

Total Megabytes of XML files uploaded so far : 83.17

Number of XML files pending upload : 0

Size of XML files pending upload(MB) : 0.00

Available disk space on upload filesystem : 50.90%

Last successful heartbeat to OMS : 2010-06-10 03:03:28

---------------------------------------------------------------

Agent is Running and Ready

As always, comments are welcome.

Creating a 3-node standby database. Part I 10 March 2010

Posted by David Alejo Marcos in ASM, Enterprise Manager, RAC, Standby.Tags: ASM, Enterprise Manager, RAC

comments closed

As I promised, I will be writing on how I did create a 3-node standby database.

The goal is to move from a single instance database to a 3-node RAC. The steps we are following, as the project is still going, are:

1.- Create a 3-node standby database in the UK. This will be our primary database.

2.- Create a 3-node standby database in the US. This will be our standby database.

3.- Keep current standby up to date.

4.- Test switchover from current single instance production database to 3-node RAC in the UK, then to the 3-node RAC in the US, back to 3-node RAC in the UK and, finally, back to current primary.

5.- Do the switchover for real.

After the switchover, we will keep current production and current DR up to date for rollback.

In this post I will cover the first point. The installation of the software and configuration as standby in the US is quite similar to the configuration we followed for the UK.

I did not have to install CRS as our target servers have a 3-node RAC database currently running. We will be sharing those servers for 2 different databases.

The first step is to download the software from oracle. Our target was 10.2.0.4.1 for Linux X86-64.

Steps to follow:

Installation of Software:

1.- Install Oracle 10.2.0.1 (p4679769_10201_Linux-x86-64.zip).

If you choose the Advance Installation, the installer will identify the server as part of CRS and will prompt you to installed on all servers. My suggestion is to accept, will save you time and headaches.

2.- Install Oracle 10.2.0.4 (p6079224_10204_Linux-x86-64.zip).

3.- Install Opatch 11, necessary for patches and PSU.

4.- Install PSU 10.2.0.4.1 (p8576156_10204_Linux-x86-64.zip).

Please, bear in mind that the PSU will be installed in rolling mode (all nodes on the CRS).

Once we have the required software on all three nodes we need the storage:

ASM:

We use ASM and ASMLib as standard for our Oracle databases. Once we have checked that we have the required LUNs we need to add them to ASMLib:

ls -lrt /dev/mapper to check the names and sizes:

ls -lr /dev/mapper:

…….

brw-rw—- 1 root disk 253, 16 Nov 28 11:34 bvm_fra

brw-rw—- 1 root disk 253, 15 Nov 28 11:34 bvm_data

brw-rw—- 1 root disk 253, 17 Nov 28 11:34 bvm_ctl_log

For ASMLib we need to connect as Root. The first step is to create Disks:

# cd /etc/init.d

# ./oracleasm createdisk DATA_BVM_1 /dev/mapper/bvm_data_1

Marking disk “DATA_BVM_1” as an ASM disk: [ OK ]

# ./oracleasm createdisk DATA_BVM_2 /dev/mapper/bvm_data_2

Marking disk “DATA_BVM_2” as an ASM disk: [ OK ]

# ./oracleasm createdisk DATA_BVM_3 /dev/mapper/bvm_data_3

Marking disk “DATA_BVM_3” as an ASM disk: [ OK ]

# ./oracleasm createdisk FRA_BVM /dev/mapper/bvm_fra

Marking disk “FRA_BVM” as an ASM disk: [ OK ]

# ./oracleasm createdisk LOGCTL_BVM_1 /dev/mapper/bvm_ctl_log_1

Marking disk “LOGCTL_BVM_1” as an ASM disk: [ OK ]

Then we check those disks are visible from ALL 3 nodes. I prefer to execute the following commands on all 3 nodes to avoid problems:

# ./oracleasm scandisks

# ./oracleasm listdisks

If you prefer, you can list the contents of /dev/oracleasm/disks.

Now we need to create the diskgroups.

For this we need to connect as sys on the ASM instance. We use external redundancy for our databases:

1.- Set the environment to ASM using oraenv and connect as sys.

2.- Create diskgroups:

SQL> create diskgroup DATA_BVM external redundancy disk ‘ORCL:DATA_BVM_1′,’ORCL:DATA_BVM_2′,’ORCL:DATA_BVM_3’;

Diskgroup created.

SQL> create diskgroup FRA_BVM external redundancy disk ‘ORCL:FRA_BVM’;

Diskgroup created.

SQL> create diskgroup LOGCTL_BVM external redundancy disk ‘ORCL:LOGCTL_BVM_1′;

Diskgroup created.

set linesize 180

col path form a50

select name, header_status, state, path from v$asm_disk;

NAME HEADER_STATU STATE PATH

———————- ———— ——– ————————————————–

……….

DATA_BVM MEMBER NORMAL ORCL:DATA_BVM

FRA_BVM MEMBER NORMAL ORCL:FRA_BVM

LOGCTL_BVM MEMBER NORMAL ORCL:LOGCTL_BVM

17 rows selected.

3.- Mount diskgroups on ALL ASM instances on the CRS.

SQL> alter diskgroup LOGCTL_BVM mount;

….

Now we can create the database.

There are different ways to create a database, DBCA, export-import, RMAN, etc.

The way I decided to create this standby database was using RMAN (duplicate target command); it is very straight forward.

the syntax I used is:

rman target sys/xxxx@prod

connect auxiliary /

run {

set until time “to_date(’06-MAR-2010 08:00:00′,’DD-MON-YYYY HH24:MI:SS’)” ;

ALLOCATE AUXILIARY CHANNEL c1 DEVICE TYPE disk;

ALLOCATE AUXILIARY CHANNEL c2 DEVICE TYPE disk;

duplicate target database to preprod nofilenamecheck;

}

you will need to have an entry on the tnsnames.ora to connect to production. Full backup was copied from production to one of the nodes.

On Part II, I will start with the dataguard configuration and the DR test.

LGWR is blocking sessions (or running very slow). 5 February 2010

Posted by David Alejo Marcos in Enterprise Manager, Tuning.Tags: Tuning

comments closed

I am aware this is a very generic subject or a very specific problem to every system or database, so I am going to write about the problem we had with one of our databases at the end of last week.

The problem:

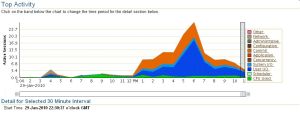

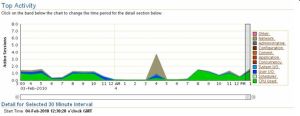

Last Friday, at lunchtime, we had a huge spike of orange looking wait event related to Commit (see fig_1) on Enterprise Manager. After digging a bit on some v$ views and Enterprise Manager we found that the LGWR was blocking several user sessions.

We raised a Severity 1 SR with oracle as the symptoms where the same as the ones described on Bug 5230765 – LGWR spins while waiting for ‘log file parallel write’ using ASM [ID 5230765.8. This bug was technically solved on 10.2.0.4 but it is always good to check things.

After uploading AWRs for the time the database was affected and the previous day when it was fine, some screen prints from Enterprise Manager, ASH for a short period of time and different log files (traces, alert log, etc); the best they could come up with was to resize the redo logs.

I must say the way they approached the problem was not right in my opinion.

Those redolog files never changed for the last 1+ year and we did not have the problem before. The problem went away at midnight and manifested itself on Monday and Tuesday, always around midday.

The solution:

The database is running on a single instance, Oracle + ASM 10.2.0.4, and Linux x86-64.

One of the trends I noticed (or lack of trend) was on the dml being executed. Some times it was an insert, sometimes and update on different objects and different schemas, so a hot block was unlikely to be the problem.

Another big clue was the number of waits in Buffer Gets and Disk Reads where well above average for both per execution and per session.

The Storage manager and the SAs checked the system several times while it was performing really bad and both said that it did not look different from previous days or weeks, so not much on their side.

Business also confirmed that the workload was not different from the previous day. We also have to bear in mind that the first time we had the problem was on the 31st; but the second and third time was on the 3rd and 4th, so we could discard any month end batch performing worst than before.

We decided to add two more redo log groups and increase the size by 2.5 times as this is an online operation with little impact on the business if the time frame is the right one, making a little different as the I/O and CPU was still very high.

The next change was to increase the SGA to solve the wait events on the buffer gets and disk reads. The reason I proposed to increase the SGA was that I thought we did not have enough space on the buffer cache to hold all the data the business was selecting plus inserts/updates.

As soon as the buffer cache was getting full, the LGWR was calling the DBWR to flush changes out of the memory to free space, over killing the server.

This change was not easy to get approved as requires downtime and the system is critical, but after missing couple of SLAs it was a go-go situation.

Once the change was done (increase the SGA_TARGET and SGA_MAX_SIZE), we monitored the system closely. All processes and batches finished on time and the load was much less (see fig_2 below).

Note.- the grey spike is due to a network problem between the primary site and the DR.

Conclusion:

You have to know your business and your databases. Oracle support came with an out of the book solution of increasing the redo logs but, as mentioned before, we did not change the size, neither the number for over 1 year.

We can get sometimes to focus on a single point (LGWR). Sometimes is good to make a step backwards and check the system. Different segments, different schemas, different dml and a huge amount buffer gets and disk read were the clue, not just LGWR blocking sessions.

We all have the rule of not switching redo logs more than x amount of times per hour, but sometimes we need to know why we have reached that number and not just increase the size as this may mask an underlying problem.

As always, comments are welcome.

ORA-06502: character string buffer too small while re/creating dbconsole 18 December 2009

Posted by David Alejo Marcos in Enterprise Manager, Grid Control, SQL - PL/SQL.Tags: Enterprise Manager, Grid Control, SQL - PL/SQL

comments closed

wow,

we are getting close to the end of this year. This may be the last post for 2009, we will have to think about next year…

The problem:

OK, the last thing we faced here was the following error while re-creating the dbconsole using the standard emca -config dbcontrol db -repos recreate command:

CONFIG: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

oracle.sysman.assistants.util.sqlEngine.SQLFatalErrorException: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

followed for some nasty Java alerts and:

SEVERE: Error creating the repository

….

oracle.sysman.emcp.exception.EMConfigException: Error creating the repository

The solution:

Well, the first thing to check is that you are on the right ORACLE_HOME, this is not going to solve the problem, but it will help us to modify the script on the right ORACLE_HOME the first time.

The problem is located on a variable used to store the name of the host.

Normally this variable is defined for 32 characters. This is normally right for most systems, but we had a problem with one of ours as the domain of the servers plus the name of the database had a length of 33 characters.

How can we check the length of our host_name?, quite simple:

SYS@XXXXXXX> col host_name for a40

SYS@databee> select host_name, length(host_name) length from v$instance;

HOST_NAME LENGTH

————————————————————————– ———-

hhhhhhhhhhhhhhhhh.dddddd.dddddddd 33

Elapsed: 00:00:00.09

I had to change the names for security reasons.

Ok, the problem is down to a single character, the length is 33 characters and the variable has been defined as:

l_host_name VARCHAR2(32);

so it can only store 32.

the fix is quite simple.

Steps:

1.- Check you are on the right ORACLE_HOME

echo $ORACLE_HOME

2.- cd $ORACLE_HOME/sysman/admin/emdrep/sql/core/latest/self_monitor

3.- cp self_monitor_post_creation.sql self_monitor_post_creation.sql_bk

4.- Edit self_monitor_post_creation.sql and replace

l_host_name VARCHAR2(32);

for a length that can store your host_name

l_host_name VARCHAR2(35);

NOTE.- there are two places where this variable is defined on the script, remember to modify the definition on both places.

Once the script has been changed, you can rerun the command to re/create the dbconsole:

emca -config dbcontrol db -repos recreate

Perfect.

As always, comments are welcome.

Merry Xmas.

Listener status is down (or pending) in Enterprise Manager 24 November 2009

Posted by David Alejo Marcos in Enterprise Manager.Tags: Agent, Grid Control

comments closed

This solution works for both Agents uploading data to a Grid Control Repository or to DBConsole.

We have a 3-nodes RAC and we are using dbconsole to monitor our production systems. We will be deploying Grid Control any time soon (providing Oracle fixes couple of bugs we discovered some time ago).

Until then, dbconsole is good enough.

Our configuration is as follows:

1.- Linux x86-64

2.- Oracle ASM (using ASMLib) 10.2.0.4

3.- Oracle Enterprise Edition 10.2.0.4.1

As you may know, Oracle recommends to run the listener from the ASM home instead of the database home and to have a different set of binaries for ASM in case you need to upgrade ASM (or you have more than one database running on the same server using different Oracle versions).

We run dbconsole from the Database Oracle home. This is as expected, but this leads us towards our problem, as the default location for SQL*Net configuration on RAC is the Oracle Home for the database.

For some time we have to deal with not knowing if the listener was down (as displayed on dbconsole) or pending (which normally indicates the agent is not uploading information to the repository.

Steps to deal with the problem are:

1.- Stop dbconsole/agent on all nodes.

2.- localte the target.xml file (normally under $ORACLE_HOME/sysman/emd/targets.xml if using dbconsole or $ORACLE_HOME/agent/sysman/emd/targets.xml if using Grid Control).

3.- make a backup of the file (always good practice)

4.- edit the file:

you need to search for an entry with the following format:

<Target TYPE=”oracle_listener” NAME=”LISTENER_xxxxxx”>

then you need to change the path on two properties:

<Property NAME=”ListenerOraDir” VALUE=”xxxxxxxxxx”/>

<Property NAME=”OracleHome” VALUE=”yyyyyyyyyyy”/>

where xxxxx should be your $ORACLE_HOME/network/admin

and yyyyyyy should be your $ORACLE_HOME

ie: /u01/app/oracle/admin/product/10.2.0/asm_1

5.- start dbconsole/agent on all nodes

6.- check the status of those listeners are up.

I read some documents on Oracle Support (old Metalink) and none work for us.

One of them was interesting by the fact of suggesting to add an environment variable (TNS_ADMIN) on the server. This is not recommended (at least on our systems) as we run ASM and DB and, hopefully one day, Grid Control Agent, and we like to keep things separated.

As always, comments are welcome.

You must be logged in to post a comment.