SQL Monitor details for later tuning. 29 March 2012

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL, Tuning. Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL, Tuning

Tuning has always been good fun, and something of a challenge, for me.

From time to time we are asked to find out why something ran slowly while we were asleep; answering this question is, in most cases, a challenge.

The problem:

"My batch ran slowly last night, can you let us know why?" or "Why did this query run slowly?" are questions we, as DBAs, have to answer from time to time.

The solution:

Oracle has provided us with many tools to dig out information about past operations. We have EM, AWR, ASH, the dba_hist_* views, scripts all over the internet, etc.

I must admit I use SQL Monitor quite often but, in a really busy environment, Oracle will only keep, with any luck, a couple of hours of SQL statements.

V$SQL_MONITOR and dbms_sqltune.report_sql_monitor have become tools I use most frequently.

The only problem I have is, as mentioned earlier, the number of SQLs stored on v$sql_monitor or, rather, the length of time being kept there.

Oracle will keep a certain number of SQLs (defined by a hidden parameter) and start recycling them so, by the time I am in the office, any SQL executed during the batch is long gone.
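To see how short the window really is on a given system, a quick check like the following can help. It is only a sketch: it assumes you have access to V$SQL_MONITOR (on RAC, GV$SQL_MONITOR would give the cluster-wide picture) and that the Tuning Pack is licensed.

```sql
-- How far back does V$SQL_MONITOR currently reach on this instance?
SELECT COUNT(*)            AS monitored_executions,
       MIN(sql_exec_start) AS oldest_execution,
       MAX(sql_exec_start) AS newest_execution
  FROM v$sql_monitor;
```

If oldest_execution is only a couple of hours old in the morning, anything the overnight batch ran has already been recycled.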

For this reason I came up with the following. I must admit it is not rocket science, but it does help me quite a lot.

It is like my small collection of “Bad Running Queries”. I only need another DBA, or an operator with certain privileges, to execute a simple procedure so that I can later try to find out what happened.

We need the following objects:

1.- A table to store the data:

CREATE TABLE perflog (
  asof           DATE,
  userid         VARCHAR2(30),
  sql_id         VARCHAR2(30),
  monitor_list   CLOB,
  monitor        CLOB,
  monitor_detail CLOB
);

2.- A procedure to insert the data I need for tuning:

CREATE OR REPLACE PROCEDURE perflog_pr (p_sql_id VARCHAR2 DEFAULT 'N/A')
AS
BEGIN
   IF p_sql_id = 'N/A'
   THEN
      -- No sql_id supplied: store only the list of monitored statements.
      INSERT INTO perflog
         SELECT SYSDATE,
                sys_context('USERENV', 'SESSION_USER'),
                p_sql_id,
                sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',
                                                          report_level => 'ALL'),
                NULL,
                NULL
           FROM DUAL;
   ELSE
      -- sql_id supplied: store the list plus both reports for that statement.
      INSERT INTO perflog
         SELECT SYSDATE,
                sys_context('USERENV', 'SESSION_USER'),
                p_sql_id,
                sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',
                                                          report_level => 'ALL'),
                sys.DBMS_SQLTUNE.report_sql_monitor (sql_id => p_sql_id,
                                                     TYPE => 'ACTIVE',
                                                     report_level => 'ALL'),
                sys.DBMS_SQLTUNE.report_sql_detail (sql_id => p_sql_id,
                                                    TYPE => 'ACTIVE',
                                                    report_level => 'ALL')
           FROM DUAL;
   END IF;

   COMMIT;
END;
/

3.- Grant necessary permissions:

grant select, insert on perflog to public
/
create public synonym perflog for perflog
/
grant execute on perflog_pr to public
/
create public synonym perflog_pr for perflog_pr
/
grant select any table, select any dictionary to <owner_code>
/

The way it works is as follows:

– If business complains regarding a specific query, the DBA or operator can call the procedure with the sql_id:

exec perflog_pr ('1f52b50sq59q');

This will store the date and time, the sql_id, the DBA/operator name and, most importantly, the status of the instance at that time (the list of monitored statements), a general view of the SQL and a detailed view of the SQL that is running slowly.

– If business complains regarding slowness but does not provide a specific query, we execute the following:

exec perflog_pr;

This will store the date and time, the DBA/operator name and a general view of the instance; the sql_id column holds the default value 'N/A'.
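Getting the reports back out is the other half of the job. The following is a minimal SQL*Plus sketch, not part of the original scripts: the spool file name is made up, and the SET values are simply large enough for typical reports. It lists what has been captured and writes the latest active report for one sql_id to an HTML file you can open in a browser.

```sql
-- List what has been captured, newest first.
SELECT asof, userid, sql_id,
       DBMS_LOB.getlength(monitor) AS monitor_chars
  FROM perflog
 ORDER BY asof DESC;

-- SQL*Plus settings so the CLOB is written out whole, without headings.
SET LONG 10000000 LONGCHUNKSIZE 10000000
SET PAGESIZE 0 LINESIZE 1000 TRIMSPOOL ON
SET HEADING OFF FEEDBACK OFF

-- Hypothetical file name; the sql_id is the one used in the example call.
SPOOL sqlmon_report.html
SELECT monitor
  FROM perflog
 WHERE sql_id = '1f52b50sq59q'
   AND asof = (SELECT MAX(asof)
                 FROM perflog
                WHERE sql_id = '1f52b50sq59q');
SPOOL OFF
```

The same approach works for the monitor_list and monitor_detail columns.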

Please, give it a go and let me know any thoughts.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

ORA-01555 caused by SQL statement below… on Active Dataguard after upgrade to 11.2.0.2 25 January 2011

Posted by David Alejo Marcos in Oracle 11.2, Standby, Tuning. Tags: Oracle 11.2, Standby, Tuning

Sometimes I wonder why we do upgrade our databases…

The problem:

After upgrading from 11.2.0.1 to 11.2.0.2, our standby databases (we use Active Data Guard) started recording ORA-01555 in the alert log after running for some time.

We actively use our standby databases to run some queries for reporting.

The solution:

We have two standby databases, one local (less than 50km away) and another one on a remote location (250km) from primary.

The first thing we did was to raise a ticket with Oracle and change one of the standby databases from “open read only” to “mounted” (I had experience with problems on active dataguard in the past).
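For reference, moving a physical standby from “open read only” to “mounted” can be sketched as below. This is an assumption about the setup rather than the exact commands we ran: it presumes the standby is managed manually from SQL*Plus as SYSDBA (not through the Data Guard broker) and that standby redo logs exist for real-time apply.

```sql
-- On the standby, connected as SYSDBA.
-- Stop redo apply before closing the database.
ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

-- Restart the instance in mount mode only (no read-only open).
SHUTDOWN IMMEDIATE
STARTUP MOUNT

-- Resume redo apply in the background.
ALTER DATABASE RECOVER MANAGED STANDBY DATABASE
  USING CURRENT LOGFILE DISCONNECT FROM SESSION;

-- Confirm the database is mounted rather than open read only.
SELECT open_mode FROM v$database;
```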

This proved useful in several ways:

1.- We narrowed the problem down to Active Data Guard. The database in mount status was applying log files without issues and not logging any errors. The other standby started to log errors after a few hours of running (the time seems to depend on the workload the database is under).

2.- Once we stop the database and open it read only again, it works for some time (not very elegant, but still useful).

3.- Oracle Support came with a bug and a fix.

The bug number is 10320455 and the new patch is 10018789 (to be applied on top of 11.2.0.2).

The problem itself seems to be a hang (lock) in the library cache. This lock causes the workload to accumulate without releasing resources and, after some time, ORA-01555 errors are logged.

As the patch only updates binaries (546 KB in size), we might apply it to DR first and, once we secure some downtime over the weekend, apply it to production. This will help us minimize the time the business is affected by having the standby databases in mounted status.

As always, comments are welcome.

Saving current statistics before gathering. 24 January 2011

Posted by David Alejo Marcos in Oracle 11.2, Tuning. Tags: Oracle 11.2, Tuning

One of the things I like the most about my job is the constant learning. It does not matter how long you have been working with Oracle; there is always something new to learn, even from old releases.

The problem:

As part of our upgrade to 11.2.0.2 and subsequent performance degradation, it was decided to gather statistics at schema level.

As a precaution, I wanted to perform a backup of the current statistics, in case the new statistics did not perform as well as expected.

In the past, I would have used DBMS_STATS.EXPORT_xxx_STATS to create a backup, where xxx is the object (database, schema, table, index, etc.)

DBMS_STATS.EXPORT_SCHEMA_STATS (
   ownname VARCHAR2,
   stattab VARCHAR2,
   statid  VARCHAR2 DEFAULT NULL,
   statown VARCHAR2 DEFAULT NULL);

But that was in the past.

The solution:

Reading the 11g documentation for DBMS_STATS.GATHER_SCHEMA_STATS, I noticed a few parameters I had never used before (from the Oracle documentation):

1.- stattab: User statistics table identifier describing where to save the current statistics.

2.- statid: Identifier (optional) to associate with these statistics within stattab.

3.- statown: Schema containing stattab (if different from ownname).

Backing up the current statistics to a table is as simple as:

1.- Create backup table:

SYS@xxx> begin
  dbms_stats.create_stat_table(
    ownname  => 'MYTEST',
    stattab  => 'DAVID_STATS',
    tblspace => 'USERS'
  );
end;
/

PL/SQL procedure successfully completed.

Elapsed: 00:00:00.11

2.- Gather stats:

SYS@xxx> BEGIN
  DBMS_STATS.GATHER_SCHEMA_STATS (
    ownname       => 'TEST',
    degree        => 12,
    cascade       => true,
    method_opt    => 'FOR ALL COLUMNS SIZE REPEAT',
    stattab       => 'DAVID_STATS',
    statid        => 'JANUARY_110122',
    statown       => 'MYTEST',
    no_invalidate => false);
END;
/

PL/SQL procedure successfully completed.

Elapsed: 00:44:09.44
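Before relying on the backup, it is worth checking that something actually landed in the statistics table. A small sketch, using the table and statid from the example above; as far as I know the TYPE column distinguishes table (T), index (I) and column (C) entries.

```sql
-- Count the saved entries per statid and entry type.
SELECT statid, type, COUNT(*) AS entries
  FROM mytest.david_stats
 GROUP BY statid, type
 ORDER BY statid, type;
```

An empty result for JANUARY_110122 would mean there were no previous statistics to save, or that the gather did not run with the stattab parameters.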

If you decide to roll back the statistics using your backup table as the source, you will need to execute:

begin
  dbms_stats.import_schema_stats(
    ownname => 'TEST',
    stattab => 'DAVID_STATS',
    statown => 'MYTEST',
    statid  => 'JANUARY_110122');
end;
/

These parameters have been there for some time now; I just never used them before as I always did a dbms_stats.import_xxx_stats and dbms_stats.export_xxx_stats. This shows me, yet again, there are always different ways to achieve the same goal.

Note.- We are all aware that, whenever statistics in the dictionary are modified, the old statistics are automatically saved for future restoring. But having the statistics backed up in your own table helps you keep track of them, and they will not be deleted automatically unless you decide to do so.
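For completeness, the automatic history mentioned in the note can be inspected and used as sketched below. The one-day interval is only an illustrative value; the restore point has to fall inside the window reported by the first query.

```sql
-- How long Oracle keeps old statistics, and how far back we can restore.
SELECT DBMS_STATS.get_stats_history_retention    AS retention_days,
       DBMS_STATS.get_stats_history_availability AS oldest_available
  FROM DUAL;

-- Restore the schema statistics as they were one day ago (illustrative value).
BEGIN
   DBMS_STATS.restore_schema_stats (
      ownname         => 'TEST',
      as_of_timestamp => SYSTIMESTAMP - INTERVAL '1' DAY);
END;
/
```

This is the path that stops working once the retention period has passed, which is exactly where your own statistics table still helps.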

As always, comments are welcome.

How to copy SQL Profile from one database to another one. 21 October 2010

Posted by David Alejo Marcos in Oracle 11.2, Tuning. Tags: Oracle 11.2, Tuning

This is a very straightforward procedure.

The problem:

I need to copy SQL Profiles from one database to another database for stability and testing.

The solution:

6 simple steps:

1.- Create staging table to store the SQL Profiles to be copied on Source database:

MYUSER@MYDB> EXEC DBMS_SQLTUNE.CREATE_STGTAB_SQLPROF (table_name => 'PROFILE_STGTAB');

2.- Copy SQL Profiles from SYS to the staging table:

MYUSER@MYDB> EXEC DBMS_SQLTUNE.PACK_STGTAB_SQLPROF (profile_category => '%', staging_table_name => 'PROFILE_STGTAB');

As I needed to copy all the SQL Profiles in my database, the ‘%’ value for profile_category was the best option.

3.- Export staging table.

4.- Create staging table on Destination Database:

MYUSER@MYDB> EXEC DBMS_SQLTUNE.CREATE_STGTAB_SQLPROF (table_name => 'PROFILE_STGTAB');

5.- Import data on Destination database.

6.- Create SQL Profiles on Destination database using data stored on staging table:

MYUSER@MYDB> EXEC DBMS_SQLTUNE.UNPACK_STGTAB_SQLPROF(replace => TRUE, staging_table_name => 'PROFILE_STGTAB');

I used replace = TRUE as I needed to have the same SQL Profiles on both databases.
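A quick sanity check after steps 5 and 6 could look like the sketch below. It is not part of the original procedure; it assumes the staging table lives in the current schema and that you can query DBA_SQL_PROFILES on both databases.

```sql
-- After step 5: the staging table on the destination should not be empty.
SELECT COUNT(*) AS staged_rows
  FROM profile_stgtab;

-- After step 6: run on both databases and compare the two lists.
SELECT name, category, status, created
  FROM dba_sql_profiles
 ORDER BY name;
```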

Note.- Please, refer to http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14258/d_sqltun.htm#CACFCAEC for a full list of parameters and options.

As always, comments are welcome.

High CPU caused by SYS (E000/EMNC background process) 15 July 2010

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, Tuning. Tags: Grid Control, Oracle 11.2, Tuning

One thing I have learned about being a production DBA is that our SLAs to fix problems are pretty much non-existent and troubleshooting skills are a “good thing to have”.

Today we had a problem on one of our most critical systems. This is a real-time database used by many clients, and poor performance means our clients miss their own SLAs.

The problem:

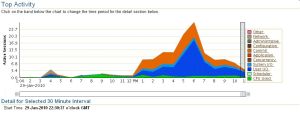

Several calls and many alerts regarding high CPU on the production box indicated something was not running as expected on production. I had a quick look and it did not look healthy:

Note.- the lower load was after I solved the problem.

I also had a look at the SYS session (E000) to see the load and gather as much information as I could:

Note.- the decrease on the workload is after the problem was fixed.

EMON is used by Streams; bearing in mind we do not use Streams technology as such, the only explanation was Oracle AQ.

Another clue to the solution was the ASH report, which showed Oracle spending too much time on the event “Wait for EMON to process ntfns”.
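The same signal can be pulled straight from ASH without generating the full report. A minimal sketch, assuming the Diagnostics Pack is licensed and the problem happened within the last hour (the one-hour window is my choice, not something from the incident):

```sql
-- Top wait events sampled by ASH over the last hour.
SELECT event, COUNT(*) AS samples
  FROM v$active_session_history
 WHERE sample_time > SYSTIMESTAMP - INTERVAL '1' HOUR
   AND session_state = 'WAITING'
 GROUP BY event
 ORDER BY samples DESC;
```

If “Wait for EMON to process ntfns” sits at or near the top, you are most likely looking at the same problem.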

The solution:

The EMON process was spinning, consuming resources needed for other processes. The only solution we have at this point in time (at least until Oracle releases the new PSU) is to kill the process.

The background process responsible is called EMNC, and this process spawns other processes labelled E000,…

First of all, we need the PID of the process running on the server:

[oracle@xxxxxx ~]$ ps -ef | grep -i emn

oracle 5952 1 0 Jul03 ? 00:00:00 ora_emnc_MFE

oracle 6667 5963 0 08:03 pts/8 00:00:00 grep -i emn

Once we have the PID, we proceed to kill the process:

[oracle@xxxxx ~]$ kill -9 5952

and we check it re-spawns:

[oracle@xxxxxxx ~]$ ps -ef | grep -i emn

oracle 6706 5963 0 08:04 pts/8 00:00:00 grep -i emn

[oracle@xxxxx ~]$ ps -ef | grep -i emn

oracle 6739 1 2 08:04 ? 00:00:00 ora_emnc_MFE

oracle 6955 5963 0 08:04 pts/8 00:00:00 grep -i emn

Once the process was killed the load on the database decreased dramatically and our alerts cleared.

Note.- Sometimes killing the emnc process is not enough and the spawned processes will also need to be terminated.

The rule of thumb is to check that the emnc process has restarted; if after 1 minute it has not, then proceed to terminate the e00? processes:

[oracle@ellison ~]$ ps -ef | grep -i e00

oracle 23537 1 7 07:34 ? 00:00:00 ora_e000_MFE

oracle 23539 1 7 07:34 ? 00:00:00 ora_e001_MFE

oracle 23541 1 32 07:34 ? 00:00:00 ora_e002_MFE

oracle 23543 1 13 07:34 ? 00:00:00 ora_e003_MFE

oracle 23545 1 54 07:34 ? 00:00:00 ora_e004_MFE

oracle 23547 5963 0 07:34 pts/8 00:00:00 grep -i e00

[oracle@xxxxxxx ~]$ kill -9 23537 23539 23541 23543 23545

As always, comments are welcome.

Two or more Threads on an Oracle single instance 9 March 2010

Posted by David Alejo Marcos in RAC, Tuning. Tags: Dataguard, RAC, Tuning

Have you ever moved from a RAC configuration to a single instance? This is one of the scenarios where you may end up with more than one thread on a single-instance database.

The problem is that having more than one thread is for RAC, not single instances, and it will give you problems such as difficulties restoring the database, or alerts if you have scripts monitoring that standby databases are kept up to date.

The problem:

Having two or more threads on a single instance.

Threads 2 and 3 were closed on our primary database:

SQL> select thread#, status, enabled, sequence#, checkpoint_time from v$thread;

   THREAD# STATUS ENABLED   SEQUENCE# CHECKPOIN
---------- ------ -------- ---------- ---------
         1 OPEN   PUBLIC        26956 09-MAR-10
         2 CLOSED PRIVATE          31 06-MAR-10
         3 CLOSED PRIVATE         104 09-MAR-10

but as you can see on the following query, Oracle was still using one of them (thread 3 in our case):

SQL> select thread#, sequence#, status from v$log;

   THREAD#  SEQUENCE# STATUS
---------- ---------- ----------------
         1      26947 INACTIVE
         1      26948 INACTIVE
         1      26949 CURRENT
         1      26945 INACTIVE
         1      26946 INACTIVE
         3        100 INACTIVE
         3        101 CURRENT
         2          0 UNUSED
         2          0 UNUSED

9 rows selected.

This was problematic once we tried to restore backups from that database, among other things.

The solution:

It is quite simple: we need to remove threads 2 and 3 from our database. The steps to follow are:

1.- disable unwanted threads:

ALTER DATABASE DISABLE THREAD 2;

ALTER DATABASE DISABLE THREAD 3;

2.- archive the thread that is in “CURRENT” or “INACTIVE” status from threads 2 or 3:

select group#, thread#, sequence#, status from v$log where status = 'CURRENT' and thread# in (2,3);

ALTER SYSTEM ARCHIVE LOG GROUP ????;

Replace ???? with each group# returned.

3.- Drop logfile groups belonging to threads 2 and 3:

ALTER DATABASE DROP LOGFILE GROUP 6;

ALTER DATABASE DROP LOGFILE GROUP 7;

ALTER DATABASE DROP LOGFILE GROUP 8;

ALTER DATABASE DROP LOGFILE GROUP 9;
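The group numbers above are the ones from our database; yours will differ. A sketch of the checks I would run before and after the drop, using only V$LOG and V$THREAD:

```sql
-- Before dropping: which groups belong to threads 2 and 3, and are they archived?
SELECT group#, thread#, sequence#, archived, status
  FROM v$log
 WHERE thread# IN (2, 3)
 ORDER BY thread#, group#;

-- After dropping: only thread 1 groups should be left.
SELECT group#, thread#, sequence#, archived, status
  FROM v$log
 ORDER BY thread#, group#;

-- Check the thread status as well.
SELECT thread#, status, enabled
  FROM v$thread;
```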

The reason:

The reason behind this problem may differ from system to system.

Some people have this problem because they copied the spfile (or init.ora) file from a different RAC system, and use it on a single instance database without removing RAC parameters.

Others (like us) have it because our primary database is RAC and the standby is single instance.

The init.ora parameter thread has a default value of 0 (zero) meaning that this instance will use any available, enabled public thread.

When we create a database, Oracle creates and enables thread 1 as public by default. This parameter is classified as a RAC parameter, but we can use it on a single instance if we decide to use a public thread other than 1, or to fix this problem.

We did our switchover test from node 3. This node has instance 3 from our 3-node RAC running. As soon as we did the switchover to a single instance, Oracle started using thread 1 (default) and thread 3 (the same thread defined from the source database at the time of the switch).

At that time we should have followed the steps above OR modified our init.ora (or spfile) to specify thread=1 for our single instance database.

From now on, we will add thread=1 to any single-instance database that has a RAC database as its standby or as its primary, to avoid this problem in the future.
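With an spfile, setting the parameter can be sketched as follows. THREAD is a static parameter, so the change only takes effect after the instance is restarted; with a plain init.ora you would add the line thread=1 to the file instead.

```sql
-- Pin the single instance to redo thread 1 (takes effect at next startup).
ALTER SYSTEM SET thread = 1 SCOPE = SPFILE;

-- After the restart, confirm the value from SQL*Plus.
SHOW PARAMETER thread
```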

As always, comments are welcome.

LGWR is blocking sessions (or running very slow). 5 February 2010

Posted by David Alejo Marcos in Enterprise Manager, Tuning. Tags: Tuning

I am aware this is a very generic subject or a very specific problem to every system or database, so I am going to write about the problem we had with one of our databases at the end of last week.

The problem:

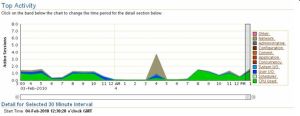

Last Friday, at lunchtime, we had a huge spike of orange-looking wait events related to Commit (see fig_1) in Enterprise Manager. After digging into some v$ views and Enterprise Manager, we found that LGWR was blocking several user sessions.

We raised a Severity 1 SR with Oracle, as the symptoms were the same as those described in Bug 5230765 – LGWR spins while waiting for ‘log file parallel write’ using ASM [ID 5230765.8]. This bug was technically solved in 10.2.0.4, but it is always good to check things.

After we uploaded AWRs for the time the database was affected and for the previous day when it was fine, some screen prints from Enterprise Manager, ASH for a short period of time and different log files (traces, alert log, etc.), the best they could come up with was to resize the redo logs.

I must say the way they approached the problem was not right in my opinion.

Those redo log files had not changed for over a year and we had not had the problem before. The problem went away at midnight and manifested itself again on Monday and Tuesday, always around midday.

The solution:

The database is running on a single instance, Oracle + ASM 10.2.0.4, and Linux x86-64.

One of the trends I noticed (or lack of trend) was in the DML being executed. Sometimes it was an insert, sometimes an update, on different objects and different schemas, so a hot block was unlikely to be the problem.

Another big clue was that the numbers for Buffer Gets and Disk Reads were well above average, both per execution and per session.

The Storage manager and the SAs checked the system several times while it was performing really badly, and both said that it did not look different from previous days or weeks, so there was not much on their side.

Business also confirmed that the workload was not different from the previous day. We also have to bear in mind that the first time we had the problem was on the 31st, but the second and third times were on the 3rd and 4th, so we could discard any month-end batch performing worse than before.

We decided to add two more redo log groups and increase their size by 2.5 times, as this is an online operation with little impact on the business if the time frame is the right one. It made little difference, as I/O and CPU were still very high.
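Adding redo log groups online can be sketched as below. The group numbers, the '+DATA' disk group and the 1280M size are hypothetical placeholders, not the values we used; existing groups cannot be resized in place, so larger groups are added and the old ones dropped once they are INACTIVE and archived.

```sql
-- Current groups and their sizes.
SELECT group#, thread#, bytes / 1024 / 1024 AS size_mb, members, status
  FROM v$log
 ORDER BY group#;

-- Add two larger groups (hypothetical group numbers, disk group and size).
ALTER DATABASE ADD LOGFILE GROUP 11 ('+DATA') SIZE 1280M;
ALTER DATABASE ADD LOGFILE GROUP 12 ('+DATA') SIZE 1280M;
```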

The next change was to increase the SGA to solve the wait events on the buffer gets and disk reads. The reason I proposed to increase the SGA was that I thought we did not have enough space on the buffer cache to hold all the data the business was selecting plus inserts/updates.

As soon as the buffer cache was getting full, LGWR was calling DBWR to flush changes out of memory to free space, overwhelming the server.

This change was not easy to get approved, as it requires downtime and the system is critical, but after missing a couple of SLAs it got the go-ahead.

Once the change was done (increase the SGA_TARGET and SGA_MAX_SIZE), we monitored the system closely. All processes and batches finished on time and the load was much less (see fig_2 below).
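The change itself boils down to two parameters and a restart. The sketch below uses a made-up 16G figure, not our real size; SGA_MAX_SIZE is static, which is why downtime is needed, and the advisory query at the end is one way to judge beforehand whether a bigger buffer cache is likely to cut physical reads.

```sql
-- Hypothetical sizes; both are written to the spfile and applied at restart.
ALTER SYSTEM SET sga_max_size = 16G SCOPE = SPFILE;
ALTER SYSTEM SET sga_target   = 16G SCOPE = SPFILE;

-- After the restart: how the SGA has been distributed.
SELECT component, current_size / 1024 / 1024 AS size_mb
  FROM v$sga_dynamic_components
 WHERE current_size > 0;

-- Buffer cache advisory: estimated physical reads at different cache sizes.
SELECT size_for_estimate AS cache_mb,
       estd_physical_read_factor,
       estd_physical_reads
  FROM v$db_cache_advice
 WHERE name = 'DEFAULT'
   AND advice_status = 'ON'
 ORDER BY size_for_estimate;
```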

Note.- the grey spike is due to a network problem between the primary site and the DR.

Conclusion:

You have to know your business and your databases. Oracle Support came up with a by-the-book solution of increasing the redo logs but, as mentioned before, we had changed neither their size nor their number for over a year.

We can sometimes get too focused on a single point (LGWR). Sometimes it is good to take a step back and check the whole system. Different segments, different schemas, different DML and a huge amount of buffer gets and disk reads were the clue, not just LGWR blocking sessions.

We all have the rule of not switching redo logs more than x amount of times per hour, but sometimes we need to know why we have reached that number and not just increase the size as this may mask an underlying problem.
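To see where you stand against that rule, the switch rate can be read from V$LOG_HISTORY. A minimal sketch; the seven-day window is an arbitrary choice, and the history only goes back as far as the control file keeps it.

```sql
-- Redo log switches per hour over the last seven days.
SELECT TRUNC(first_time, 'HH24') AS switch_hour,
       COUNT(*)                  AS log_switches
  FROM v$log_history
 WHERE first_time > SYSDATE - 7
 GROUP BY TRUNC(first_time, 'HH24')
 ORDER BY switch_hour;
```

A sudden jump at the same hour every day, as in our midday problem, is a hint to look at what changed in the workload rather than at the size of the redo logs.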

As always, comments are welcome.
