SQL Monitor details for later tuning. 29 March 2012

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL, Tuning. Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL, Tuning


Tuning has always been good fun and something of a challenge for me.

From time to time we are asked to find out why something ran slowly while we were asleep; answering this question is, in most cases, a challenge.

The problem:

“My batch ran slowly last night, can you let us know why?” or “Why did this query run slowly?” are questions we, as DBAs, have to answer from time to time.

The solution:

Oracle has provided us with many tools to dig out information about past operations: EM, AWR, ASH, the dba_hist_* views, scripts all over the internet, etc.

I must admit I use SQL Monitor quite often, but on a really busy environment Oracle will only keep, with luck, a couple of hours' worth of SQL statements.

V$SQL_MONITOR and dbms_sqltune.report_sql_monitor have become the tools I use most frequently.

The only problem I have is, as mentioned earlier, the number of statements stored in V$SQL_MONITOR or, rather, the length of time they are kept there.

Oracle keeps a certain number of monitored statements (defined by a hidden parameter) and then starts recycling them, so by the time I am in the office any SQL executed during the batch is long gone.

For this reason I came up with the following. I must admit it is not rocket science, but it does help me quite a lot.

It is my small collection of “Bad Running Queries”: all I need is for another DBA, or an operator with the right privileges, to execute a simple procedure, and I can later try to find out what happened.

We need the following objects:

1.- A table to store the data:

CREATE TABLE perflog (
   asof           DATE,
   userid         VARCHAR2(30),
   sql_id         VARCHAR2(30),
   monitor_list   CLOB,
   monitor        CLOB,
   monitor_detail CLOB
);

2.- A procedure to insert the data I need for tuning:

CREATE OR REPLACE PROCEDURE perflog_pr (p_sql_id VARCHAR2 DEFAULT 'N/A')
AS
BEGIN
   IF p_sql_id = 'N/A'
   THEN
      -- No sql_id supplied: keep only the list of monitored statements
      INSERT INTO perflog
         SELECT SYSDATE,
                sys_context('USERENV', 'SESSION_USER'),
                p_sql_id,
                sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE         => 'HTML',
                                                          report_level => 'ALL'),
                NULL,
                NULL
           FROM DUAL;
   ELSE
      -- sql_id supplied: keep the list plus the SQL Monitor and SQL detail reports
      INSERT INTO perflog
         SELECT SYSDATE,
                sys_context('USERENV', 'SESSION_USER'),
                p_sql_id,
                sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE         => 'HTML',
                                                          report_level => 'ALL'),
                sys.DBMS_SQLTUNE.report_sql_monitor (sql_id       => p_sql_id,
                                                     TYPE         => 'ACTIVE',
                                                     report_level => 'ALL'),
                sys.DBMS_SQLTUNE.report_sql_detail (sql_id       => p_sql_id,
                                                    TYPE         => 'ACTIVE',
                                                    report_level => 'ALL')
           FROM DUAL;
   END IF;

   COMMIT;
END;
/

3.- Grant necessary permissions:

grant select, insert on perflog to public;
create public synonym perflog for perflog;
grant execute on perflog_pr to public;
create public synonym perflog_pr for perflog_pr;
grant select any table, select any dictionary to <owner_code>;

The way it works is as follows:

– If the business complains about a specific query, the DBA or operator can call the procedure with its sql_id:

exec perflog_pr ('1f52b50sq59q');

This stores the date and time, the sql_id, the DBA/operator name and, most importantly, the state of the instance at that moment: the list of monitored statements (HTML), plus the SQL Monitor report and the detailed SQL report (both ACTIVE) for the slow statement.

– If the business complains about general slowness but does not provide a specific query, we execute:

exec perflog_pr;

This stores the date and time, 'N/A' as the sql_id, the DBA/operator name and the list of monitored statements, i.e. a general view of the instance.
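The stored reports are CLOBs containing HTML (the ACTIVE reports are HTML pages too), so one way to review them later is to write each one to a file and open it in a browser. The sketch below is a minimal Python illustration. It assumes the rows have already been fetched with a query such as SELECT asof, sql_id, monitor_list, monitor, monitor_detail FROM perflog, and that each CLOB has been read into a string (for example by calling read() on the LOB objects a Python Oracle driver returns). The function name save_reports and the file-naming scheme are hypothetical.

```python
from pathlib import Path

# Report columns of the perflog table, in the order they are selected.
REPORT_COLUMNS = ("monitor_list", "monitor", "monitor_detail")


def save_reports(rows, out_dir):
    """Hypothetical helper: write every non-NULL report to out_dir as .html.

    rows: iterable of (asof, sql_id, monitor_list, monitor, monitor_detail),
    with asof a datetime and each report already converted to str (or None).
    Returns the list of file names written, in order.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for asof, sql_id, *reports in rows:
        stamp = asof.strftime("%Y%m%d_%H%M%S")
        safe_id = sql_id.replace("/", "")  # 'N/A' -> 'NA', usable in a file name
        for column, html in zip(REPORT_COLUMNS, reports):
            if html is None:  # plain "exec perflog_pr;" stores NULL here
                continue
            name = f"{stamp}_{safe_id}_{column}.html"
            (out / name).write_text(html, encoding="utf-8")
            written.append(name)
    return written
```

Rows stored by a plain exec perflog_pr; have NULL in monitor and monitor_detail, so only the monitor list is written for them.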

Please give it a go and let me know your thoughts.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Extending Oracle Enterprise Manager (EM) monitoring. 29 January 2012

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, SQL - PL/SQL. Tags: Grid Control, Oracle 11.2, SQL, SQL - PL/SQL


I have always found Oracle Enterprise Manager (EM) to be an interesting tool, for different reasons. The only thing I missed was an easy way to create my own alerts.

It is very simple to write a KSH, Perl or similar script to do some customised monitoring and notify you by email, Nagios, NetCool, etc.

By integrating your scripts with OEM, you get an easy way to enhance your monitoring and still have notifications by email, SNMP traps, etc., just as you do today if your company uses OEM to monitor its systems.

The problem:

Develop an easy way to integrate your monitoring scripts with OEM.

The solution:

I decided to use an Oracle type and an Oracle function to accomplish this goal. Using the steps described below, we can monitor pretty much any aspect of the database, provided we can put the logic into a function.

As an example, I have added the steps to create two new User-Defined SQL Metrics, as Oracle calls them:

1.- Long Running Sessions (LRS).

2.- Tablespace Monitoring.

The reason for having my own tablespace monitoring is to improve on the existing one, which has “hard-coded” thresholds. You might have tablespaces in your database that are 6 TB in size and others of only 2 GB, so raising an alert at 95% for both of them is, in my opinion, not adequate.

You can find more about the query I developed in the post “Monitoring tablespaces with dynamic thresholds”.

The steps to create a script to monitor long running sessions (LRS) are:

1.- create types

CREATE OR REPLACE TYPE lrs_obj AS OBJECT (
   user_name     VARCHAR2(256),
   error_message VARCHAR2(2000));
/
CREATE OR REPLACE TYPE lrs_array AS TABLE OF lrs_obj;
/

2.- create function.

CREATE OR REPLACE FUNCTION lrs RETURN lrs_array
IS
   long_running_data lrs_array := lrs_array();
   ln_seconds_active NUMBER    := 300;
BEGIN
   SELECT lrs_obj(username || ' Sec: ' || sec_running,
                  ', Inst_id: ' || inst_id || ', SID: ' || sid ||
                  ', Serial: ' || serial || ', Logon: ' || session_logon_time ||
                  ', sql_id: ' || sql_id)
     BULK COLLECT INTO long_running_data
     FROM (SELECT /*+ FIRST_ROWS USE_NL(S,SQ,P) */
                  s.inst_id inst_id,
                  s.sid sid,
                  s.serial# serial,
                  s.last_call_et sec_running,
                  NVL(s.username, '(oracle)') AS username,
                  to_char(s.logon_time, 'DD-MM-YYYY HH24:MI:SS') session_logon_time,
                  s.machine,
                  NVL(s.osuser, 'n/a') AS osuser,
                  NVL(s.program, 'n/a') AS program,
                  s.event,
                  s.seconds_in_wait,
                  s.sql_id sql_id,
                  sq.sql_text
             FROM gv$session s, gv$sqlarea sq
            WHERE s.sql_id = sq.sql_id
              AND s.inst_id = sq.inst_id
              AND s.status = 'ACTIVE'
              AND s.last_call_et > ln_seconds_active
              AND s.paddr NOT IN (SELECT paddr FROM gv$bgprocess WHERE paddr != '00')
              AND s.type != 'BACKGROUND'
              AND s.username NOT IN ('SYSTEM', 'SYS')
              AND s.event != 'SQL*Net break/reset to client') CUSTOMER_QUERY;
   RETURN long_running_data;
END lrs;
/

3.- Grant privileges to the users who will be executing the monitoring scripts:

grant execute on lrs_obj to public;
grant execute on lrs_array to public;
grant execute on lrs to public;

4.- create synonyms

create public synonym lrs_obj for lrs_obj;
create public synonym lrs_array for lrs_array;
create public synonym lrs for lrs;

5.- Query to monitor

SELECT user_name, error_message FROM TABLE(CAST(lrs as lrs_array));

Once we are satisfied with the thresholds (300 seconds on the script), we are ready to add it to EM.

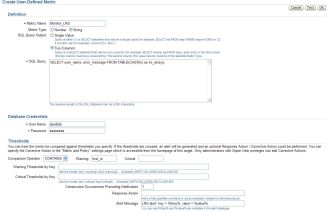

1.- Navigate to User-Defined SQL Metrics (you need to navigate to your database and you will find the link at bottom).

2.- Create new User-Defined SQL Metric and fill the gaps (I have attached some values for reference). The most important thing right now is to make sure metric_Type = String, Sql Query Output = two columns, comparison Operator = CONTAINS and warning has a value returned by the query (i did decide to go for Inst_id).

The only thing left now is to add this monitoring to your templates and to your rules so notifications are being sent.

Once all has been configure, you should start seeing alerts like this:

Target Name=lab01
Target type=Cluster Database
Host=mylab
Occurred At=Jan 22, 2012 14:35:47 PM GMT
Message=LRS alert: key = DAVIDLAB Sec: 530, value = , Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r
Metric=StrValue
Metric value=, Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r
Metric ID=lrs
Key=DAVIDLAB Sec: 530
Severity=Warning
Acknowledged=No
Notification Rule Name=david alerts
Notification Rule Owner=DAVIDLAB
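The alert above shows how the two-column output is used: the first column becomes the alert key and the second the value, which is compared with CONTAINS against the thresholds. The Python sketch below emulates that behaviour for illustration only; it is not EM's implementation. lrs_row rebuilds the strings the lrs function concatenates, and evaluate applies a CONTAINS-style check. Both function names are hypothetical, and so is the choice to test the Critical string before the Warning one.

```python
def lrs_row(username, sec_running, inst_id, sid, serial, logon, sql_id):
    """Hypothetical helper: the (key, value) pair lrs returns for one session."""
    key = f"{username} Sec: {sec_running}"
    value = (f", Inst_id: {inst_id}, SID: {sid}, Serial: {serial}, "
             f"Logon: {logon}, sql_id: {sql_id}")
    return key, value


def evaluate(rows, warning, critical=None):
    """Hypothetical emulation of a String metric with Comparison Operator = CONTAINS.

    Returns (key, severity) for each row whose value contains a threshold.
    Assumption: the Critical threshold is checked before the Warning one.
    """
    alerts = []
    for key, value in rows:
        if critical and critical in value:
            alerts.append((key, "Critical"))
        elif warning and warning in value:
            alerts.append((key, "Warning"))
    return alerts


key, value = lrs_row("DAVIDLAB", 530, 1, 153, 1597, "22-01-2012 12:21:46", "3m72fjep12w8r")
print(evaluate([(key, value)], warning="Inst_id"))
```

With the Warning threshold set to Inst_id, every row returned by lrs raises a Warning, which is why the 300-second threshold lives in the query itself. The same evaluate call with warning="WARNING" and critical="CRITICAL" models the tablespace metric configured below.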

For tablespace monitoring, the steps are the same as described above:

1.- create types

CREATE OR REPLACE TYPE tbs_obj AS OBJECT (
   tablespace_name VARCHAR2(256),
   error_message   VARCHAR2(2000));
/
CREATE OR REPLACE TYPE tbs_array AS TABLE OF tbs_obj;
/

2.- create function.

CREATE OR REPLACE FUNCTION calc_tbs_free_mb RETURN tbs_array IS
   tablespace_data tbs_array := tbs_array();
BEGIN
   SELECT tbs_obj(tablespace_name,
                  alert || ', Used_MB: ' || space_used_mb || ', PCT_Free: ' || pct_free ||
                  ', FreeMB: ' || free_space_mb)
     BULK COLLECT INTO tablespace_data
     FROM (SELECT (CASE
                     WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'
                     WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'
                     ELSE 'N/A'
                   END) alert,
                  tablespace_name,
                  space_used_mb,
                  ROUND (free_space/power(1024,3), 2) free_gb,
                  free_space_mb,
                  pct_free,
                  ROUND (extend_bytes/power(1024,3), 2) extend_gb,
                  free_extents,
                  max_size_gb,
                  maxextent
             FROM (SELECT c.tablespace_name,
                          NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,
                          NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,
                          (CASE
                             WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60
                             WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120
                             WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200
                             WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300
                             ELSE 340
                           END) free_extents,
                          a.extend_bytes, b.free_space,
                          ROUND (maxbytes /power(1024,3), 2) max_size_gb,
                          nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,
                          c.allocation_type,
                          GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,
                          64 maxextent
                     FROM ( SELECT tablespace_name,
                                   SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,
                                   SUM (BYTES) AS BYTES,
                                   SUM (maxbytes) maxbytes
                              FROM DBA_DATA_FILES
                             GROUP BY tablespace_name) A,
                          ( SELECT tablespace_name,
                                   SUM (BYTES) AS free_space,
                                   MAX (BYTES) largest
                              FROM DBA_FREE_SPACE
                             GROUP BY tablespace_name) b,
                          dba_tablespaces c
                    WHERE c.contents not in ('UNDO','TEMPORARY')
                      AND b.tablespace_name(+) = c.tablespace_name
                      AND a.tablespace_name = c.tablespace_name
                  )
          ) CUSTOMER_QUERY;
   RETURN tablespace_data;
END calc_tbs_free_mb;
/

3.- Grant privileges to the users who will be executing the monitoring scripts:

grant execute on tbs_obj to public;
grant execute on tbs_array to public;
grant execute on calc_tbs_free_mb to public;

4.- create synonyms

create public synonym tbs_obj for tbs_obj;
create public synonym tbs_array for tbs_array;
create public synonym calc_tbs_free_mb for calc_tbs_free_mb;

5.- Query to monitor

SELECT * FROM TABLE(CAST(calc_tbs_free_mb as tbs_array));

Please remember to use Comparison Operator = CONTAINS, Warning = WARNING and Critical = CRITICAL.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

How to list files on a directory from Oracle Database. 13 September 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL. Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL


A couple of days ago I had an interesting request: “How can I see the contents of nfs_dir?”

The problem:

We were using DBFS to store our exports. This was the perfect solution, as the business could “see” the files in the destination folder, but performance-wise it did not meet our requirements on our Exadata.

We decided to mount NFS instead and performance did improve, but we had a different problem: NFS is mounted on the database server, and the business does not have access to it, for security reasons and segregation of duties.

Since then the export jobs have run, but the business could not “see” which files were created, so the question was asked.

The solution:

After some research I came across the following procedure:

SYS.DBMS_BACKUP_RESTORE.searchFiles

I had to play with it a little, and ended up with the following script:

1.- Create an Oracle type

create type file_array as table of varchar2(100);
/

2.- Create the function as SYS:

CREATE OR REPLACE FUNCTION LIST_FILES (lp_string IN VARCHAR2 default null)
   RETURN file_array pipelined
AS
   lv_pattern VARCHAR2(1024);
   lv_ns      VARCHAR2(1024);
BEGIN
   -- Path to scan, taken from the NFS_DIR directory object
   SELECT directory_path
     INTO lv_pattern
     FROM dba_directories
    WHERE directory_name = 'NFS_DIR';

   -- searchFiles populates X$KRBMSFT with the files found under that path
   SYS.DBMS_BACKUP_RESTORE.SEARCHFILES(lv_pattern, lv_ns);

   -- With lp_string NULL the pattern becomes '%<file name>%', so every file matches
   FOR file_list IN (SELECT FNAME_KRBMSFT AS file_name
                       FROM X$KRBMSFT
                      WHERE FNAME_KRBMSFT LIKE '%' || NVL(lp_string, FNAME_KRBMSFT) || '%')
   LOOP
      PIPE ROW(file_list.file_name);
   END LOOP;

   RETURN;
END;
/

3.- Grant necessary permissions:

grant execute on LIST_FILES to public;
create public synonym list_files for sys.LIST_FILES;

4.- Test without WHERE clause:

TESTDB> select * from table(list_files);

COLUMN_VALUE
----------------------------------------------------------------------------------------------------
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB.log
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp
/nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_09092011.log
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old
/nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_12092011.log

8 rows selected.

Elapsed: 00:00:00.10

5.- Test with WHERE clause:

TESTDB> select * from table(list_files) where column_value like '%dmp%';

COLUMN_VALUE
----------------------------------------------------------------------------------------------------
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old

Elapsed: 00:00:00.12
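Seen from outside the database, LIST_FILES is just a directory listing with an optional substring filter. The Python sketch below mirrors that filter for illustration: with no search string, NVL(lp_string, FNAME_KRBMSFT) turns the pattern into '%<file name>%', which always matches, so every file is returned. It makes several assumptions: the directory is flat and is not read recursively, and, unlike LIKE, Python's in operator treats % and _ literally. The name list_files_py is hypothetical.

```python
import os


def list_files_py(directory, lp_string=None):
    """Hypothetical helper: sorted full paths of the files in directory
    (not recursive) whose path contains lp_string; None matches every file."""
    paths = []
    with os.scandir(directory) as entries:
        for entry in entries:
            if not entry.is_file():
                continue
            # Same idea as FNAME_KRBMSFT LIKE '%' || NVL(lp_string, FNAME_KRBMSFT) || '%'
            if lp_string is None or lp_string in entry.path:
                paths.append(entry.path)
    return sorted(paths)
```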

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Sysdate returns “wrong” time (time with timezone). 25 February 2011

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL. Tags: Oracle 11.2, SQL, SQL - PL/SQL


We are all used to selecting SYSDATE to get the date and time of the database; what we are not so used to is getting different values from Oracle depending on our timezone.

According to the Oracle documentation, SYSDATE does the following:

“SYSDATE returns the current date and time set for the operating system on which the database server resides. The data type of the returned value is DATE, and the format returned depends on the value of the NLS_DATE_FORMAT initialization parameter. The function requires no arguments. In distributed SQL statements, this function returns the date and time set for the operating system of your local database.”

Most people only read the first sentence, which says it returns the date and time set for the operating system on which the database server resides; but right at the end there is something we are all very familiar with: distributed SQL statements.

It is very hard to find a database that is not accessed from timezones other than that of the server hosting it, either because hosting is cheaper elsewhere or because we do business in different countries.

The Problem:

If I execute a simple query on our US server to return the date and time:

SQL> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss';

Session altered.

SQL> select sysdate from dual;

SYSDATE
-------------------
24/02/2011 14:12:18

SQL> exit
Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production
With the Partitioning and Automatic Storage Management options

I receive the date and time in UTC:

[oracle@sssssssss ~]$ date
Thu Feb 24 14:14:18 GMT 2011

From sqlplus in the UK:

C:\Documents and Settings\david.marcos>sqlplus daviddba@dddd

SQL*Plus: Release 10.2.0.4.0 - Production on Thu Feb 24 14:14:30 2011

Copyright (c) 1982, 2007, Oracle. All Rights Reserved.

Enter password:

Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production
With the Partitioning and Automatic Storage Management options

DAVIDDBA@dddd>
DAVIDDBA@dddd> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss';

Session altered.

Elapsed: 00:00:00.14
DAVIDDBA@dddd> select sysdate from dual;

SYSDATE
-------------------
24/02/2011 09:14:55

Elapsed: 00:00:00.20
DAVIDDBA@dddd>

It returns the date and time in NYC.

The Solution:

Quite simple: there is a file on the server called /etc/sysconfig/clock. This file configures the system timezone (ZONE) and whether the system clock is kept in Universal or local time (UTC).

[oracle@ssss ~]$ cat /etc/sysconfig/clock
ZONE="America/New_York"
UTC=true
ARC=false

ZONE should be:

[oracle@sssssss ~]$ cat /etc/sysconfig/clock
ZONE="UTC"
UTC=true
ARC=false

That way, clients also get UTC. After amending the value we were back in business.

There is another quick way to fix the problem, provided you do not have local_listener configured on your system: you can control the timezone of your sessions through the listener. Let me explain. If you connect from the server itself, you will probably not go through the listener, so SYSDATE should match the server's date and time.

If you start the listener with a different timezone (for example, using the TZ OS environment variable), you can get two different SYSDATE results: one when you select from the server and a different one when you connect through the listener.
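Dedicated server processes are spawned by the listener and inherit its environment, which is why the listener's TZ matters. The Python sketch below shows the same mechanism outside Oracle, assuming a Unix-like OS that honours POSIX TZ strings. Two child processes format the same instant: the UK session's query, 09:14:55 in New York, which is 14:14:55 UTC. One child runs with TZ=UTC0 and the other with TZ=EST5 (New York's winter offset, UTC-5). The children only stand in for server processes; nothing here is Oracle code, and local_time_under is a hypothetical name.

```python
import os
import subprocess
import sys
from datetime import datetime, timezone

# The instant of the UK session's query: 24/02/2011 14:14:55 UTC.
INSTANT = datetime(2011, 2, 24, 14, 14, 55, tzinfo=timezone.utc).timestamp()

# Child program: format INSTANT in the child's own local timezone, the way a
# server process derives SYSDATE from the OS clock and its environment.
CHILD = (
    "import sys, time; "
    "print(time.strftime('%d/%m/%Y %H:%M:%S', time.localtime(float(sys.argv[1]))))"
)


def local_time_under(tz):
    """Hypothetical helper: run a child with the given TZ and return its local time."""
    env = dict(os.environ, TZ=tz)  # the child inherits TZ, like a listener-spawned process
    result = subprocess.run(
        [sys.executable, "-c", CHILD, repr(INSTANT)],
        env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print("TZ=UTC0:", local_time_under("UTC0"))
    print("TZ=EST5:", local_time_under("EST5"))
```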

So, to fix your problem you will need to do the following:

1.- Configure the TZ variable on your server (e.g. export TZ="UTC")

2.- Stop listener

3.- Start listener

Done.

If you have local_listener configured, you will need to stop and start your database with the right TZ environment variable to fix the issue.

As always, questions are welcome.

Monitoring tablespaces with dynamic thresholds 13 December 2010

Posted by David Alejo Marcos in SQL - PL/SQL. Tags: SQL, SQL - PL/SQL


One of the problems we face while monitoring tablespaces is the difference in size: some of our tablespaces are 10-50 GB, while others are close to 1 TB.

10% free space on a 10 GB tablespace is not much on a fast-growing system, while 10% of a 1 TB tablespace is enough to keep us going for a month.

The problem:

We need a query that runs really quickly, is not heavy on the system and is clever enough to tell us whether having less than 20% free space is critical, a warning, or can wait.

The solution:

I came up with the following query:

SELECT (CASE
          WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'
          WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'
          ELSE 'N/A'
        END) alert,
       tablespace_name,
       space_used_mb,
       extend_bytes/1024/1024 extend_mb,
       free_space/1024/1024 free_mb,
       pct_free,
       free_extents,
       free_space_mb,
       max_size_mb,
       maxextent
  FROM (SELECT c.tablespace_name,
               NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,
               NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,
               (CASE
                  WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60
                  WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120
                  WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200
                  WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300
                  ELSE 340
                END) free_extents,
               a.extend_bytes, b.free_space,
               ROUND (maxbytes / 1024 / 1024, 2) max_size_mb,
               nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,
               c.allocation_type,
               GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,
               -- (SELECT NVL(MAX (bytes) / 1024 / 1024, 64) maxextent
               --    FROM dba_extents
               --   WHERE tablespace_name = a.tablespace_name) maxextent
               64 maxextent
          FROM ( SELECT tablespace_name,
                        SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,
                        SUM (BYTES) AS BYTES,
                        SUM (maxbytes) maxbytes
                   FROM DBA_DATA_FILES
                  GROUP BY tablespace_name) A,
               ( SELECT tablespace_name,
                        SUM (BYTES) AS free_space,
                        MAX (BYTES) largest
                   FROM DBA_FREE_SPACE
                  GROUP BY tablespace_name) b,
               dba_tablespaces c
         WHERE c.contents not in ('UNDO','TEMPORARY')
           AND b.tablespace_name(+) = c.tablespace_name
           AND a.tablespace_name = c.tablespace_name
           AND NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) < 20
       ) A
 WHERE free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20;

And this is how it works:

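1.- The filter c.contents NOT IN ('UNDO','TEMPORARY'): we do not raise alerts for undo or temporary tablespaces.

2.- The outer join b.tablespace_name(+) = c.tablespace_name between dba_tablespaces and dba_free_space. It is necessary because a tablespace with no free space left has no rows in dba_free_space; without the outer join you would stop receiving alerts precisely when you have run out of space.

3.- The pct_free < 20 predicate in the inner WHERE clause: we only evaluate tablespaces with less than 20% free space (including the space they can still autoextend into).

4.- The hard-coded 64 maxextent. I started by using the largest extent of the tablespace being monitored (the commented-out subquery, shown below), but we hit some bugs with dba_extents not having statistics, which generated a bad plan and made the query run for up to 2 minutes. If you are using tablespaces with Extent Allocation = Automatic, the biggest extent will be 64 MB, so I decided to use a hard-coded value instead.

(SELECT NVL(MAX (bytes) / 1024 / 1024, 64) maxextent
   FROM dba_extents
  WHERE tablespace_name = a.tablespace_name) maxextent

5.- The CASE expression that defines free_extents. This is where the logic happens: depending on the size of the tablespace (including its maximum autoextend size), we define how many extents' worth of free space must remain. These values might need to be adjusted for your systems.

6.- The CASE expression that defines alert, at the top of the query. Depending on the result of point 5, each tablespace is classified as N/A (not applicable), WARNING or CRITICAL: CRITICAL when its free space is at or below extent size × free_extents MB, WARNING when it is within a further 20 MB of that limit. Worth mentioning is the distinction between Extent Allocation Uniform and Automatic: for Uniform I use the uniform extent size, but never less than 64 MB, and for Automatic I assume 64 MB, so both are treated consistently.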
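To make the thresholds easier to reason about, the Python sketch below restates points 5 and 6 for a single tablespace. It is a simplification under stated assumptions: the pct_free < 20 pre-filter and the rounding done in SQL are left out, sizes are passed in directly instead of being read from the dictionary, and the function names are hypothetical.

```python
def free_extents_for(max_size_gb):
    """Extents' worth of free space to keep, by maximum tablespace size in GB
    (restates the free_extents CASE expression)."""
    if max_size_gb <= 30:
        return 60
    if max_size_gb <= 100:
        return 120
    if max_size_gb <= 300:
        return 200
    if max_size_gb <= 800:
        return 300
    return 340


def classify(free_space_mb, max_size_gb, allocation_type="SYSTEM", min_extlen_mb=0):
    """Hypothetical restatement of the alert CASE expression for one tablespace."""
    # UNIFORM: the uniform extent size, but at least 64 MB; otherwise 64 MB.
    extent_mb = max(min_extlen_mb, 64) if allocation_type == "UNIFORM" else 64
    limit_mb = extent_mb * free_extents_for(max_size_gb)
    if free_space_mb <= limit_mb:
        return "CRITICAL"
    if free_space_mb <= limit_mb + 20:
        return "WARNING"
    return "N/A"
```

For example, a tablespace with a maximum size of 20 GB falls in the first band (60 extents of 64 MB). It is therefore CRITICAL at or below 3,840 MB free and WARNING up to 3,860 MB.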

Please share your thoughts about this query; there is always more than one way to achieve the same goal in SQL.

As always, comments are welcome.

Importance to choose a good name for a variable 5 December 2009

Posted by David Alejo Marcos in SQL - PL/SQL. Tags: archivelog, pl/sql, redolog, SQL, tune, variable


After a very busy week, here we are again.

Last week was a busy one for different reasons. I was in Birmingham on Tuesday for the UKOUG conference. I will post my impressions of the presentations I attended.

The other reason was daily work.

On Monday we noticed a huge amount of redo being generated between 04:00 and 07:00. When I say huge, I really mean huge: we went from 3 archived logs (500 MB each) to 17.

We wondered whether this increase was a one-off (our batches could have been processing an unusual volume of data), but it wasn’t.

So, let's see how we troubleshot the problem:

Symptoms:

Archived logs increased from 3 files to 17 in one hour.

Problem:

The standby database in the US was lagging behind; this generated tickets and phone calls to the on-call DBA at 04:15 am.

Analysis:

We started the analysis using a simple query showing how many log switches the database had performed per hour.
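The log-switch query itself is not shown. A common way to get these figures is to group the FIRST_TIME column of V$LOG_HISTORY (one row per log sequence) by hour, but that choice of source is an assumption. The Python sketch below shows only the grouping step, applied to a list of timestamps; the function name is hypothetical.

```python
from collections import Counter


def switches_per_hour(first_times):
    """Hypothetical helper: count log switches per hour, given one timestamp
    per log sequence (for example FIRST_TIME values from V$LOG_HISTORY)."""
    hours = Counter(t.replace(minute=0, second=0, microsecond=0) for t in first_times)
    return dict(sorted(hours.items()))
```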

It was clear we had a dramatic increase in switches. We also noticed that the increase started on Monday at 04:00. Our batches are very light on Monday mornings, as we do not receive any data during the weekend; data arrives in our systems from Monday to Friday.

Using this knowledge we could almost rule out the batches as the source of the problem.

At 06:00 we noticed that a specific Oracle job (AUTO_SPACE_ADVISOR_JOB) was taking longer than usual and performing more inserts than the previous week.

We decided to disable the job for one night to see if that helped. It was a shot in the dark, but worth trying.

The next day we noticed a decrease in the number of archived logs, but only by 2 files (still, that is 1 GB). After re-enabling the job, we decided to attack the problem from a different direction.

Oracle has some very useful views for seeing what happened in the past; their names start with dba_hist_. I created the following query to find out which users were running the most DML on the database and were therefore the most likely to be generating the redo:

SELECT COUNT(*),
       a.sql_id,
       c.username
  FROM dba_hist_active_sess_history a,
       dba_hist_sqltext b,
       dba_users c
 WHERE a.sample_time BETWEEN to_date ('26/11/2009 04','dd/mm/yyyy hh24')
                         AND to_date ('26/11/2009 05','dd/mm/yyyy hh24')
   AND a.sql_id = b.sql_id
   AND (UPPER(b.sql_text) LIKE '%INSERT%'
        OR UPPER(b.sql_text) LIKE '%UPDATE%'
        OR UPPER(b.sql_text) LIKE '%DELETE%')
   AND a.user_id = c.user_id
 GROUP BY a.sql_id, c.username
 ORDER BY 1 DESC;

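This query showed me whose INSERT/UPDATE/DELETE statements were most active during the window defined. Note that each row in dba_hist_active_sess_history is a sample of an active session rather than an execution, so COUNT(*) indicates roughly how long each statement was active, not how many times it ran.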
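The aggregation can be restated in a few lines. By default, DBA_HIST_ACTIVE_SESS_HISTORY keeps about one sample every 10 seconds per active session, so each counted row stands for roughly 10 seconds of activity. The Python sketch below mirrors the query's logic on hypothetical in-memory data; the function name is also hypothetical. It deliberately keeps the query's text filter, which also matches SELECTs that merely contain those words (for example a column named LAST_UPDATE).

```python
from collections import Counter

DML_WORDS = ("INSERT", "UPDATE", "DELETE")


def dml_samples(samples, sql_texts):
    """Hypothetical helper: count ASH samples per (sql_id, username) for
    statements whose text contains INSERT, UPDATE or DELETE, most-sampled first.

    samples:   iterable of (sql_id, username), one per ASH sample row
    sql_texts: dict mapping sql_id -> SQL text (like dba_hist_sqltext)
    """
    counts = Counter()
    for sql_id, username in samples:
        text = sql_texts.get(sql_id)
        if text is None:  # inner join: samples without stored SQL text are dropped
            continue
        if any(word in text.upper() for word in DML_WORDS):
            counts[(sql_id, username)] += 1
    return counts.most_common()
```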

I ran the query for November 26th and December 3rd, for different windows (04:00-05:00 and 06:00-07:00).

The query proved very useful and identified the user and the sql_id that ran the most (for security reasons, I have amended the usernames). For November 26th:

COUNT(*) SQL_ID        USERNAME
-------- ------------- ----------
      58 0h9cf9v0d1bt0 batch1
       9 a8gj2w0at9xjp batch2
       6 c8wust7dkbqzy user1
       4 4sr1q0ryvy6zn batch3
       4 9aywg0c0wzmxv batch3

For December 3rd, this was the output:

COUNT(*) SQL_ID        USERNAME
-------- ------------- -----------
     168 9bmbf5t7580d8 User11
      50 0h9cf9v0d1bt0 batch1
       4 cg8dxqb0s8z7j user2

Wow, the number of samples for that single statement was unexpected. Let's see the code:

SELECT *
  FROM dba_hist_sqltext
 WHERE sql_id = '9bmbf5t7580d8';

DBID    SQL_ID        SQL_TEXT
------- ------------- ----------------------------------
XXXXXX  9bmbf5t7580d8 UPDATE TABLE SET FIELD3= FIELD3,
                      FIELD4 = FIELD4, FIELD5= FIELD5
                      WHERE DATE = DATE AND
                      CURVE = CURVE AND
                      INSTRUMENT = INSTRUMENT

A quick word with the developers confirmed that a new feature had been deployed the previous weekend: new code that inserted the data if it was not there, or updated it if it was already in the database (this was a production problem, so it was not the moment to explain what the MERGE command does).

I managed to get a copy of the procedure. The developers mentioned that the inserts were running very fast, but if the data was already in the table the same procedure was very slow, so they ran it in 2 threads from the application server.

I created a simple test in our QA environment and was able to reproduce the problem. The code is below:

procedure mypackage(
   date       table.date%TYPE,
   curve      table.CURVE%TYPE,
   instrument table.INSTRUMENT%TYPE,
   field3     table.field3%TYPE,
   field4     table.field4%TYPE,
   field5     table.field5%TYPE) IS
BEGIN
   ......
   BEGIN
      insert into table (date, CURVE, INSTRUMENT,
                         field3, field4, field5)
      VALUES (date, CURVE, INSTRUMENT,
              field3, field4, field5);
   EXCEPTION
      WHEN DUP_VAL_ON_INDEX THEN
         UPDATE table SET
            field3 = field3,
            field4 = field4,
            field5 = field5
          WHERE date = date and curve = curve
            and instrument = instrument;
   END;
END mypackage;

OK, even with this modified version of the code, you should be able to spot the problem.

Basically, the developer had decided to use the same name for each parameter and for the column being inserted or updated: the parameter curve held the value to insert into the column curve of the table.

For the INSERT this is fine: columns are not allowed in a VALUES clause, so the names resolve to the parameters. For the UPDATE, however, the rules are different: when a name in a SQL statement matches both a column and a PL/SQL variable or parameter, the column name takes precedence. The SET clause therefore assigned each column to itself, and Oracle was scanning and updating every single row of the table (8.9 million), as the condition:

WHERE date = date AND curve = curve AND instrument = instrument

was equivalent to:

WHERE 1=1

(strictly, for every row in which those columns are not NULL).

The second thread was updating every single row of the table (8.9 million) after every single insert done by the first thread... No wonder we were generating so many archived log files.
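The Python sketch below is only an analogy, since Python has no such scoping rule. It models the UPDATE's WHERE clause as a filter over a few hypothetical rows. In the first function the names resolve to columns, so each column is compared with itself and every row matches. In the second, the prefixed parameters (the p_ convention mentioned below) select only the intended row. Function names and data are hypothetical, and all values are non-NULL; in SQL, a NULL column compared with itself is not true.

```python
# Hypothetical rows of the table: the key columns plus one data column.
ROWS = [
    {"date": "2009-11-30", "curve": "EUR", "instrument": "X1", "field3": 1},
    {"date": "2009-11-30", "curve": "USD", "instrument": "X2", "field3": 2},
    {"date": "2009-12-01", "curve": "EUR", "instrument": "X1", "field3": 3},
]


def update_targets_same_names(rows, date, curve, instrument):
    """Model of the original UPDATE: inside the SQL statement the names resolve
    to columns, so each column is compared with itself and the parameters
    date, curve and instrument are never used."""
    return [r for r in rows
            if r["date"] == r["date"]
            and r["curve"] == r["curve"]
            and r["instrument"] == r["instrument"]]


def update_targets_prefixed(rows, p_date, p_curve, p_instrument):
    """Model of the fixed UPDATE: prefixed parameters cannot clash with column
    names, so each column is compared with the value passed in."""
    return [r for r in rows
            if r["date"] == p_date
            and r["curve"] == p_curve
            and r["instrument"] == p_instrument]
```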

Solution:

The first step to fix the problem was to disable one of the threads. We are lucky that the application is new, so it mostly performs inserts.

The developers are now renaming all variables and parameters to follow a naming convention, something like p_<parameter>, l_<variable>, etc.

As always, comments are welcome.

Split Strings in Oracle 4 November 2009

Posted by David Alejo Marcos in SQL - PL/SQL. Tags: SQL


I received an email from one of our developers asking the following question:

Do you know how to transform a horrid legacy column consisting of semicolon-separated strings into a view containing one row per (originally semicolon-separated) value? For example:

Existing table:

Row1: Value1; Value2;

Row2: Value3; Value4; Value5;

Desired view based on the above:

Row1: Value1

Row2: Value2

Row3: Value3

Row4: Value4

Row5: Value5

I thought it was quite an interesting question, so I created a simple test:

davidmarcos@devdb> create table mytest (pos number, description varchar2(1000));

Table created.

Elapsed: 00:00:00.40

davidmarcos@devdb> insert into mytest values (1,'value1;value2;value3');

1 row created.

Elapsed: 00:00:00.01

davidmarcos@devdb> insert into mytest values (2,'value4;value5');

1 row created.

Elapsed: 00:00:00.03

davidmarcos@devdb> insert into mytest values (3,'value6');

1 row created.

Elapsed: 00:00:00.04

davidmarcos@devdb> insert into mytest values (4,'value7;value8;value9;value10;value11');

1 row created.

Elapsed: 00:00:00.28

davidmarcos@devdb> commit;

Commit complete.

Elapsed: 00:00:00.03

davidmarcos@devdb> select * from mytest;

POS DESCRIPTION

------- ------------------------------------

1 value1;value2;value3

2 value4;value5

3 value6

4 value7;value8;value9;value10;value11

Elapsed: 00:00:00.06

The first thing I noticed is that the number of values varies and the column can be null. The easiest way I found to work out how many values each row had was to count the number of “;” and add 1 (in our test data, the last value is not followed by a semicolon):

davidmarcos@devdb>select description,

2 pos,

3 (length(description)-length(replace(description,';','')))+1 cnt

4 from mytest

5 where description is not null;

DESCRIPTION POS CNT

------------------------------------ ------- ----------

value1;value2;value3 1 3

value4;value5 2 2

value6 3 1

value7;value8;value9;value10;value11 4 5

Elapsed: 00:00:00.03

This query will become the core of my select.

The next step was to split the string. I decided to use regular expressions (regexp_substr, to be more specific).

To split the string I used the following:

regexp_substr(description,'[^;]+',1,level)

Where:

description is the column.

[^;]+ matches one or more consecutive characters that are not “;”, i.e. one value.

1 is the character position where the search starts (here, the first character of the string).

level tells Oracle which occurrence of the pattern to return.
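Both building blocks can be checked outside the database. The Python sketch below restates them. The count of ';' plus one gives cnt, and re.findall(r"[^;]+", ...) collects the same values as calling regexp_substr(description, '[^;]+', 1, level) for each level in turn. lstrip(" ") plays the role of ltrim. The function names are hypothetical.

```python
import re


def value_count(description):
    """Number of values as the query computes cnt: count of ';' plus one."""
    return description.count(";") + 1


def split_values(description):
    """Values in stored order, like regexp_substr(description, '[^;]+', 1, level)
    for level = 1, 2, ..., with leading spaces removed as ltrim does."""
    return [value.lstrip(" ") for value in re.findall(r"[^;]+", description)]


print(split_values("value7;value8;value9;value10;value11"))
```

With a trailing semicolon, as in the developer's example ("Value1; Value2;"), value_count returns 3 while split_values finds only two values. In the SQL version, that extra level makes regexp_substr return NULL.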

The query looks like:

with data
as
(
  select description,
         pos,
         (length(description)-length(replace(description,';','')))+1 cnt
    from mytest
   where description is not null
)
select distinct ltrim(regexp_substr(description,'[^;]+',1,level)) result, pos
  from data
connect by level <= cnt
 order by pos, result

Note:

– ltrim was used to remove any blanks at the beginning of the string.

davidmarcos@devdb > with data

2 as

3 (

4 select description,

5 pos,

6 (length(description)-length(replace(description,';','')))+1 cnt

7 from mytest

8 where description is not null

9 )

10 select distinct ltrim(regexp_substr(description,'[^;]+',1,level)) result, pos

11 from data

12 connect by level <= cnt

13 order by pos, result

14 /

RESULT POS

--------------------------------------- ----------

value1 1

value2 1

value3 1

value4 2

value5 2

value6 3

value10 4

value11 4

value7 4

value8 4

value9 4

11 rows selected.

Elapsed: 00:00:00.37

So far, so good. The only missing detail is to order the rows properly: sorting by result puts value10 and value11 before value7, because the sort is alphabetical. I decided to order by level instead, and to hide level from the user (he is not really interested in the level, but in having the information ordered in the same way it was stored in the column):

davidmarcos@devdb> with data

2 as

3 (

4 select description,

5 pos,

6 (length(description)-length(replace(description,';','')))+1 cnt

7 from mytest

8 where description is not null

9 ) select result, pos

10 from (

11 select distinct ltrim(regexp_substr(description,'[^;]+',1,level)) result, pos, level

12 from data

13 connect by level <= cnt

14 order by pos, level

15 )

16 /

RESULT POS

---------------------------------------- ----------

value1 1

value2 1

value3 1

value4 2

value5 2

value6 3

value7 4

value8 4

value9 4

value10 4

value11 4

11 rows selected.

Elapsed: 00:00:00.17
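Why does the query need DISTINCT at all? Without a PRIOR condition, CONNECT BY LEVEL <= cnt links every row at one level to every row, from any pos, that satisfies the condition at the next level, so the number of generated rows multiplies. The Python sketch below simulates that expansion for the four test rows (cnt = 3, 2, 1, 5) under this model of CONNECT BY semantics. In the model, 88 rows are generated before DISTINCT reduces them to the 11 unique (pos, level) pairs. A commonly used alternative in Oracle keeps each row's hierarchy separate with CONNECT BY PRIOR pos = pos AND PRIOR SYS_GUID() IS NOT NULL AND LEVEL <= cnt, assuming pos is unique, which makes DISTINCT unnecessary. Also, Oracle only guarantees row order for an ORDER BY in the outermost query, so ORDER BY pos, level is safer placed there than inside the inline view. The function names below are hypothetical.

```python
def connect_by_no_prior(cnts):
    """Model of CONNECT BY LEVEL <= cnt without PRIOR over rows whose cnt values
    are given in cnts. Returns one (row_index, level) pair per generated row."""
    generated = []
    frontier = [(i, 1) for i in range(len(cnts))]  # level 1: every row is a root
    while frontier:
        generated.extend(frontier)
        next_level = []
        for _parent, level in frontier:
            child_level = level + 1
            # Every row (from any pos) whose cnt allows child_level becomes a child.
            next_level.extend((j, child_level) for j, cnt in enumerate(cnts)
                              if child_level <= cnt)
        frontier = next_level
    return generated


def per_row_levels(cnts):
    """What the query is meant to produce: levels 1..cnt for each row only."""
    return [(i, level) for i, cnt in enumerate(cnts) for level in range(1, cnt + 1)]


CNTS = [3, 2, 1, 5]  # cnt for pos 1..4 in mytest
print(len(connect_by_no_prior(CNTS)), len(set(connect_by_no_prior(CNTS))))
```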
