SQL Monitor details for later tuning. 29 March 2012

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL, Tuning. Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL, Tuning


Tuning has always been good fun and something of a challenge for me.

From time to time we are asked to find out why something ran slowly while we were sleeping; answering this question is, in most cases, a challenge.

The problem:

“My batch ran slowly last night, can you let us know why?” or “Why did this query run slowly?” are questions we, as DBAs, have to answer from time to time.

The solution:

Oracle has provided us with many tools to dig out information about past operations. We have EM, AWR, ASH, dba_hist_* tables, scripts all over the internet, etc.

I must admit I use SQL Monitor quite often, but on a really busy environment Oracle will only keep, with any luck, a couple of hours of SQL statements.

V$SQL_MONITOR and dbms_sqltune.report_sql_monitor have become the tools I use most frequently.

The only problem I have is, as mentioned earlier, the number of SQL statements stored in v$sql_monitor or, rather, the length of time they are kept there.

Oracle will keep a certain number of SQLs (defined by a hidden parameter) and start recycling them so, by the time I am in the office, any SQL executed during the batch is long gone.
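To get a feel for how far back the monitoring data goes on a given instance, a quick check along the following lines can help. It is only a sketch: it assumes you can query V$SQL_MONITOR, and the column aliases are my own.

```sql
-- How much history does V$SQL_MONITOR currently hold on this instance?
-- Parallel statements have one row per process, so monitor_rows counts rows,
-- not distinct statements.
SELECT MIN(sql_exec_start)    AS oldest_execution,
       MAX(sql_exec_start)    AS newest_execution,
       COUNT(*)               AS monitor_rows,
       COUNT(DISTINCT sql_id) AS distinct_sql_ids
  FROM v$sql_monitor;
```

If oldest_execution is only a couple of hours old in the middle of the batch window, anything you want to analyse the next morning has to be saved somewhere else.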

For this reason I came up with the following. I must admit it is not rocket science, but it does help me quite a lot.

It is like my small collection of “Bad Running Queries”. I only need another DBA, or an operator with certain privileges, to execute a simple procedure so that I can later try to find out what happened.

We need the following objects:

1.- A table to store the data:

CREATE TABLE perflog (
  asof           DATE,
  userid         VARCHAR2(30),
  sql_id         VARCHAR2(30),
  monitor_list   CLOB,
  monitor        CLOB,
  monitor_detail CLOB
);

2.- A procedure to insert the data I need for tuning:

CREATE OR REPLACE PROCEDURE perflog_pr (p_sql_id VARCHAR2 DEFAULT 'N/A')
AS
BEGIN
  IF p_sql_id = 'N/A'
  THEN
    INSERT INTO perflog
      SELECT SYSDATE,
             sys_context('USERENV', 'SESSION_USER'),
             p_sql_id,
             sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',
                                                       report_level => 'ALL'),
             NULL,
             NULL
        FROM DUAL;
  ELSE
    INSERT INTO perflog
      SELECT SYSDATE,
             sys_context('USERENV', 'SESSION_USER'),
             p_sql_id,
             sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',
                                                       report_level => 'ALL'),
             sys.DBMS_SQLTUNE.report_sql_monitor (sql_id => p_sql_id,
                                                  TYPE => 'ACTIVE',
                                                  report_level => 'ALL'),
             sys.DBMS_SQLTUNE.report_sql_detail (sql_id => p_sql_id,
                                                 TYPE => 'ACTIVE',
                                                 report_level => 'ALL')
        FROM DUAL;
  END IF;
  COMMIT;
END;
/

3.- Grant necessary permissions:

grant select, insert on perflog to public
/
create public synonym perflog for perflog
/
grant execute on perflog_pr to public
/
create public synonym perflog_pr for perflog_pr
/
grant select any table, select any dictionary to <owner_code>
/

The way it works is as follows:

– If the business complains about a specific query, the DBA or operator can call the procedure with the sql_id:

exec perflog_pr ('1f52b50sq59q');

This will store the datetime, the sql_id, the DBA/operator name and, most importantly, the status of the instance at that time, a general view of the SQL and a detailed view of the slow-running SQL.

– If the business complains about slowness but does not provide a specific query, we execute the following:

exec perflog_pr;

This will store the datetime, sql_id, DBA/operator name and a general view of the instance.
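Once a few reports have been captured, they need to be read back. The following is a minimal SQL*Plus sketch of how that could be done; the spool file name is hypothetical, the sql_id is the one from the example call above, and the SET values are simply generous limits so the CLOB is not truncated.

```sql
-- List what has been captured so far (newest first).
SELECT asof, userid, sql_id
  FROM perflog
 ORDER BY asof DESC;

-- SQL*Plus settings so the CLOB is written in full, without headings or wrapping.
SET LONG 10000000 LONGCHUNKSIZE 10000000 LINESIZE 32767
SET PAGESIZE 0 TRIMSPOOL ON FEEDBACK OFF

-- sqlmon_report.html is a hypothetical file name.
SPOOL sqlmon_report.html
SELECT monitor
  FROM perflog
 WHERE sql_id = '1f52b50sq59q'
   AND asof = (SELECT MAX(asof) FROM perflog WHERE sql_id = '1f52b50sq59q');
SPOOL OFF
```

The spooled file can then be opened in a browser. The same approach works for the monitor_list and monitor_detail columns.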

Please give it a go and let me know your thoughts.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Extending Oracle Enterprise Manager (EM) monitoring. 29 January 2012

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, SQL - PL/SQL. Tags: Grid Control, Oracle 11.2, SQL, SQL - PL/SQL


I always found Oracle Enterprise Manager (EM) to be an interesting tool for different reasons. The only thing I missed was an easy way to create my own alerts.

It is very simple to create a KSH, Perl or similar script to do some customised monitoring and notify you by email, Nagios, NetCool, etc.

By integrating your scripts with OEM, you will have an easy way to enhance your monitoring and still have notification by email, SNMP traps, etc. as you would currently have if your company is using OEM for monitoring your systems.

The problem:

Develop an easy way to integrate your monitoring scripts with OEM.

The solution:

I decided to use an Oracle type and an Oracle function to accomplish this goal. Using the steps described below, we can monitor pretty much any aspect of the database, provided you can put the logic into a function.

As an example, I have added the steps to create two new User-Defined SQL Metrics, as Oracle calls them:

1.- Long Running Sessions (LRS).

2.- Tablespace Monitoring.

The reason to have my own tablespace (TBS) monitoring is to improve on the existing one, as it has “hard-coded” thresholds. You might have tablespaces in your database that are 6 TB in size and others of only 2 GB, so raising an alert at 95% for both of them is, in my opinion, not adequate.

You can find more about the query I developed here.

The steps to create a script to monitor long running sessions (LRS) are:

1.- create types

CREATE OR REPLACE TYPE lrs_obj as OBJECT (
  user_name     VARCHAR2(256),
  error_message varchar(2000));
/
CREATE OR REPLACE TYPE lrs_array AS TABLE OF lrs_obj;
/

2.- create function.

CREATE OR REPLACE FUNCTION lrs RETURN lrs_array IS
  long_running_data lrs_array := lrs_array();
  ln_seconds_active number := 300;
BEGIN
  SELECT lrs_obj(username||' Sec: '||sec_running,
                 ', Inst_id: '||inst_id||', SID: '||sid||', Serial: '|| serial||', Logon: '||session_logon_time||', sql_id: '||sql_id)
    BULK COLLECT INTO long_running_data
    FROM (SELECT /*+ FIRST_ROWS USE_NL(S,SQ,P) */
                 s.inst_id inst_id,
                 s.sid sid,
                 s.serial# serial,
                 s.last_call_et sec_running,
                 NVL(s.username, '(oracle)') AS username,
                 to_char(s.logon_time, 'DD-MM-YYYY HH24:MI:SS') session_logon_time,
                 s.machine,
                 NVL(s.osuser, 'n/a') AS osuser,
                 NVL(s.program, 'n/a') AS program,
                 s.event,
                 s.seconds_in_wait,
                 s.sql_id sql_id,
                 sq.sql_text
            from gv$session s, gv$sqlarea sq
           where s.sql_id = sq.sql_id
             and s.inst_id = sq.inst_id
             and s.status = 'ACTIVE'
             and s.last_call_et > ln_seconds_active
             and s.paddr not in ( select paddr from gv$bgprocess where paddr != '00' )
             and s.type != 'BACKGROUND'
             and s.username not in ( 'SYSTEM', 'SYS' )
             AND s.event != 'SQL*Net break/reset to client'
         ) CUSTOMER_QUERY;
  RETURN long_running_data;
END lrs;
/

3.- Grant privileges to the users who will be executing the monitoring scripts:

grant execute on lrs_obj to public;
grant execute on lrs_array to public;
grant execute on lrs to public;

4.- create synonyms

Create public synonym lrs_obj for lrs_obj;
Create public synonym lrs_array for lrs_array;
Create public synonym lrs for lrs;

5.- Query to monitor

SELECT user_name, error_message FROM TABLE(CAST(lrs as lrs_array));

Once we are satisfied with the thresholds (300 seconds on the script), we are ready to add it to EM.
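Before settling on a threshold, it can be useful to see what a given value would catch right now. The query below is only a sketch: it is a stripped-down version of the query inside the lrs function, with the threshold written as a literal so it is easy to change.

```sql
-- Ad hoc check: which user sessions have been active longer than a candidate threshold?
-- 300 mirrors ln_seconds_active in the lrs function; change it to try other values.
SELECT s.inst_id,
       s.sid,
       s.serial#,
       s.username,
       s.last_call_et AS sec_running,
       s.sql_id
  FROM gv$session s
 WHERE s.status = 'ACTIVE'
   AND s.type != 'BACKGROUND'
   AND s.username NOT IN ('SYSTEM', 'SYS')
   AND s.last_call_et > 300
 ORDER BY s.last_call_et DESC;
```

If the list is full of sessions you would never want to be paged for, the threshold (or the filters) probably needs adjusting before the metric goes into EM.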

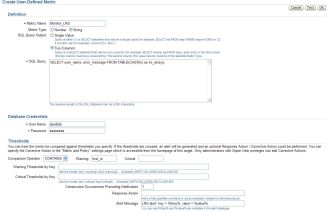

1.- Navigate to User-Defined SQL Metrics (you need to navigate to your database and you will find the link at the bottom).

2.- Create a new User-Defined SQL Metric and fill in the fields (I have attached some values for reference). The most important thing right now is to make sure Metric Type = String, SQL Query Output = Two Columns, Comparison Operator = CONTAINS, and Warning has a value returned by the query (I decided to go for Inst_id).

The only thing left now is to add this monitoring to your templates and to your rules so notifications are being sent.

Once everything has been configured, you should start seeing alerts like this:

Target Name=lab01
Target type=Cluster Database
Host=mylab
Occurred At=Jan 22, 2012 14:35:47 PM GMT
Message=LRS alert: key = DAVIDLAB Sec: 530, value = , Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r
Metric=StrValue
Metric value=, Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r
Metric ID=lrs
Key=DAVIDLAB Sec: 530
Severity=Warning
Acknowledged=No
Notification Rule Name=david alerts
Notification Rule Owner=DAVIDLAB

For Tablespace monitoring the steps will be the same as described above:

1.- create types

CREATE OR REPLACE TYPE tbs_obj as OBJECT (
  tablespace_name VARCHAR2(256),
  error_message   varchar(2000));
/
CREATE OR REPLACE TYPE tbs_array AS TABLE OF tbs_obj;
/

2.- create function.

CREATE OR REPLACE FUNCTION calc_tbs_free_mb RETURN tbs_array IS
  tablespace_data tbs_array := tbs_array();
BEGIN
  SELECT tbs_obj(tablespace_name, alert||', Used_MB: '||space_used_mb||', PCT_Free: '||pct_free||', FreeMB: '|| free_space_mb)
    BULK COLLECT INTO tablespace_data
    FROM (SELECT (CASE
                    WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'
                    WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'
                    ELSE 'N/A'
                  END) alert,
                 tablespace_name,
                 space_used_mb,
                 ROUND (free_space/power(1024,3), 2) free_gb,
                 free_space_mb,
                 pct_free,
                 ROUND (extend_bytes/power(1024,3), 2) extend_gb,
                 free_extents,
                 max_size_gb,
                 maxextent
            FROM (SELECT c.tablespace_name,
                         NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,
                         NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,
                         (CASE
                            WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60
                            WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120
                            WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200
                            WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300
                            ELSE 340
                          END) free_extents,
                         a.extend_bytes, b.free_space,
                         ROUND (maxbytes /power(1024,3), 2) max_size_gb,
                         nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,
                         c.allocation_type,
                         GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,
                         64 maxextent
                    FROM ( SELECT tablespace_name,
                                  SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,
                                  SUM (BYTES) AS BYTES,
                                  SUM (maxbytes) maxbytes
                             FROM DBA_DATA_FILES
                            GROUP BY tablespace_name) A,
                         ( SELECT tablespace_name,
                                  SUM (BYTES) AS free_space,
                                  MAX (BYTES) largest
                             FROM DBA_FREE_SPACE
                            GROUP BY tablespace_name) b,
                         dba_tablespaces c
                   WHERE c.contents not in ('UNDO','TEMPORARY') and
                         b.tablespace_name(+) = c.tablespace_name
                     AND a.tablespace_name = c.tablespace_name
                 )
         ) CUSTOMER_QUERY;
  RETURN tablespace_data;
END calc_tbs_free_mb;
/

3.- Grant privileges to the users who will be executing the monitoring scripts:

grant execute on tbs_obj to public;
grant execute on tbs_array to public;
grant execute on calc_tbs_free_mb to public;

4.- create synonyms

Create public synonym tbs_obj for tbs_obj;
Create public synonym tbs_array for tbs_array;
Create public synonym calc_tbs_free_mb for calc_tbs_free_mb;

5.- Query to monitor

SELECT * FROM TABLE(CAST(calc_tbs_free_mb as tbs_array));

Please remember to use comparison operator = CONTAINS, warning = WARNING and critical = CRITICAL.
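To make the thresholds in calc_tbs_free_mb easier to reason about, the sketch below spells out the arithmetic implied by its CASE expressions for a tablespace that is not UNIFORM (where the multiplier is the fixed 64 MB maxextent). The band labels and column aliases are mine; the numbers come straight from the function. The second query simply filters the function output down to the rows EM would alert on.

```sql
-- Free-space thresholds implied by calc_tbs_free_mb when allocation_type is not UNIFORM.
-- The band is chosen from the tablespace's total maxbytes.
SELECT max_size_band,
       free_extents,
       free_extents * 64      AS critical_at_or_below_mb,
       free_extents * 64 + 20 AS warning_at_or_below_mb
  FROM (SELECT 'up to 30 GB' AS max_size_band, 60 AS free_extents FROM dual UNION ALL
        SELECT 'up to 100 GB', 120 FROM dual UNION ALL
        SELECT 'up to 300 GB', 200 FROM dual UNION ALL
        SELECT 'up to 800 GB', 300 FROM dual UNION ALL
        SELECT 'over 800 GB',  340 FROM dual);

-- Rows that would currently match the WARNING or CRITICAL values in EM.
SELECT tablespace_name, error_message
  FROM TABLE(CAST(calc_tbs_free_mb AS tbs_array))
 WHERE error_message LIKE 'CRITICAL%'
    OR error_message LIKE 'WARNING%';
```

So a tablespace in the smallest band goes CRITICAL at 3840 MB free or less, while one in the largest band does so at 21760 MB, which is the point of not using a single percentage for every tablespace. For UNIFORM tablespaces the multiplier is the extent size in MB, with a floor of 64.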

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Executing sql on all Exadata nodes. 22 September 2011

Posted by David Alejo Marcos in Exadata, SQL - PL/SQL. Tags: Exadata, SQL - PL/SQL


From time to time, I have to run scripts or single commands on all nodes for Exadata. This can take some time.

The problem:

We have a request from our developers to flush the shared pool on all nodes on our UAT Exadata. This is due to a bug we are still experiencing.

The solution:

This is a typical request for my team, where we have to run something on all our nodes. Flushing the shared pool can be one of them.

Connecting and executing the same command 8 times, if you have a full rack, can be time-consuming and it is, probably, not the most exciting task to do.

For this reason I came up with the following script. It is something I wrote to help me, so please test it before using it in production.

#!/bin/ksh
###############################
##
## Script to execute sql commands on all Exadata nodes
##
## Owner: David Alejo-Marcos
##
## usage: Exadata_sql_exec.ksh
## i.e.: Exadata_sql_exec.ksh MYTESTDB flush_shared_pool.sql
##
## Example of test.sql:
##
## oracle@hostname1 (MYTESTDB1)$ cat test.sql
## select sysdate from dual;
## select 'this is my test' from dual;
##
## alter system switch logfile;
##
###############################

NO_OF_ARGS=$#
typeset -u DB_SRC=$1
typeset -l DB_SCRIPT1=$2
typeset -l DB_SCRIPT=$2_tmp

if [[ "$NO_OF_ARGS" = "2" ]]; then
  echo "Parameters passed: OK"
else
  echo "usage: Exadata_sql_exec.ksh"
  echo "i.e.: Exadata_sql_exec.ksh MYTESTDB flush_shared_pool.sql"
  exit 1
fi

# Build the wrapper script: sqlplus reads its commands from a here-document
# that ends at the EOF line written below.
echo "sqlplus -s '/as sysdba' <<EOF" >/tmp/${DB_SCRIPT}
echo "set linesize 500" >>/tmp/${DB_SCRIPT}
echo "set serveroutput on" >>/tmp/${DB_SCRIPT}
echo "select host_name, instance_name from v\\\$instance;" >>/tmp/${DB_SCRIPT}
echo "@/tmp/${DB_SCRIPT1}" >>/tmp/${DB_SCRIPT}
echo "exit;" >>/tmp/${DB_SCRIPT}
echo "EOF" >>/tmp/${DB_SCRIPT}

# Copy both scripts to all Exadata DB Servers.
dcli -l oracle -g ~/dbs_group -f /tmp/${DB_SCRIPT} -d /tmp
dcli -l oracle -g ~/dbs_group -f ${DB_SCRIPT1} -d /tmp

# Execute command on all Exadata DB
dcli -l oracle -g ~/dbs_group " (chmod 700 /tmp/${DB_SCRIPT} ; /tmp/${DB_SCRIPT} ${DB_SRC} ; rm /tmp/${DB_SCRIPT} ; rm /tmp/${DB_SCRIPT1})"

The sql file to be executed can be anything you would normally run from sqlplus.

Please bear in mind that this script will be executed as many times as you have nodes on your Exadata, so if you create a table or perform inserts, it will be done 8 times…
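The post names flush_shared_pool.sql in the usage example but never shows it. A plausible version is sketched below; the contents are my assumption. It is a good fit for this script because the statement acts on the instance it is run against, so repeating it on every node is exactly what is wanted.

```sql
-- flush_shared_pool.sql (contents assumed; the post only names the file)
ALTER SYSTEM FLUSH SHARED_POOL;
```

The wrapper already prints host_name and instance_name before running the file, so the output shows which instance each "System altered." belongs to.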

Below is an output from a very simple test:

test.sql:

oracle@hostname1 (MYTESTDB1)$ cat test.sql
select sysdate from dual;
select 'this is my test' "ramdom_text" from dual;
alter system switch logfile;

Output:

oracle@hostname1 (MYTESTDB1)$ ./Exadata_sql_exec.ksh MYTESTDB test.sql
Parameters passed: OK
hostname1:
hostname1: Connect to instance MYTESTDB1 on hostname1
hostname1: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname1:
hostname1:
hostname1: HOST_NAME INSTANCE_NAME
hostname1: --------- ----------------
hostname1: hostname1 MYTESTDB1
hostname1:
hostname1:
hostname1: SYSDATE
hostname1: ---------
hostname1: 22-SEP-11
hostname1:
hostname1:
hostname1: ramdom_text
hostname1: ---------------
hostname1: this is my test
hostname1:
hostname1:
hostname1: System altered.
hostname1:
hostname2:
hostname2: Connect to instance MYTESTDB2 on hostname2
hostname2: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname2:
hostname2:
hostname2: HOST_NAME INSTANCE_NAME
hostname2: --------- ----------------
hostname2: hostname2 MYTESTDB2
hostname2:
hostname2:
hostname2: SYSDATE
hostname2: ---------
hostname2: 22-SEP-11
hostname2:
hostname2:
hostname2: ramdom_text
hostname2: ---------------
hostname2: this is my test
hostname2:
hostname2:
hostname2: System altered.
hostname2:
hostname3:
hostname3: Connect to instance MYTESTDB3 on hostname3
hostname3: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname3:
hostname3:
hostname3: HOST_NAME INSTANCE_NAME
hostname3: --------- ----------------
hostname3: hostname3 MYTESTDB3
hostname3:
hostname3:
hostname3: SYSDATE
hostname3: ---------
hostname3: 22-SEP-11
hostname3:
hostname3:
hostname3: ramdom_text
hostname3: ---------------
hostname3: this is my test
hostname3:
hostname3:
hostname3: System altered.
hostname3:
hostname4:
hostname4: Connect to instance MYTESTDB4 on hostname4
hostname4: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname4:
hostname4:
hostname4: HOST_NAME INSTANCE_NAME
hostname4: --------- ----------------
hostname4: hostname4 MYTESTDB4
hostname4:
hostname4:
hostname4: SYSDATE
hostname4: ---------
hostname4: 22-SEP-11
hostname4:
hostname4:
hostname4: ramdom_text
hostname4: ---------------
hostname4: this is my test
hostname4:
hostname4:
hostname4: System altered.
hostname4:
hostname5:
hostname5: Connect to instance MYTESTDB5 on hostname5
hostname5: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname5:
hostname5:
hostname5: HOST_NAME INSTANCE_NAME
hostname5: --------- ----------------
hostname5: hostname5 MYTESTDB5
hostname5:
hostname5:
hostname5: SYSDATE
hostname5: ---------
hostname5: 22-SEP-11
hostname5:
hostname5:
hostname5: ramdom_text
hostname5: ---------------
hostname5: this is my test
hostname5:
hostname5:
hostname5: System altered.
hostname5:
hostname6:
hostname6: Connect to instance MYTESTDB6 on hostname6
hostname6: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname6:
hostname6:
hostname6: HOST_NAME INSTANCE_NAME
hostname6: --------- ----------------
hostname6: hostname6 MYTESTDB6
hostname6:
hostname6:
hostname6: SYSDATE
hostname6: ---------
hostname6: 22-SEP-11
hostname6:
hostname6:
hostname6: ramdom_text
hostname6: ---------------
hostname6: this is my test
hostname6:
hostname6:
hostname6: System altered.
hostname6:
hostname7:
hostname7: Connect to instance MYTESTDB7 on hostname7
hostname7: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname7:
hostname7:
hostname7: HOST_NAME INSTANCE_NAME
hostname7: --------- ----------------
hostname7: hostname7 MYTESTDB7
hostname7:
hostname7:
hostname7: SYSDATE
hostname7: ---------
hostname7: 22-SEP-11
hostname7:
hostname7:
hostname7: ramdom_text
hostname7: ---------------
hostname7: this is my test
hostname7:
hostname7:
hostname7: System altered.
hostname7:
hostname8:
hostname8: Connect to instance MYTESTDB8 on hostname8
hostname8: ORACLE_HOME is set to /apps/oracle/server/product/11.2/dbhome_1
hostname8:
hostname8:
hostname8: HOST_NAME INSTANCE_NAME
hostname8: --------- ----------------
hostname8: hostname8 MYTESTDB8
hostname8:
hostname8:
hostname8: SYSDATE
hostname8: ---------
hostname8: 22-SEP-11
hostname8:
hostname8:
hostname8: ramdom_text
hostname8: ---------------
hostname8: this is my test
hostname8:
hostname8:
hostname8: System altered.
hostname8:

I have been told many times not to use quotes on the following command:

sqlplus '/as sysdba'

but:

1.- I am too used to it

2.- you only save 2 keystrokes at the end of the day 🙂

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

How to list files on a directory from Oracle Database. 13 September 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL. Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL


A couple of days ago I had an interesting request: “How can I see the contents of nfs_dir?”

The problem:

We were using DBFS to store our exports. This was the perfect solution as the business could “see” the files in the destination folder, but it did not meet our requirements performance-wise on our Exadata.

We decided to mount NFS and performance did improve, but we had a different problem. NFS is mounted on the database server and the business does not have access to it, for security reasons and segregation of duties.

Since then, the export jobs have run, but the business could not “see” what files were created, so the question was asked.

The solution:

After some research I came across the following packaged procedure:

SYS.DBMS_BACKUP_RESTORE.searchFiles

I had to play with it a little, and I ended up with the following script:

1.- Create an Oracle type

create type file_array as table of varchar2(100)
/

2.- Create the function as SYS:

CREATE OR REPLACE FUNCTION LIST_FILES (lp_string IN VARCHAR2 default null)
RETURN file_array pipelined
AS
  lv_pattern VARCHAR2(1024);
  lv_ns      VARCHAR2(1024);
BEGIN
  SELECT directory_path
    INTO lv_pattern
    FROM dba_directories
   WHERE directory_name = 'NFS_DIR';

  SYS.DBMS_BACKUP_RESTORE.SEARCHFILES(lv_pattern, lv_ns);

  FOR file_list IN (SELECT FNAME_KRBMSFT AS file_name
                      FROM X$KRBMSFT
                     WHERE FNAME_KRBMSFT LIKE '%'|| NVL(lp_string, FNAME_KRBMSFT)||'%' )
  LOOP
    PIPE ROW(file_list.file_name);
  END LOOP;
END;
/

3.- Grant necessary permissions:

grant execute on LIST_FILES to public;
create public synonym list_files for sys.LIST_FILES;

4.- Test without WHERE clause:

TESTDB> select * from table(list_files);

COLUMN_VALUE
----------------------------------------------------------------------------------------------------
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB.log
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp
/nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_09092011.log
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old
/nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_12092011.log

8 rows selected.

Elapsed: 00:00:00.10

5.- Test with WHERE clause:

TESTDB> select * from table(list_files) where column_value like '%dmp%';

COLUMN_VALUE
----------------------------------------------------------------------------------------------------
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp
/nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old

Elapsed: 00:00:00.12
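The function also accepts an optional lp_string argument, which the tests above do not exercise. The sketch below shows two variations I would expect to work with the function as written: filtering through the argument instead of a WHERE clause, and stripping the directory part with REGEXP_SUBSTR so users only see file names. The column alias is my own.

```sql
-- Let the function do the filtering through its optional lp_string argument.
-- The match is a LIKE '%.dmp%', so names such as ..._piece1.dmp_old are included too.
SELECT column_value AS file_name
  FROM TABLE(list_files('.dmp'))
 ORDER BY column_value;

-- Show only the file name, without the directory part.
SELECT REGEXP_SUBSTR(column_value, '[^/]+$') AS file_name
  FROM TABLE(list_files)
 ORDER BY 1;
```

Because the argument ends up inside a LIKE pattern, the characters % and _ in it act as wildcards.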

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Sysdate returns “wrong” time (time with timezone). 25 February 2011

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL. Tags: Oracle 11.2, SQL, SQL - PL/SQL


We are all used to querying sysdate to get the date and time of the database; what we are not so used to is getting different values (depending on your timezone) from Oracle.

Sysdate, per Oracle documentation will do the following:

“SYSDATE returns the current date and time set for the operating system on which the database server resides. The data type of the returned value is DATE, and the format returned depends on the value of the NLS_DATE_FORMAT initialization parameter. The function requires no arguments. In distributed SQL statements, this function returns the date and time set for the operating system of your local database.”

Most people only read the first line, where it says it will return the date and time set for the operating system on which the database server resides; but right at the end, there is something we are all very familiar with: distributed SQL statements.

It is very hard to find a database that is not accessed from timezones other than that of the server hosting it, either because hosting is cheaper or because we have business in different countries.

The Problem:

If I execute a simple query to return the date and time from our US server:

SQL> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss';

Session altered.

SQL> select sysdate from dual;

SYSDATE
-------------------
24/02/2011 14:12:18

SQL> exit
Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production
With the Partitioning and Automatic Storage Management options

I receive the date and time in UTC:

[oracle@sssssssss ~]$ date
Thu Feb 24 14:14:18 GMT 2011

From sqlplus in the UK:

C:\Documents and Settings\david.marcos>sqlplus daviddba@dddd

SQL*Plus: Release 10.2.0.4.0 - Production on Thu Feb 24 14:14:30 2011

Copyright (c) 1982, 2007, Oracle. All Rights Reserved.

Enter password:

Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production
With the Partitioning and Automatic Storage Management options

DAVIDDBA@dddd>
DAVIDDBA@dddd> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss';

Session altered.

Elapsed: 00:00:00.14
DAVIDDBA@dddd> select sysdate from dual;

SYSDATE
-------------------
24/02/2011 09:14:55

Elapsed: 00:00:00.20
DAVIDDBA@dddd>

It returns the date and time in NYC.

The Solution:

Quite simple, we have a file on the server called /etc/sysconfig/clock. This file configures the system clock to Universal or Local time.

[oracle@ssss ~]$ cat /etc/sysconfig/clock
ZONE="America/New_York"
UTC=true
ARC=false

Zone should be:

[oracle@sssssss ~]$ cat /etc/sysconfig/clock
ZONE="UTC"
UTC=true
ARC=false

so that clients also use UTC. After amending the value we were back in business.

There is another quick way to fix the problem, provided you do not have local_listener configured on your system, as you can manipulate the timezone for your sessions using your listeners. Let me explain: if you connect from the server, you will probably not use the listener, so sysdate should be the same as your server's date and time.

By starting the listener with a different timezone (for example, using the TZ OS environment variable) you will be able to have two different sysdate results (one if you select from the server and a different one if you connect using the listener).

So, to fix your problem you will need to do the following:

1.- Configure TZ variable on your server (i.e. export TZ="UTC")

2.- Stop listener

3.- Start listener

Done.

If you have local_listener, you will need to stop/start your database with the right TZ environment variable to fix the issue.
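To confirm the fix, it helps to run the same query once from a local session on the server and once through the listener and compare the results. The query below is a sketch with aliases of my own choosing; SYSTIMESTAMP is included because it also shows the UTC offset the server process is working with, while CURRENT_DATE and SESSIONTIMEZONE reflect the session's own time zone setting.

```sql
-- Run once from a local session on the server and once through the listener.
SELECT TO_CHAR(SYSDATE, 'DD/MM/YYYY HH24:MI:SS')              AS sysdate_value,
       TO_CHAR(SYSTIMESTAMP, 'DD/MM/YYYY HH24:MI:SS TZH:TZM') AS systimestamp_value,
       TO_CHAR(CURRENT_DATE, 'DD/MM/YYYY HH24:MI:SS')         AS current_date_value,
       SESSIONTIMEZONE                                        AS session_tz,
       DBTIMEZONE                                             AS db_tz
  FROM dual;
```

If sysdate_value and the offset in systimestamp_value differ between the two connections, the listener (or the database processes) is still running with a different TZ from the one you expect.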

As always, questions are welcome.

ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [xxx] after upgrade to 11.2.0.2. 18 January 2011

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL. Tags: Oracle 11.2, SQL - PL/SQL


First of all, I would like to say thanks to Coskan (Josh) Gundogar for his invaluable assistance with resolving this problem. You can find more about Coskan here.

On Saturday January 15th we had to upgrade one of our busiest and biggest databases to 11.2.0.2 to fix several critical bugs on 11.2.0.1 that affected our systems.

After upgrading the database and running initial tests, sign-off was given for the upgrade.

On Sunday January 16th (only one day later) I had several emails and a couple of phone calls asking me to check the database, as we were getting ORA-00600 errors.

Please, be aware that ORA-00600 should be raised with Oracle. The steps below worked for us, but you will need to confirm with Oracle, your business and your Developers.

The problem:

The command generating the error was a very simple one:

ERROR at line 1:
ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [1002287], [], [], [], [], [], [], [], [], [], []
ORA-06512: at "xxx.DELETE_SOURCE", line 54
ORA-06512: at line 1

The command at line 54 is:

DELETE FROM xxx.SOURCE WHERE SOURCEID = v_source;

I could find very little information on Oracle Support regarding this error.

The solution:

First thing we did was to raise a Sev. 1 with Oracle. Their suggestion was to restore the database from backup, meaning we had to downgrade primary database plus 2 standby databases.

After successfully reproducing the error I tried a different SQL, to find out if it was something wrong with the table.

I did try to execute select, insert and update, and all three worked fine:

SQL> select * FROM xxx.SOURCE WHERE SOURCEID = 49;

SOURCEID   SOURCENAME  ABBREV
---------- ----------- ----------------
49         xxxxx       yyyyy

SQL> select count(*) from xxx.source where sourceid = 90000;

COUNT(*)
----------
0

SQL> delete from xxx.source where sourceid = 90000;

0 rows deleted.

SQL> rollback;

SQL> update xxx.source set sourcename = 'pr newswire' where sourceid = 49;

1 row updated.

SQL> rollback;

Rollback complete.

But the delete for an existing row failed:

SQL> DELETE FROM xxx.SOURCE WHERE SOURCEID = 49;
DELETE FROM xxx.SOURCE WHERE SOURCEID = 49
*
ERROR at line 1:
ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [1002300], [], [], [], [], [], [], [], [], [], []

After some trial and error and several not very successful attempts, we ran a trace on the SQL (something to remember for next time: it should be one of the first things to do, and the trace is well worth the time spent reading it).

The trace file showed the following:

=====================
PARSING IN CURSOR #47580064284056 len=283 dep=2 uid=0 oct=3 lid=0 tim=1295189617113218 hv=1612870922 ad='a06c7d880' sqlid='gnkrt49h24x8a'
select pctfree_stg, pctused_stg, size_stg,initial_stg, next_stg, minext_stg, maxext_stg, maxsiz_stg, lobret_stg,mintim_stg, pctinc_stg, initra_stg, maxtra_stg, optimal_stg, maxins_stg,frlins_stg, flags_stg, bfp_stg, enc_stg, cmpflag_stg, cmplvl_stg from deferred_stg$ where obj# =:1
END OF STMT
PARSE #47580064284056:c=1000,e=602,p=0,cr=0,cu=0,mis=1,r=0,dep=2,og=4,plh=0,tim=1295189617113216
BINDS #47580064284056:
 Bind#0
  oacdty=02 mxl=22(22) mxlc=00 mal=00 scl=00 pre=00
  oacflg=00 fl2=0001 frm=00 csi=00 siz=24 off=0
  kxsbbbfp=2b46190f4a70 bln=22 avl=04 flg=05
  value=1002300
EXEC #47580064284056:c=1000,e=1571,p=0,cr=0,cu=0,mis=1,r=0,dep=2,og=4,plh=866645418,tim=1295189617114927
FETCH #47580064284056:c=0,e=34,p=0,cr=1,cu=0,mis=0,r=0,dep=2,og=4,plh=866645418,tim=1295189617114999
STAT #47580064284056 id=1 cnt=0 pid=0 pos=1 obj=1062363 op='TABLE ACCESS BY INDEX ROWID DEFERRED_STG$ (cr=1 pr=0 pw=0 time=49 us cost=1 size=31 card=1)'
STAT #47580064284056 id=2 cnt=0 pid=1 pos=1 obj=1062364 op='INDEX UNIQUE SCAN I_DEFERRED_STG1 (cr=1 pr=0 pw=0 time=35 us cost=0 size=0 card=1)'
CLOSE #47580064284056:c=0,e=6,dep=2,type=3,tim=1295189617115210
*** 2011-01-16 14:53:37.357
DDE: Problem Key 'ORA 600 [kkpo_rcinfo_defstg:objnotfound]' was flood controlled (0x6) (incident: 812751)
ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [1002300], [], [], [], [], [], [], [], [], [], []
=====================
PARSE ERROR#47580064285064:len=92 dep=1 uid=0 oct=3 lid=0 tim=1295189617357412 err=600
select /*+ all_rows */ count(1) from "xxx"."INSTRUMENT_INSTRUMENTTAG" where "SOURCEID" = :1
EXEC #47580064671128:c=4059383,e=4121486,p=5,cr=331742,cu=129,mis=0,r=0,dep=0,og=1,plh=3685465056,tim=1295189617357720
ERROR #14:err=600 tim=1295189617357745
STAT #47580064671128 id=1 cnt=0 pid=0 pos=1 obj=0 op='DELETE SOURCE (cr=0 pr=0 pw=0 time=17 us)'
STAT #47580064671128 id=2 cnt=1 pid=1 pos=1 obj=1063845 op='INDEX UNIQUE SCAN PK_SOURCE (cr=1 pr=0 pw=0 time=39 us cost=0 size=12 card=1)'
WAIT #47580064671128: nam='SQL*Net break/reset to client' ela= 20 driver id=1413697536 break?=1 p3=0 obj#=-1 tim=1295189617358676
WAIT #47580064671128: nam='SQL*Net break/reset to client' ela= 9860 driver id=1413697536 break?=0 p3=0 obj#=-1 tim=1295189617368572
WAIT #47580064671128: nam='SQL*Net message to client' ela= 4 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=1295189617368700
*** 2011-01-16 14:53:48.940
WAIT #47580064671128: nam='SQL*Net message from client' ela= 11571422 driver id=1413697536 #bytes=1 p3=0 obj#=-1 tim=1295189628940169
CLOSE #47580064285064:c=0,e=19,dep=1,type=0,tim=1295189628940357
CLOSE #47580064671128:c=0,e=96,dep=0,type=0,tim=1295189628940417
=====================

Note.- The above is just an extract; the trace file identified a few more tables with the same problem.
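The post does not say how the trace was enabled. The extract contains bind values and wait events, which is what a 10046 trace at level 12 produces, so one common way to get an equivalent trace for your own session is sketched below. The trace file identifier is a hypothetical name, and the statement is the failing delete from earlier in the post.

```sql
-- Tag the trace file so it is easy to find (the identifier is a hypothetical name).
ALTER SESSION SET tracefile_identifier = 'ora600_delete';

-- Level 12 = bind values plus wait events.
ALTER SESSION SET EVENTS '10046 trace name context forever, level 12';

DELETE FROM xxx.SOURCE WHERE SOURCEID = 49;
ROLLBACK;

ALTER SESSION SET EVENTS '10046 trace name context off';

-- Location of the trace file for this session (11g onwards).
SELECT value FROM v$diag_info WHERE name = 'Default Trace File';
```

The recursive statements (dep=1, dep=2) just before the error are the part to read first, as they show which dictionary lookup actually failed.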

The error was actually occurring on a different table. This happened because SOURCE is one of our key tables, with many other tables referencing it.

After performing a select on the failing table we had the following:

select * from xxx.instrumenttag
*
ERROR at line 1:
ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [1002298], [], [], [], [], [], [], [], [], [], []

After a conversation with the business, we confirmed those tables had been created on production a couple of weekends before the upgrade and were not being used.

As they only had referential integrity constraints and were not being used, we decided to recreate them using the DDL from QA.

Once all tables (40+) and associated indexes were created successfully, the code worked:

SQL> begin
  2 xxx.DELETE_SOURCE(49);
  3 end;
  4 /

PL/SQL procedure successfully completed.

SQL>

Thoughts:

1.- This problem was a tough one. ORA-00600 errors are never good, but this was, in my humble opinion, one of the hardest to troubleshoot on production without restoring and downgrading a primary and 2 standby databases.

2.- It is my belief that the second parameter on the error identifies the missing object (I cannot test it now):

ORA-00600: internal error code, arguments: [kkpo_rcinfo_defstg:objnotfound], [1002287], [], [], [], [], [], [], [], [], [], []

If my assumption is right, this would have helped while troubleshooting.

3.- Oracle specifically states that catupgrd.sql can be rerun (Note ID 1100828.1). I am not so sure…

catupgrd.sql is re-runnable and the bug states the errors can be ignored

4.- We did not have the same problem on QA as those empty objects were created after we did the upgrade. This proves how difficult testing can be.

5.- With 11.2 we have something new called “Deferred Segment Creation”. It is my belief that new empty objects without segments might have been the root cause of the error during the upgrade.

This is how Oracle describes the new feature:

“When you create a non-partitioned heap table, the table segment creation is deferred to the first row insert. This functionality is enabled by default with the initialization parameter DEFERRED_SEGMENT_CREATION set to TRUE.”

The default value for this parameter is TRUE.

The failing command was:

PARSING IN CURSOR #47580064284056 len=283 dep=2 uid=0 oct=3 lid=0 tim=1295189617113218 hv=1612870922 ad='a06c7d880' sqlid='gnkrt49h24x8a'
select pctfree_stg, pctused_stg, size_stg,initial_stg, next_stg, minext_stg, maxext_stg, maxsiz_stg, lobret_stg,mintim_stg, pctinc_stg, initra_stg, maxtra_stg, optimal_stg, maxins_stg,frlins_stg, flags_stg, bfp_stg, enc_stg, cmpflag_stg, cmplvl_stg from deferred_stg$ where obj# =:1
END OF STMT

After recreating the object, the same query worked:

SYS@xxx> select object_id from dba_objects where object_name = 'INSTRUMENT_INSTRUMENTTAG';

OBJECT_ID
----------
1063905

Elapsed: 00:00:00.00

SYS@MFE> select pctfree_stg, pctused_stg, size_stg,initial_stg, next_stg, minext_stg, maxext_stg, maxsiz_stg, lobret_stg,mintim_stg, pctinc_stg, initra_stg, maxtra_stg, optimal_stg, maxins_stg,frlins_stg, flags_stg, bfp_stg, enc_stg, cmpflag_stg, cmplvl_stg
2 from sys.deferred_stg$
3 where obj# =1063905;

PCTFREE_STG PCTUSED_STG SIZE_STG INITIAL_STG NEXT_STG MINEXT_STG MAXEXT_STG MAXSIZ_STG LOBRET_STG MINTIM_STG PCTINC_STG INITRA_STG MAXTRA_STG OPTIMAL_STG MAXINS_STG FRLINS_STG
----------- ----------- ---------- ----------- ---------- ---------- ---------- ---------- ---------- ---------- ---------- ---------- ---------- ----------- ---------- ----------
FLAGS_STG BFP_STG ENC_STG CMPFLAG_STG CMPLVL_STG
---------- ---------- ---------- ----------- ----------
10 0 0 1 255 8 0 0 0

Elapsed: 00:00:00.02

SYS@xxx>

I am not entirely convinced catupgrd.sql will perform the link between segments (tables, indexes and LOBs) and the associated entry in sys.deferred_stg$. Something to test on QA.

6.- Query to find tables without segments:

6.1.- Coskan’s version used while troubleshooting:

SELECT 'select count(*) from ' || owner || '.' || table_name || ' ;' FROM dba_tables WHERE segment_created = 'NO';

6.2.- My version to verify objects (valid for INDEX PARTITION, TABLE PARTITION, TABLE, INDEX although the version below only checks tables):

SELECT owner, object_name, object_type FROM sys.deferred_stg$, dba_objects WHERE obj# = object_id AND object_type = 'TABLE';
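The queries in point 6 can be widened a little. What follows is only a sketch, not something we ran during the incident: it assumes the same join between sys.deferred_stg$ and dba_objects used in 6.2, a session with access to the SYS-owned table, and (for the last statement) that my unverified assumption from point 2 about the second ORA-00600 argument holds.

```sql
-- Sketch 1: same join as 6.2, but covering all four object types mentioned there.
SELECT o.owner, o.object_name, o.subobject_name, o.object_type
  FROM sys.deferred_stg$ d, dba_objects o
 WHERE d.obj# = o.object_id
   AND o.object_type IN ('TABLE', 'TABLE PARTITION', 'INDEX', 'INDEX PARTITION')
 ORDER BY o.owner, o.object_type, o.object_name;

-- Sketch 2: tables flagged as having no segment that also have no row in
-- sys.deferred_stg$, i.e. candidates for the "objnotfound" situation above.
SELECT t.owner, t.table_name
  FROM dba_tables t, dba_objects o
 WHERE o.owner = t.owner
   AND o.object_name = t.table_name
   AND o.object_type = 'TABLE'
   AND t.segment_created = 'NO'
   AND NOT EXISTS (SELECT 1
                     FROM sys.deferred_stg$ d
                    WHERE d.obj# = o.object_id);

-- Sketch 3: ASSUMPTION (see point 2, not verified): the second argument of the
-- ORA-00600 is an object id, so it could be looked up directly.
SELECT owner, object_name, object_type
  FROM dba_objects
 WHERE object_id = 1002287;
```

If the second query returns rows on a freshly upgraded database, those would be the first objects I would test with a simple select.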

As always, comments are welcome.

Monitoring tablespaces with dynamic thresholds 13 December 2010

Posted by David Alejo Marcos in SQL - PL/SQL. Tags: SQL, SQL - PL/SQL


One of the problems we face while monitoring tablespaces is the difference in size. Some of our tablespaces are 10-50 GB while others are close to 1 TB.

10% free space on a 10GB tablespace is not much on a fast growing system, while 10% on a 1TB tablespace is enough to keep us going for 1 month.

The problem:

We need a query that runs really quickly, is not heavy on the system and is clever enough to tell us whether 20% free space is critical, a warning or something that can wait.

The solution:

I came up with the following query:

SELECT (CASE
           WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'
           WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'
           ELSE 'N/A'
        END)
          alert,
       tablespace_name,
       space_used_mb,
       extend_bytes/1024/1024 extend_mb,
       free_space/1024/1024 free_mb,
       pct_free,
       free_extents,
       free_space_mb,
       max_size_mb,
       maxextent
  FROM (SELECT c.tablespace_name,
               NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,
               NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,
               (CASE
                   WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60
                   WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120
                   WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200
                   WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300
                   ELSE 340
                END) free_extents,
               a.extend_bytes, b.free_space,
               ROUND (maxbytes / 1024 / 1024, 2) max_size_mb,
               nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,
               c.allocation_type,
               GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,
               -- (SELECT NVL(MAX (bytes) / 1024 / 1024, 64) maxextent
               -- FROM dba_extents
               -- WHERE tablespace_name = a.tablespace_name) maxextent
               64 maxextent
          FROM ( SELECT tablespace_name,
                        SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,
                        SUM (BYTES) AS BYTES,
                        SUM (maxbytes) maxbytes
                   FROM DBA_DATA_FILES
                  GROUP BY tablespace_name) A,
               ( SELECT tablespace_name,
                        SUM (BYTES) AS free_space,
                        MAX (BYTES) largest
                   FROM DBA_FREE_SPACE
                  GROUP BY tablespace_name) b,
               dba_tablespaces c
         WHERE c.contents not in ('UNDO','TEMPORARY')
           AND b.tablespace_name(+) = c.tablespace_name
           AND a.tablespace_name = c.tablespace_name
           AND NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) < 20
       ) A
 WHERE free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20;

and this is how it works:

1.- The filter on c.contents. We do not raise alerts for Undo or Temp tablespaces.

2.- The outer join between dba_tablespaces and dba_free_space, b.tablespace_name(+). It is necessary, or you will not receive alerts once a tablespace has no free space left at all.

3.- The last condition of the inner WHERE clause (pct_free < 20). Only evaluate tablespaces with less than 20% free space.

4.- The hard-coded "64 maxextent". I started by monitoring the largest extent for the tablespace being monitored (query below), but we hit some bugs with dba_extents lacking statistics, which generated a bad plan and made the query run for up to 2 minutes. If you are using tablespaces with Extent Allocation = Automatic, the biggest extent will be 64 MB, so I decided to use a hard-coded value instead.

(SELECT NVL(MAX (bytes) / 1024 / 1024, 64) maxextent

FROM dba_extents

WHERE tablespace_name = a.tablespace_name) maxextent

5.- The inner CASE that produces free_extents. This is where the logic happens. Depending on the size of the tablespace (including max autoextend), we define thresholds under free_extents. These values might need to be adjusted to your systems.

6.- The outer CASE that produces alert. Depending on the results of point 5, we define alerts as N/A (Not Applicable), Warning or Critical. It is worth mentioning the distinction between Extent Allocation Uniform and Automatic. I assume a max extent of 64 MB for both to have consistency.
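To make the thresholds from points 5 and 6 more tangible, here is a small worked example. It is only a sketch: the three sizes (10 GB, 50 GB and 1024 GB) are made-up inputs, and I assume the 64 MB extent used in the main query. It repeats the same CASE ladder against dual and derives the free space, in MB, below which a tablespace would be flagged.

```sql
-- Sketch: thresholds produced by the CASE ladder for three hypothetical
-- tablespace sizes, assuming the hard-coded 64 MB extent of the main query.
SELECT max_size_gb,
       free_extents,
       64 * free_extents      AS critical_mb,  -- CRITICAL at or below this
       64 * free_extents + 20 AS warning_mb    -- WARNING at or below this
  FROM (SELECT max_size_gb,
               CASE
                  WHEN max_size_gb <= 30  THEN 60
                  WHEN max_size_gb <= 100 THEN 120
                  WHEN max_size_gb <= 300 THEN 200
                  WHEN max_size_gb <= 800 THEN 300
                  ELSE 340
               END AS free_extents
          FROM (SELECT 10 AS max_size_gb FROM dual
                UNION ALL
                SELECT 50 FROM dual
                UNION ALL
                SELECT 1024 FROM dual))
 ORDER BY max_size_gb;

-- Result:
--   10 GB   ->  60 extents -> critical  3840 MB, warning  3860 MB
--   50 GB   -> 120 extents -> critical  7680 MB, warning  7700 MB
--   1024 GB -> 340 extents -> critical 21760 MB, warning 21780 MB
```

Keep in mind the main query only looks at tablespaces with less than 20% free space, so these absolute thresholds are applied on top of that filter.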

Please share your thoughts about this query; there is always more than one way to achieve the same goal using SQL.

As always, comments are welcome.

ORA-01548: active rollback segment ‘_SYSSMU1_xxxx’ found, terminate dropping tablespace while deleting UNDOTBS 9 November 2010

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL. Tags: Oracle 11.2, SQL - PL/SQL


Last week I had to solve a strange error on one of our production databases. Luckily for us, it was the RMAN catalog.

I say luckily for us because this database is internal to us. The same problem on a production database serving our clients could have been disastrous, as we have tight deadlines.

The problem:

The UNDOTBS for our RMAN Catalog database was dropped. Any attempt to switch to a newly created UNDOTBS or to remove the old UNDOTBS failed with the following error:

SQL> drop tablespace UNDOTBS01 including contents;

drop tablespace UNDOTBS01 including contents

*

ERROR at line 1:

ORA-01548: active rollback segment '_SYSSMU1_1255349037$' found, terminate dropping tablespace

The solution:

Our RMAN Catalog has a standby database for protection. The first thing I did was to stop the standby database from applying more archived logs, as UNDOTBS01 still existed there. This would have allowed me to perform a failover if necessary.
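For reference, stopping the apply on a physical standby can be sketched as below. I am assuming a standby managed from SQL*Plus (not through the Data Guard broker); run it on the standby, not on the primary.

```sql
-- Sketch, to be run on the physical standby (assumes no Data Guard broker):
-- stop managed recovery so no further redo is applied.
ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

-- Check that the managed recovery process (MRP) is no longer running.
SELECT process, status, sequence#
  FROM v$managed_standby
 WHERE process LIKE 'MRP%';
```

With the apply stopped, the standby keeps its copy of the undo tablespace untouched while we work on the primary.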

As I mentioned before, I created a new tablespace, UNDOTBS3, to check whether switching the undo_tablespace parameter would help. The switch itself worked, but the drop still failed:

SQL> ALTER SYSTEM SET UNDO_TABLESPACE = undotbs3;

System altered.

SQL> drop tablespace UNDOTBS01 including contents;

drop tablespace UNDOTBS01 including contents

*

ERROR at line 1:

ORA-01548: active rollback segment '_SYSSMU1_1255349037$' found, terminate dropping tablespace

I tried to drop the rollback segment, but that did not work either:

SQL> drop rollback segment '_SYSSMU1_1255349037$';

drop rollback segment '_SYSSMU1_1255349037$'

*

ERROR at line 1:

ORA-02175: invalid rollback segment name

In fact, it did not even exist:

SQL> select * from v$rollname ;

USN NAME

---------- ------------------------------

0 SYSTEM

18 _SYSSMU18_650722988$

19 _SYSSMU19_176786070$

20 _SYSSMU20_2971238361$

21 _SYSSMU21_1337078425$

22 _SYSSMU22_378998127$

23 _SYSSMU23_2828850578$

24 _SYSSMU24_3342375805$

25 _SYSSMU25_1071547613$

26 _SYSSMU26_357778769$

27 _SYSSMU27_3256159541$
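V$ROLLNAME only lists online rollback segments, so it is worth looking at DBA_ROLLBACK_SEGS as well. The sketch below is something I did not capture at the time, and I have not verified that the segments of a damaged undo tablespace always show up there; the tablespace name is the one from this post.

```sql
-- Sketch: list every undo segment the dictionary still knows about for the
-- old tablespace, together with its status (ONLINE, OFFLINE, NEEDS RECOVERY...).
SELECT segment_id, segment_name, tablespace_name, status
  FROM dba_rollback_segs
 WHERE tablespace_name = 'UNDOTBS01'
 ORDER BY segment_id;

-- Sketch: build a comma-separated list of those names (LISTAGG needs 11.2),
-- which could be pasted into a parameter file if needed.
SELECT LISTAGG(segment_name, ',') WITHIN GROUP (ORDER BY segment_id) AS segment_list
  FROM dba_rollback_segs
 WHERE tablespace_name = 'UNDOTBS01';
```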

So, how to fix it?

I executed the following steps:

1.- Create a copy of the spfile:

create pfile from spfile;

This way I could rename the spfile and work with the init.ora file until the problem was fixed.

2.- Stop the database:

SQL> shutdown immediate

Database closed.

Database dismounted.

ORACLE instance shut down.

SQL> exit

3.- Amended the init.ora file with the following:

*.undo_management='MANUAL'

*.undo_retention=1800

*.undo_tablespace='UNDOTBS3'

*._offline_rollback_segments=_SYSSMU1_1255349037$

4.- Start the database up and try to drop UNDOTBS01:

SQL> drop tablespace undotbs01 including contents and datafiles;

drop tablespace undotbs01 including contents and datafiles

*

ERROR at line 1:

ORA-01548: active rollback segment '_SYSSMU3_1255349037$' found, terminate dropping tablespace

As you can see, the rollback segment is different; it changed from _SYSSMU1_1255349037$ to _SYSSMU3_1255349037$ (the difference is the digit after SYSSMU).

After several tries, I realised I had to add as many entries to _offline_rollback_segments as I had rows in v$rollname where NAME LIKE ‘%SYSSMU%’ (10 in my case).

So I stopped the database again and made the necessary changes:

5.- Edit the init.ora file and add as many entries as v$rollname has rows where name like ‘%SYSSMU%’:

*._offline_rollback_segments=(_SYSSMU1_1255349037$,_SYSSMU2_1255349037$,

_SYSSMU3_1255349037$,_SYSSMU4_1255349037$,

_SYSSMU5_1255349037$,_SYSSMU6_1255349037$,

_SYSSMU7_1255349037$,_SYSSMU8_1255349037$,

_SYSSMU9_1255349037$,_SYSSMU10_1255349037$)

6.- Start up the database and drop UNDOTBS01

SQL> drop tablespace undotbs01 including contents and datafiles;

Tablespace dropped.

7.- and create UNDOTBS01:

SQL> CREATE UNDO TABLESPACE "UNDOTBS01" DATAFILE '/u01/oradata/CATALOG/undo01.dbf' SIZE 1024M;

Tablespace created.

8.- Shut down the database, rename (or delete) the init.ora file and start up the database using the spfile.

9.- Set UNDOTBS01 as the UNDO tablespace:

SQL> alter system set undo_tablespace=undotbs01 scope=both;

10.- Check parameters:

SQL> show parameter undo

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

undo_management string AUTO

undo_retention integer 1800

undo_tablespace string UNDOTBS01

11.- and drop UNDOTBS3:

SQL> drop tablespace undotbs3 including contents and datafiles;

Tablespace dropped.

As soon as the database was running as normal I executed a backup to ensure all was ok.

After the verification, I enabled the recovery again to keep the standby database in sync with the primary.
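Re-enabling the apply can be sketched as follows, again assuming a physical standby managed from SQL*Plus rather than through the Data Guard broker:

```sql
-- Sketch, to be run on the physical standby: restart managed recovery in the
-- background so the session is released once the apply has started.
ALTER DATABASE RECOVER MANAGED STANDBY DATABASE DISCONNECT FROM SESSION;
```

The standby then applies the redo generated while UNDOTBS01 was dropped and recreated on the primary.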

Note.- It is not necessary to add the full name _SYSSMU1_1255349037$ to _offline_rollback_segments. Only the first part (_SYSSMU1) would have been enough.

_offline_rollback_segments=(_SYSSMU1_1255349037$,_SYSSMU2_1255349037$) is the same as

_offline_rollback_segments=(_SYSSMU1,_SYSSMU2)

As always, comments are welcome.

How to retrieve the hash value for a password in Oracle 11g 20 October 2010

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL. Tags: Oracle 11.2


As we all know, Oracle has decided not to show the hash value for the password in the dba_users view.

The problem:

Saving your passwords to a file as below does not work anymore:

SELECT 'ALTER USER ' || username || ' identified by values '''|| password || ''';' FROM dba_users;

This is necessary, in our case, for releases, DEV/QA refreshes, etc…

The solution:

I first wrote the following query:

MYUSER@mydb> SELECT substr (trim(DBMS_METADATA.get_ddl ('USER','MYUSER')),

2 REGEXP_INSTR(trim(DBMS_METADATA.get_ddl ('USER','MYUSER')),'''',1,1,0,'m'), 20

3 ) PASSWD

4 FROM DUAL;

PASSWD

--------------------------------------------------------------------------------

'2YYYYY4DXXXXXX'

Note.- The hash value has been modified for security reasons.

I was working under the assumption that Oracle will not change the algorithm any time soon (currently the length is 16 characters), but I also found it “dangerous” to make this assumption.

So my next query is:

select REGEXP_SUBSTR(DBMS_METADATA.get_ddl ('USER','MYUSER'), '''[^'']+''') PASSWD from dual;

which I find more elegant.

MYUSER@MYDB> select REGEXP_SUBSTR(DBMS_METADATA.get_ddl ('USER','MYUSER'), '''[^'']+''') PASSWD from dual;

PASSWD

--------------------------------------------------------------------------------

'XXXXX4YYYYYZZZZZZ'
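To see what the regular expression does without touching a real account, it can be run against a literal. This is only a sketch: the DDL string and the hash ABCDEF0123456789 are made up for illustration.

```sql
-- Sketch: the pattern '[^']+' (with the quotes doubled inside the SQL literal)
-- matches the first single-quoted string, including the quotes themselves.
SELECT REGEXP_SUBSTR(
          'CREATE USER "MYUSER" IDENTIFIED BY VALUES ''ABCDEF0123456789'' DEFAULT TABLESPACE "USERS"',
          '''[^'']+''') AS passwd
  FROM dual;

-- PASSWD
-- ------------------
-- 'ABCDEF0123456789'
```

Because the match does not depend on the length of the hash, it keeps working if the hash gets longer, which was the weakness of the substr version.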

The code we need to use from now on is:

set long 150

set linesize 150

set longchunksize 150

select 'alter user '||username||' identified by values '||REGEXP_SUBSTR(DBMS_METADATA.get_ddl ('USER',USERNAME), '''[^'']+''')||';' from dba_users;

As always, comments are welcome.

ORA-06502: character string buffer too small while re/creating dbconsole 18 December 2009

Posted by David Alejo Marcos in Enterprise Manager, Grid Control, SQL - PL/SQL. Tags: Enterprise Manager, Grid Control, SQL - PL/SQL


Wow, we are getting close to the end of this year. This may be the last post for 2009; we will have to think about next year…

The problem:

OK, the last thing we faced here was the following error while re-creating the dbconsole using the standard emca -config dbcontrol db -repos recreate command:

CONFIG: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

oracle.sysman.assistants.util.sqlEngine.SQLFatalErrorException: ORA-06502: PL/SQL: numeric or value error: character string buffer too small

ORA-06512: at line 259

followed by some nasty Java alerts and:

SEVERE: Error creating the repository

….

oracle.sysman.emcp.exception.EMConfigException: Error creating the repository

The solution:

Well, the first thing to check is that you are on the right ORACLE_HOME. This is not going to solve the problem, but it will help us modify the script in the right ORACLE_HOME the first time.

The problem lies in a variable used to store the name of the host.

Normally this variable is defined with a length of 32 characters. This is enough for most systems, but we had a problem with one of ours, as the domain of the servers plus the name of the database had a length of 33 characters.

How can we check the length of our host_name? Quite simple:

SYS@XXXXXXX> col host_name for a40

SYS@databee> select host_name, length(host_name) length from v$instance;

HOST_NAME LENGTH

---------------------------------------- ----------

hhhhhhhhhhhhhhhhh.dddddd.dddddddd 33

Elapsed: 00:00:00.09

I had to change the names for security reasons.

OK, the problem is down to a single character: the length is 33 characters and the variable has been defined as:

l_host_name VARCHAR2(32);

so it can only store 32.
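The failure is easy to reproduce outside emca. The block below is only a sketch, not an extract from the Oracle script: it declares a variable with the same name and length and assigns a made-up 33-character string to it.

```sql
SET SERVEROUTPUT ON

-- Sketch: assigning 33 characters to a VARCHAR2(32) raises ORA-06502,
-- which PL/SQL exposes as the predefined VALUE_ERROR exception.
DECLARE
   l_host_name VARCHAR2(32);
BEGIN
   l_host_name := RPAD('h', 33, 'h');  -- hypothetical 33-character host name
   DBMS_OUTPUT.PUT_LINE('Stored ' || LENGTH(l_host_name) || ' characters');
EXCEPTION
   WHEN VALUE_ERROR THEN
      -- Expected path: print the error text instead of failing the block.
      DBMS_OUTPUT.PUT_LINE(SQLERRM);
END;
/
```

Changing the declaration to VARCHAR2(35) makes the same block take the normal path, which is exactly the change applied to the script in the steps that follow.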

The fix is quite simple.

Steps:

1.- Check you are on the right ORACLE_HOME

echo $ORACLE_HOME

2.- cd $ORACLE_HOME/sysman/admin/emdrep/sql/core/latest/self_monitor

3.- cp self_monitor_post_creation.sql self_monitor_post_creation.sql_bk

4.- Edit self_monitor_post_creation.sql and replace

l_host_name VARCHAR2(32);

with a length that can store your host_name:

l_host_name VARCHAR2(35);

NOTE.- There are two places where this variable is defined in the script; remember to modify the definition in both places.

Once the script has been changed, you can rerun the command to re/create the dbconsole:

emca -config dbcontrol db -repos recreate

Perfect.

As always, comments are welcome.

Merry Xmas.
