SQL Monitor details for later tuning. 29 March 2012

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL, Tuning.Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL, Tuning

comments closed

Tuning has always being good fun and something like a challenge for me.

From time to time we are being asked to find out why something did run slow while you are sleeping; answering this question is, in most cases, a challenge.

The problem:

My batch did run slow last night, can you let us know why? Or why did this query run slow? Are questions we, as DBAs, have to answer from time to time.

The solution:

Oracle has provided us with many tools to dig out information about past operations. We have EM, AWR, ASH, dba_hist_* tables, scripts all over internet, etc.

I must admit I do use sql_monitor quite often, but on a really busy environment, Oracle will only keep, with any luck, couple of hours of SQLs.

V$SQL_MONITOR and dbms_sqltune.report_sql_monitor have become tools I use most frequently.

The only problem I have is, as mentioned earlier, the number of SQLs stored on v$sql_monitor or, rather, the length of time being kept there.

Oracle will keep a certain number of SQLs (defined by a hidden parameter) and start recycling them so, by the time I am in the office, any SQL executed during the batch is long gone.

For this reason I came up with the following. I must admit it is not rocket science, but it does help me quite a lot.

It is like my small collection of “Bad Running Queries”. And I only need another DBA or an operator with certain privileges to execute a simple procedure to allow me to try to find out what did happen.

We need the following objects:

1.- A table to store the data:

CREATE TABLE perflog ( asof DATE, userid VARCHAR2(30), sql_id VARCHAR2 (30), monitor_list CLOB, monitor CLOB, monitor_detail CLOB ); /

2.- A procedure to insert the data I need for tuning:

CREATE OR REPLACE PROCEDURE perflog_pr (p_sql_id VARCHAR2 DEFAULT 'N/A')

AS

BEGIN

IF p_sql_id = 'N/A'

THEN

INSERT INTO perflog

SELECT SYSDATE,

sys_context('USERENV', 'SESSION_USER'),

p_sql_id,

sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',

report_level => 'ALL'),

NULL,

NULL

FROM DUAL;

ELSE

INSERT INTO perflog

SELECT SYSDATE,

sys_context('USERENV', 'SESSION_USER'),

p_sql_id,

sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',

report_level => 'ALL'),

sys.DBMS_SQLTUNE.report_sql_monitor (sql_id => p_sql_id,

TYPE => 'ACTIVE',

report_level => 'ALL'),

sys.DBMS_SQLTUNE.report_sql_detail (sql_id => p_sql_id,

TYPE => 'ACTIVE',

report_level => 'ALL')

FROM DUAL;

END IF;

COMMIT;

END;

/

3.- Grant necessary permissions:

grant select, insert on perflog to public / create public synonym perflog for perflog / grant execute on perflog_pr to public / create public synonym perflog_pr for perflog_pr / grant select any table, select any dictionary to <owner_code> /

The way it works is as follows:

– If business complains regarding a specific query, the DBA or operator can call the procedure with the sql_id:

exec perflog_pr ('1f52b50sq59q');

This will store the datetime, sql_id, DBA/operator name and most important the status of the instance at that time, general view of the sql and a detailed view of the sql running slow.

– If business complains regarding slowness but does not provide a specific query, we execute the following:

exec perflog_pr;

This will store the datetime, sql_id, DBA/operator name and a general view of the instance.

Please, give it a go and let me know any thoughts.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Extending Oracle Enterprise Manager (EM) monitoring. 29 January 2012

Posted by David Alejo Marcos in Grid Control, Oracle 11.2, SQL - PL/SQL.Tags: Grid Control, Oracle 11.2, SQL, SQL - PL/SQL

comments closed

I always found Oracle Enterprise Manager (EM) to be an interesting tool for different reasons. The only thing I missed was an easy way to create my own alerts.

It is very simple to create a KSH, Perl, etc script to do some customised monitoring and notify you by email, Nagios, NetCool, etc.

By integrating your scripts with OEM, you will have an easy way to enhance your monitoring and still have notification by email, SNMP traps, etc. as you would currently have if your company is using OEM for monitoring your systems.

The problem:

Develop an easy way to integrate your monitoring scripts with OEM.

The solution:

I decided to use an Oracle type and an Oracle function to accomplish this goal. Using the steps described below, we can monitor pretty much whatever aspect of the database providing you can put the logic into a function.

As example, I had added the steps to create two new User-Defined SQL Metrics, as Oracle calls them:

1.- Long Running Sessions (LRS).

2.- Tablespace Monitoring.

The reason to have my own TBS monitoring is to enhance the existing as it has “hard-coded” thresholds. I might have tablespaces in your database which are 6TBS in size and other with only 2Gb, so raising an alert at 95% for both of them is, in my opinion, not adequate.

You can find more about the query I developed here.

The steps to create a script to monitor long running sessions (LRS) are:

1.- create types

CREATE OR REPLACE TYPE lrs_obj as OBJECT ( user_name VARCHAR2(256), error_message varchar(2000)); / CREATE OR REPLACE TYPE lrs_array AS TABLE OF lrs_obj; /

2.- create function.

CREATE OR REPLACE FUNCTION lrs RETURN lrs_array IS long_running_data lrs_array := lrs_array(); ln_seconds_active number := 300; BEGIN SELECT lrs_obj(username||' Sec: '||sec_running, ', Inst_id: '||inst_id||', SID: '||sid||', Serial: '|| serial||', Logon: '||session_logon_time||', sql_id: '||sql_id) BULK COLLECT INTO long_running_data FROM (SELECT /*+ FIRST_ROWS USE_NL(S,SQ,P) */ s.inst_id inst_id, s.sid sid, s.serial# serial, s.last_call_et sec_running, NVL(s.username, '(oracle)') AS username, to_char(s.logon_time, 'DD-MM-YYYY HH24:MI:SS') session_logon_time, s.machine, NVL(s.osuser, 'n/a') AS osuser, NVL(s.program, 'n/a') AS program, s.event, s.seconds_in_wait, s.sql_id sql_id, sq.sql_text from gv$session s, gv$sqlarea sq where s.sql_id = sq.sql_id and s.inst_id = sq.inst_id and s.status = 'ACTIVE' and s.last_call_et > ln_seconds_active and s.paddr not in ( select paddr from gv$bgprocess where paddr != '00' ) and s.type != 'BACKGROUND' and s.username not in ( 'SYSTEM', 'SYS' ) AND s.event != 'SQL*Net break/reset to client' ) CUSTOMER_QUERY; RETURN long_running_data; END lrs; /

3.- Grant privileges to the users will be executing monitoring scripts:

grant execute on lrs_obj to public; grant execute on lrs_array to public; grant execute on lrs to public;

4.- create synonyms

Create public synonym lrs_obj for lrs_obj; Create public synonym lrs_array for lrs_array; Create public synonym lrs for lrs;

5.- Query to monitor

SELECT user_name, error_message FROM TABLE(CAST(lrs as lrs_array));

Once we are satisfied with the thresholds (300 seconds on the script), we are ready to add it to EM.

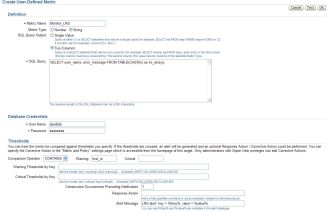

1.- Navigate to User-Defined SQL Metrics (you need to navigate to your database and you will find the link at bottom).

2.- Create new User-Defined SQL Metric and fill the gaps (I have attached some values for reference). The most important thing right now is to make sure metric_Type = String, Sql Query Output = two columns, comparison Operator = CONTAINS and warning has a value returned by the query (i did decide to go for Inst_id).

The only thing left now is to add this monitoring to your templates and to your rules so notifications are being sent.

Once all has been configure, you should start seeing alerts like this:

Target Name=lab01 Target type=Cluster Database Host=mylab Occurred At=Jan 22, 2012 14:35:47 PM GMT Message=LRS alert: key = DAVIDLAB Sec: 530, value = , Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r Metric=StrValue Metric value=, Inst_id: 1, SID: 153, Serial: 1597, Logon: 22-01-2012 12:21:46, sql_id: 3m72fjep12w8r Metric ID=lrs Key=DAVIDLAB Sec: 530 Severity=Warning Acknowledged=No Notification Rule Name=david alerts Notification Rule Owner=DAVIDLAB

For Tablespace monitoring the steps will be the same as described above:

1.- create types

CREATE OR REPLACE TYPE tbs_obj as OBJECT ( tablespace_name VARCHAR2(256), error_message varchar(2000)); / CREATE OR REPLACE TYPE tbs_array AS TABLE OF tbs_obj; /

2.- create function.

CREATE OR REPLACE FUNCTION calc_tbs_free_mb RETURN tbs_array IS

tablespace_data tbs_array := tbs_array();

BEGIN

SELECT tbs_obj(tablespace_name, alert||', Used_MB: '||space_used_mb||', PCT_Free: '||pct_free||', FreeMB: '|| free_space_mb) BULK COLLECT

INTO tablespace_data

FROM

(SELECT (CASE

WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents THEN 'CRITICAL'

WHEN free_space_mb <= DECODE (allocation_type, 'UNIFORM', min_extlen, maxextent) * free_extents + 20 THEN 'WARNING'

ELSE 'N/A'

END)

alert,

tablespace_name,

space_used_mb,

ROUND (free_space/power(1024,3), 2) free_gb,

free_space_mb,

pct_free,

ROUND (extend_bytes/power(1024,3), 2) extend_gb,

free_extents,

max_size_gb,

maxextent

FROM (SELECT c.tablespace_name,

NVL (ROUND ( (a.extend_bytes + b.free_space) / (bytes + a.extend_bytes) * 100,2), 0) pct_free,

NVL ( ROUND ( (a.extend_bytes + b.free_space) / 1024 / 1024, 2), 0) free_space_mb,

(CASE

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 30 THEN 60

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 100 THEN 120

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 300 THEN 200

WHEN NVL ( ROUND ( (maxbytes) / power(1024,3), 2), 0) <= 800 THEN 300

ELSE 340

END) free_extents,

a.extend_bytes, b.free_space,

ROUND (maxbytes /power(1024,3), 2) max_size_gb,

nvl (round(a.bytes - b.free_space ,2) /1024/1024, 0) space_used_mb,

c.allocation_type,

GREATEST (c.min_extlen / 1024 / 1024, 64) min_extlen,

64 maxextent

FROM ( SELECT tablespace_name,

SUM(DECODE (SIGN (maxbytes - BYTES), -1, 0, maxbytes - BYTES)) AS extend_bytes,

SUM (BYTES) AS BYTES,

SUM (maxbytes) maxbytes

FROM DBA_DATA_FILES

GROUP BY tablespace_name) A,

( SELECT tablespace_name,

SUM (BYTES) AS free_space,

MAX (BYTES) largest

FROM DBA_FREE_SPACE

GROUP BY tablespace_name) b,

dba_tablespaces c

WHERE c.contents not in ('UNDO','TEMPORARY') and

b.tablespace_name(+) = c.tablespace_name

AND a.tablespace_name = c.tablespace_name

)

) CUSTOMER_QUERY;

RETURN tablespace_data;

END calc_tbs_free_mb;

/

3.- Grant privileges to the users will be executing monitoring scripts:

grant execute on tbs_obj to public; grant execute on tbs_array to public; grant execute on calc_tbs_free_mb to public;

4.- create synonyms

Create public synonym tbs_obj for tbs_obj; Create public synonym tbs_array for tbs_array; Create public synonym calc_tbs_free_mb for calc_tbs_free_mb;

5.- Query to monitor

SELECT * FROM TABLE(CAST(calc_tbs_free_mb as tbs_array));

Please, remember to use comparison operator = CONTAINS, warning = WARNING and critical=CRITICAL

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

How to list files on a directory from Oracle Database. 13 September 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL.Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL

comments closed

Couple of days ago I had an interesting request, “How can I see the contents of nfs_dir”?

The problem:

We were using DBFS to store our exports. This was the perfect solution as the business could “see” the files on the destination folder, but it did not meet our requirements performance wise on our Exadata.

We have decided to mount NFS and performance did improve, but we had a different problem. NFS is mounted on the database server and business do not have access for security reasons and segregation of duties.

Since then, the export jobs run, but business could not “see” what files were created, so the question was asked.

The solution:

After some research I came across with the following package:

SYS.DBMS_BACKUP_RESTORE.searchFiles

I did have to play a little bit, and I finished with the following script:

1.- Create an Oracle type

create type file_array as table of varchar2(100) /

2.- Create the function as SYS:

CREATE OR REPLACE FUNCTION LIST_FILES (lp_string IN VARCHAR2 default null) RETURN file_array pipelined AS lv_pattern VARCHAR2(1024); lv_ns VARCHAR2(1024); BEGIN SELECT directory_path INTO lv_pattern FROM dba_directories WHERE directory_name = 'NFS_DIR'; SYS.DBMS_BACKUP_RESTORE.SEARCHFILES(lv_pattern, lv_ns); FOR file_list IN (SELECT FNAME_KRBMSFT AS file_name FROM X$KRBMSFT WHERE FNAME_KRBMSFT LIKE '%'|| NVL(lp_string, FNAME_KRBMSFT)||'%' ) LOOP PIPE ROW(file_list.file_name); END LOOP; END; /

3.- Grant necessary permissions:

grant execute on LIST_FILES to public; create public synonym list_files for sys.LIST_FILES;

4.- Test without WHERE clause:

TESTDB> select * from table(list_files); COLUMN_VALUE ---------------------------------------------------------------------------------------------------- /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB.log /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp /nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_09092011.log /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old /nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_12092011.log 8 rows selected. Elapsed: 00:00:00.10

5.- Test with WHERE clause:

TESTDB> select * from table(list_files) where column_value like '%dmp%'; COLUMN_VALUE ---------------------------------------------------------------------------------------------------- /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old Elapsed: 00:00:00.12

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

ORA-16072: a minimum of one standby database destination is required 27 August 2011

Posted by David Alejo Marcos in ASM, Exadata, Oracle 11.2, RAC, RMAN.Tags: ASM, backup, Dataguard, Exadata, Oracle 11.2, RAC, RMAN, Standby

comments closed

This is a quick post regarding the error on the subject. This is the second time it happens to me, so I thought I will write a bit about it.

The problem:

I am refreshing one of my UAT environments (happens to be a Full Rack Exadata) using Oracle RMAN duplicate command. Then the following happens (on both occasions).

1.- Duplicate command fails (lack of space for restoring archivelogs, or any other error). This is can be fixed quite easy.

2.- following error while trying to open the database after restore and recover has finished:

SQL> alter database open; alter database open * ERROR at line 1: ORA-03113: end-of-file on communication channel Process ID: 13710 Session ID: 1250 Serial number: 5 SQL> exit

On the alert.log file we can read the following:

Wed Aug 24 13:32:48 2011 alter database open Wed Aug 24 13:32:49 2011 LGWR: STARTING ARCH PROCESSES Wed Aug 24 13:32:49 2011 ARC0 started with pid=49, OS id=13950 ARC0: Archival started LGWR: STARTING ARCH PROCESSES COMPLETE ARC0: STARTING ARCH PROCESSES <strong>LGWR: Primary database is in MAXIMUM AVAILABILITY mode</strong> <strong>LGWR: Destination LOG_ARCHIVE_DEST_1 is not serviced by LGWR</strong> <strong>LGWR: Minimum of 1 LGWR standby database required</strong> Errors in file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_lgwr_13465.trc: <strong>ORA-16072: a minimum of one standby database destination is required</strong> Errors in file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_lgwr_13465.trc: ORA-16072: a minimum of one standby database destination is required LGWR (ospid: 13465): terminating the instance due to error 16072 Wed Aug 24 13:32:50 2011 ARC1 started with pid=48, OS id=13952 Wed Aug 24 13:32:50 2011 System state dump is made for local instance System State dumped to trace file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_diag_13137.trc Trace dumping is performing id=[cdmp_20110824133250] Instance terminated by LGWR, pid = 13465

The Solution:

Quite simple:

1.- Start up database in mount mode:

SQL> startup mount ORACLE instance started. Total System Global Area 1.7103E+10 bytes Fixed Size 2230472 bytes Variable Size 4731176760 bytes Database Buffers 1.2180E+10 bytes Redo Buffers 189497344 bytes Database mounted. SQL> select open_mode, DATABASE_ROLE, guard_status, SWITCHOVER_STATUS from v$database; OPEN_MODE DATABASE_ROLE GUARD_S SWITCHOVER_STATUS -------------------- ---------------- ------- -------------------- MOUNTED PRIMARY NONE NOT ALLOWED

2.- Execute the following command:

SQL> alter database set standby database to maximize performance; Database altered. SQL> select open_mode, DATABASE_ROLE, guard_status, SWITCHOVER_STATUS from v$database; OPEN_MODE DATABASE_ROLE GUARD_S SWITCHOVER_STATUS -------------------- ---------------- ------- -------------------- MOUNTED PRIMARY NONE NOT ALLOWED

3.- Stop database:

SQL shutdown immediate ORA-01109: database not open Database dismounted. ORACLE instance shut down.

4.- Start up database mount mode:

SQL> startup mount ORACLE instance started. Total System Global Area 1.7103E+10 bytes Fixed Size 2230472 bytes Variable Size 4731176760 bytes Database Buffers 1.2180E+10 bytes Redo Buffers 189497344 bytes Database mounted.

5.- Open database:

SQL> alter database open; Database altered. SQL> select open_mode from v$database; OPEN_MODE -------------------- READ WRITE SQL> select instance_name from v$instance; INSTANCE_NAME ---------------- xxxx041 SQL>

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

How to transfer files from ASM to another ASM or filesystem or DBFS… 23 July 2011

Posted by David Alejo Marcos in ASM, Exadata, Oracle 11.2.Tags: ASM, Exadata, Oracle 11.2

comments closed

I had a requirement of transferring files from our PROD ASM to our UAT ASM as DBFS is proving to be slow.

The problem:

We are currently refreshing UAT schemas using Oracle Datapump to DBFS and then transferring those files to UAT using SCP.

DBFS does not provided us with the performance we need as datapump files are quite big. Same export onto ASM or NFS proves to be much, much faster.

We are currently testing exports to ASM, but, how to move dmp files from PROD ASM to UAT ASM?

The solution:

The answer for us is using DBMS_FILE_TRANSFER. It is very simple to set up (steps below) and it has proved to be fast.

DBMS_FILE_TRANSFER will copy files from one ORACLE DIRECTORY to another ORACLE DIRECTORY. The directory can point to a folder on ASM, DBFS, Filesystem, etc, so the transfer is “heterogeneous”.

I decided to go for GET_FILE, so most of the work will be done on UAT, the other option is PUT_FILE.

The syntax is as follows:

DBMS_FILE_TRANSFER.GET_FILE ( source_directory_object IN VARCHAR2, source_file_name IN VARCHAR2, source_database IN VARCHAR2, destination_directory_object IN VARCHAR2, destination_file_name IN VARCHAR2);

Where:

source_directory_object: The directory object from which the file is copied at the source site. This directory object must exist at the source site.

source_file_name: The name of the file that is copied in the remote file system. This file must exist in the remote file system in the directory associated with the source directory object.

source_database: The name of a database link to the remote database where the file is located.

destination_directory_object: The directory object into which the file is placed at the destination site. This directory object must exist in the local file system.

destination_file_name: The name of the file copied to the local file system. A file with the same name must not exist in the destination directory in the local file system.

These are the steps for my test:

1.- Create directory on destination:

oracle@sssss (+ASM1)$ asmcmd ASMCMD> mkdir +DATA/DPUMP ASMCMD> mkdir +DATA/DPUMP/sid ASMCMD> exit

2.- Create directory on database

SQL> create directory SID_ASM_DPUMP_DIR as '+DATA/DPUMP/sid'; Directory created. SQL> grant read, write, execute on directory SID_ASM_DPUMP_DIR to public; Grant succeeded.

3.- Transfer file

SQL> set timing on time on

17:22:13 SQL> BEGIN

17:22:17 2 dbms_file_transfer.get_file ('SID_ASM_DPUMP_DIR',

17:22:17 3 'expdp.dmp',

17:22:17 4 'TESTSRC',

17:22:17 5 'SID_ASM_DPUMP_DIR',

17:22:17 6 'expdp.dmp');

17:22:17 7 END;

17:22:17 8

17:22:17 9 /

PL/SQL procedure successfully completed.

Elapsed: 00:01:07.57

17:23:31 SQL>

4.- Check files is on destination:

ASMCMD [+DATA/DPUMP/sid] > ls -ls Type Redund Striped Time Sys Name N expdp.dmp => +DATA/sid/DUMPSET/FILE_TRANSFER_0_0.797.756840143

OK, so ls -ls does not work show us the size of the file on the destination directory. The reason is because it is an alias, you need to perform ls -s on the directory where the file is stored.

ASMCMD [+DATA/DPUMP/sid] > ls -ls +DATA/sid/DUMPSET/ Type Redund Striped Time Sys Block_Size Blocks Bytes Space Name DUMPSET MIRROR COARSE JUL 18 17:00:00 Y 4096 1784576 7309623296 14633926656 FILE_TRANSFER_0_0.797.756840143

So, we have managed to transfer 6.8GB of data between two remote servers in 1 minute 8 seconds… not bad.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Archive area +RECO has -7440384 free KB remaining (Usable_file_MB is negative) 17 July 2011

Posted by David Alejo Marcos in ASM, Exadata, Oracle 11.2, RMAN.Tags: ASM, Exadata, Oracle 11.2, RMAN

comments closed

I must say, this has been a busy weekend.

We have been promoting a release to production and a guaranteed restore point was created on Friday as rollback strategy. On Sunday I was called as we started to receive alerts.

The problem:

Our monitoring system started to send emails and SNMP Traps with the following alerts:

OEM alert for Automatic Storage Management +ASM4_ssssss4: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM2_ssssss2: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM3_ssssss3: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM7_ssssss7: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM8_ssssss8: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM6_ssssss6: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM1_ssssss1: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Automatic Storage Management +ASM5_ssssss5: Disk group RECO has used 100% of safely usable free space. (Current Disk Group Used % of Safely Usable value: 100) OEM alert for Database Instance : Archive area +RECO has -7440384 free KB remaining. #Current Free Archive Area #KB# value: -7440384# OEM alert for Database Instance : Archive area +RECO has -21725184 free KB remaining. (Current Free Archive Area (KB) value: -21725184)

Not very nice in any situation, but not when you are in the middle of a critical, high visible release.

I did have a look and this is what I found.

The solution:

The first thing I did was to check the Flash Recovery Area, as it is configured to write to our +RECO diskgroup:

NAME USED_MB LIMIT_MB PCT_USED -------------------- ---------- ---------- ---------- +RECO 1630569 2048000 79.62 Elapsed: 00:00:00.12 FILE_TYPE PERCENT_SPACE_USED PERCENT_SPACE_RECLAIMABLE NUMBER_OF_FILES -------------------- ------------------ ------------------------- --------------- CONTROL FILE 0 0 1 REDO LOG 0 0 0 ARCHIVED LOG 0 0 0 BACKUP PIECE 75.66 75.66 86 IMAGE COPY 0 0 0 FLASHBACK LOG 3.96 0 707 FOREIGN ARCHIVED LOG 0 0 0 7 rows selected. Elapsed: 00:00:01.62

Numbers did look ok, some backup files could be reclaimed (Oracle should do it automatically). Lets have a look the ASM:

oracle@ssss (+ASM1)$ asmcmd lsdg State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name MOUNTED NORMAL N 512 4096 4194304 55050240 26568696 5004567 10782064 0 N DATA/ MOUNTED NORMAL N 512 4096 4194304 35900928 3192844 3263720 -35438 0 N RECO/ MOUNTED NORMAL N 512 4096 4194304 4175360 4061640 379578 1841031 0 N SYSTEMDG/ Elapsed: 00:00:01.62

Bingo, this is where our problem is. USABLE_FILE_MB (+RECO diskgroup) indicates the amount of free space that can be utilized, including the mirroring space, and being able to restore redundancy after a disk failure. A negative number on this column, could be critical in case of disk failure for the system as we might not have enough space perform a restore of all files to the surviving of disk.

Our backups goes to ASM and we copy them to tape afterwards. Our retention policy on disk is between 2 or 3 days, depending of the systems.

When I did check the contents of the backupset on ASM I found some old backups:

Type Redund Striped Time Sys Name Y 2011_07_17/ Y 2011_07_16/ Y 2011_07_15/ Y 2011_07_14/ Y 2011_07_13/ Y 2011_07_12/ Elapsed: 00:00:01.62

To delete those old backups I executed the following script from RMAN:

RMAN> delete backup tag EOD_DLY_110712 device type disk; allocated channel: ORA_DISK_1 channel ORA_DISK_1: SID=1430 instance=<instance> device type=DISK allocated channel: ORA_DISK_2 channel ORA_DISK_2: SID=2138 instance=<instance> device type=DISK allocated channel: ORA_DISK_3 channel ORA_DISK_3: SID=8 instance=<instance> device type=DISK allocated channel: ORA_DISK_4 channel ORA_DISK_4: SID=150 instance=<instance> device type=DISK List of Backup Pieces BP Key BS Key Pc# Cp# Status Device Type Piece Name ------- ------- --- --- ----------- ----------- ---------- 8292 4020 1 1 AVAILABLE DISK +RECO//backupset/2011_07_12/sssss_eod_dly_110712_0.nnnn.nnnnn 8293 4021 1 1 AVAILABLE DISK +RECO//backupset/2011_07_12/sssss_eod_dly_110712_0.nnnn.nnnnn Do you really want to delete the above objects (enter YES or NO)? yes deleted backup piece backup piece handle=+RECO//backupset/2011_07_12/sssss_eod_dly_110712_0.nnnn.nnnnnn RECID=8292 STAMP=nnnn deleted backup piece backup piece handle=+RECO//backupset/2011_07_12/sssss_eod_dly_110712_0.nnnn.nnnnnn RECID=8293 STAMP=nnnn Deleted 2 objects Elapsed: 00:00:01.62

After deleting a two more old backups, the number looked much better:

State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name MOUNTED NORMAL N 512 4096 4194304 55050240 26568696 5004567 10782064 0 N DATA/ MOUNTED NORMAL N 512 4096 4194304 35900928 3548260 3263720 142270 0 N RECO/ MOUNTED NORMAL N 512 4096 4194304 4175360 4061640 379578 1841031 0 N SYSTEMDG/

Note.- There is another temporary fix. I could changed db_recovery_file_dest to point to +DATA instead of +RECO, but as we have a guaranteed restore point, I thought releasing space from old backups was easier.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Check status voting disk. 6 July 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC.Tags: Exadata, Oracle 11.2, RAC

comments closed

This is a quick blog as to how to check the status of Voting Disks.

The problem:

You receive a call/email from you 1st line support with something similar to:

---- [cssd(9956)]CRS-1604:CSSD voting file is offline: o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin; details at (:CSSNM00058:) in /apps/oracle/grid_11.2/log/sssss/cssd/ocssd.log. ----

The solution:

Easy to check using crsctl:

oracle$ crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE a0a559213xxxxxxffa67c2df0fdc12 (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] 2. ONLINE 121231203xxxxxxfc4523c5c34d900 (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] 3. ONLINE a6b3c0281xxxxxxf3f6f9f1fd230ea (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] Located 3 voting disk(s).

As always, comments are welcome.

Testing your Oracle Standby configuration using Oracle Broker. 4 April 2011

Posted by David Alejo Marcos in Dataguard Broker, Oracle 11.2, Standby.Tags: Dataguar broker, Oracle 11.2, Standby

comments closed

Several weeks ago I wrote a post regarding testing your standby database ( https://davidalejomarcos.wordpress.com/2011/03/01/how-to-test-your-dataguard-configuration-standby-database/ ). The database I tested did not have Oracle Broker configured.

Today I will show you how I did perform a switchover with Oracle Broker configured.

The problem:

Perform a switchover using Oracle Broker.

The solution:

It proved to be quite simple and trouble-free.

Below are the steps I followed:

Note.- Primary is my production primary database during all the exercise, independently of the role the database is running. PRMRY relates to the commands to be run on the current production database.

Note.- Standby is my production standby database during all the exercise, independently of the role the database is running. STNDBY relates to the commands to be run on the current standby database.

1.- Check primary and standby database are in sync:

PRMRY> select sequence# seq,thread# thread from v$log where status = 'CURRENT' order by thread#; SEQ THREAD ---------- ---------- 33388 1 PRMRY> alter system switch logfile; System altered. PRMRY> / System altered. PRMRY> / System altered. PRMRY> select sequence# seq,thread# thread from v$log where status = 'CURRENT' order by thread#; SEQ THREAD ---------- ---------- 33391 1 PRMRY> exit STNDBY> select distinct max( sequence#) over (partition by thread# order by thread#) seq, thread# thread from gv$log_history; SEQ THREAD ---------- ---------- 33390 1 STNDBY> exit

2.- Connect to Oracle Broker from the server where PRMRY is running and:

[oracle@sssss ~]$ dgmgrl DGMGRL for Linux: Version 11.2.0.1.0 - 64bit Production Copyright (c) 2000, 2009, Oracle. All rights reserved. Welcome to DGMGRL, type "help" for information. DGMGRL> connect / Connected.

2.1.- Check the configuration for errors:

DGMGRL> show configuration Configuration - ssss_DG Protection Mode: MaxPerformance Databases: PRIMARY - Primary database STANDBY - Physical standby database Fast-Start Failover: DISABLED Configuration Status: SUCCESS

2.2.- Check primary is in “TRANSPORT-ON” state

DGMGRL> show database primary Database - primary Enterprise Manager Name: sss_primary Role: PRIMARY Intended State: TRANSPORT-ON Instance(s): sss Database Status: SUCCESS

2.3.- standby database is in “APPLY-ON” state:

DGMGRL> show database standby Database - standby Enterprise Manager Name: sss_standby Role: PHYSICAL STANDBY Intended State: APPLY-ON Transport Lag: 0 seconds Apply Lag: 0 seconds Real Time Query: OFF Instance(s): sss Database Status: SUCCESS

3.- Perform the switchover using Oracle Broker:

DGMGRL> switchover to standby Performing switchover NOW, please wait... New primary database "standby" is opening... Operation requires shutdown of instance "sss" on database "primary" Shutting down instance "sss"... ORA-01031: insufficient privileges Warning: You are no longer connected to ORACLE. Please complete the following steps to finish switchover: shut down instance "sss" of database "primary" start up and mount instance "sss" of database "primary" DGMGRL>

4.- Restart the new standby database and register it with the listener:

[oracle@ssss ~]$ sqlplus '/as sysdba' SQL*Plus: Release 11.2.0.1.0 Production on Sat Apr 2 09:02:20 2011 Copyright (c) 1982, 2009, Oracle. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production With the Partitioning option SQL> select open_mode from v$database; OPEN_MODE -------------------- MOUNTED SQL> shutdown immediate ORA-01109: database not open Database dismounted. ORACLE instance shut down. SQL> startup mount Total System Global Area 1.2827E+10 bytes Fixed Size 2225336 bytes Variable Size 1207962440 bytes Database Buffers 1.1610E+10 bytes Redo Buffers 7348224 bytes Database mounted. SQL> alter system register; System altered. SQL> exit

5.- Check log files are being sent and applied on the new standby database.

As always, comments are welcome.

————————-

Appendix:

Below is the section of the primary database while switching over to standby:

Sat Apr 02 08:58:37 2011 ALTER DATABASE COMMIT TO SWITCHOVER TO PHYSICAL STANDBY WITH SESSION SHUTDOWN ALTER DATABASE COMMIT TO SWITCHOVER TO PHYSICAL STANDBY [Process Id: 8432] (sss) Sat Apr 02 08:58:37 2011 Thread 1 cannot allocate new log, sequence 33392 Private strand flush not complete Current log# 3 seq# 33391 mem# 0: /u03/oradata/sss/redo03.rdo Thread 1 advanced to log sequence 33392 (LGWR switch) Current log# 1 seq# 33392 mem# 0: /u03/oradata/sss/redo01.rdo Waiting for all non-current ORLs to be archived... Waiting for the ORL for thread 1 sequence 33391 to be archived... Sat Apr 02 08:58:44 2011 Archived Log entry 68782 added for thread 1 sequence 33391 ID 0x2a7ee921 dest 1: Sat Apr 02 08:58:51 2011 ORL for thread 1 sequence 33391 has been archived... All non-current ORLs have been archived. Waiting for all FAL entries to be archived... All FAL entries have been archived. Waiting for dest_id 2 to become synchronized... Sat Apr 02 08:59:01 2011 Active, synchronized Physical Standby switchover target has been identified Sat Apr 02 08:59:01 2011 Thread 1 cannot allocate new log, sequence 33393 Private strand flush not complete Current log# 1 seq# 33392 mem# 0: /u03/oradata/sss/redo01.rdo Thread 1 advanced to log sequence 33393 (LGWR switch) Current log# 2 seq# 33393 mem# 0: /u03/oradata/sss/redo02.rdo ARCH: Standby redo logfile selected for thread 1 sequence 33392 for destination LOG_ARCHIVE_DEST_2 Archived Log entry 68783 added for thread 1 sequence 33392 ID 0x2a7ee921 dest 1: Sat Apr 02 08:59:01 2011 Stopping background process CJQ0 Sat Apr 02 08:59:01 2011 SMON: disabling tx recovery Stopping background process QMNC CLOSE: killing server sessions. Sat Apr 02 08:59:14 2011 CLOSE: all sessions shutdown successfully. Sat Apr 02 08:59:14 2011 SMON: disabling cache recovery Sat Apr 02 08:59:15 2011 Shutting down archive processes Archiving is disabled Sat Apr 02 08:59:15 2011 ARCH shutting down Sat Apr 02 08:59:15 2011 ARCH shutting down ARC9: Archival stopped ARC7: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down ARC5: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down Sat Apr 02 08:59:15 2011 ARCH shutting down ARC3: Archival stopped ARC0: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down Sat Apr 02 08:59:15 2011 ARCH shutting down ARC4: Archival stopped ARC6: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down ARC2: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down ARC8: Archival stopped Sat Apr 02 08:59:15 2011 ARCH shutting down ARC1: Archival stopped Thread 1 closed at log sequence 33393 Successful close of redo thread 1 ARCH: Noswitch archival of thread 1, sequence 33393 ARCH: End-Of-Redo Branch archival of thread 1 sequence 33393 Archived Log entry 68785 added for thread 1 sequence 33393 ID 0x2a7ee921 dest 1: ARCH: Archiving is disabled due to current logfile archival Primary will check for some target standby to have received all redo Final check for a synchronized target standby. Check will be made once. LOG_ARCHIVE_DEST_2 is a potential Physical Standby switchover target Active, synchronized target has been identified Target has also applied all redo Backup controlfile written to trace file /opt/oracle/diag/rdbms/primary/sss/trace/sss_rsm0_8432.trc Clearing standby activation ID 712960289 (0x2a7ee921) The primary database controlfile was created using the 'MAXLOGFILES 12' clause. There is space for up to 9 standby redo logfiles Use the following SQL commands on the standby database to create standby redo logfiles that match the primary database: ALTER DATABASE ADD STANDBY LOGFILE 'srl1.f' SIZE 262144000; ALTER DATABASE ADD STANDBY LOGFILE 'srl2.f' SIZE 262144000; ALTER DATABASE ADD STANDBY LOGFILE 'srl3.f' SIZE 262144000; ALTER DATABASE ADD STANDBY LOGFILE 'srl4.f' SIZE 262144000; Archivelog for thread 1 sequence 33393 required for standby recovery Switchover: Primary controlfile converted to standby controlfile succesfully. Sat Apr 02 08:59:19 2011 MRP0 started with pid=18, OS id=10456 MRP0: Background Managed Standby Recovery process started (sss) Serial Media Recovery started Managed Standby Recovery not using Real Time Apply Online logfile pre-clearing operation disabled by switchover Media Recovery Log /u01/oradata/sss/arch/log1_33393_708427666.arc Identified End-Of-Redo for thread 1 sequence 33393 Resetting standby activation ID 0 (0x0) Media Recovery End-Of-Redo indicator encountered Media Recovery Applied until change 241462639865 MRP0: Media Recovery Complete: End-Of-REDO (sss) MRP0: Background Media Recovery process shutdown (sss) Sat Apr 02 08:59:25 2011 Switchover: Complete - Database shutdown required (sss) Completed: ALTER DATABASE COMMIT TO SWITCHOVER TO PHYSICAL STANDBY WITH SESSION SHUTDOWN Using STANDBY_ARCHIVE_DEST parameter default value as /u01/oradata/sss/arch ALTER SYSTEM SET log_archive_dest_2='' SCOPE=BOTH; Using STANDBY_ARCHIVE_DEST parameter default value as /u01/oradata/sss/arch ALTER SYSTEM SET log_archive_dest_state_2='ENABLE' SCOPE=BOTH; Sat Apr 02 08:59:29 2011 RFS[1]: Assigned to RFS process 11886 RFS[1]: Identified database type as 'physical standby': Client is ARCH pid 5025 RFS[1]: Opened log for thread 1 sequence 33394 dbid 666702615 branch 708427666 Sat Apr 02 08:59:29 2011 RFS[2]: Assigned to RFS process 11894 RFS[2]: Identified database type as 'physical standby': Client is ARCH pid 5021 Sat Apr 02 08:59:29 2011 RFS[3]: Assigned to RFS process 11898 RFS[3]: Identified database type as 'physical standby': Client is ARCH pid 5029 RFS[3]: Opened log for thread 1 sequence 33395 dbid 666702615 branch 708427666 Archived Log entry 68787 added for thread 1 sequence 33394 rlc 708427666 ID 0x2cbe3767 dest 2: Archived Log entry 68788 added for thread 1 sequence 33395 rlc 708427666 ID 0x2cbe3767 dest 2: Sat Apr 02 08:59:33 2011 RFS[4]: Assigned to RFS process 12348 RFS[4]: Identified database type as 'physical standby': Client is ARCH pid 5037 RFS[4]: Opened log for thread 1 sequence 33396 dbid 666702615 branch 708427666 Archived Log entry 68789 added for thread 1 sequence 33396 rlc 708427666 ID 0x2cbe3767 dest 2: Sat Apr 02 08:59:33 2011 RFS[5]: Assigned to RFS process 12342 RFS[5]: Identified database type as 'physical standby': Client is LGWR ASYNC pid 24051 Primary database is in MAXIMUM PERFORMANCE mode RFS[5]: Opened log for thread 1 sequence 33397 dbid 666702615 branch 708427666 Archived Log entry 68790 added for thread 1 sequence 33397 rlc 708427666 ID 0x2cbe3767 dest 2: RFS[5]: Opened log for thread 1 sequence 33398 dbid 666702615 branch 708427666 Sat Apr 02 09:00:17 2011 RFS[6]: Assigned to RFS process 18674 RFS[6]: Identified database type as 'physical standby': Client is ARCH pid 5021 Sat Apr 02 09:00:23 2011 Archived Log entry 68791 added for thread 1 sequence 33398 rlc 708427666 ID 0x2cbe3767 dest 2: RFS[5]: Opened log for thread 1 sequence 33399 dbid 666702615 branch 708427666

and here is the section of the alert.log file of the production standby database during the switchover:

Sat Apr 02 08:58:41 2011 Media Recovery Waiting for thread 1 sequence 33392 Sat Apr 02 08:58:41 2011 Archived Log entry 34084 added for thread 1 sequence 33391 ID 0x2a7ee921 dest 1: Sat Apr 02 08:58:51 2011 RFS[6]: Assigned to RFS process 23317 RFS[6]: Identified database type as 'physical standby': Client is ARCH pid 8452 Sat Apr 02 08:59:01 2011 RFS[7]: Assigned to RFS process 23326 RFS[7]: Identified database type as 'physical standby': Client is Foreground pid 8432 RFS[7]: Selected log 6 for thread 1 sequence 33392 dbid 666702615 branch 708427666 Sat Apr 02 08:59:01 2011 Archived Log entry 34085 added for thread 1 sequence 33392 ID 0x2a7ee921 dest 1: Sat Apr 02 08:59:02 2011 Media Recovery Log /u01/oradata/sss/arch/log1_33392_708427666.arc Media Recovery Waiting for thread 1 sequence 33393 Sat Apr 02 08:59:11 2011 RFS[8]: Assigned to RFS process 23340 RFS[8]: Identified database type as 'physical standby': Client is ARCH pid 8452 Sat Apr 02 08:59:18 2011 RFS[9]: Assigned to RFS process 24039 RFS[9]: Identified database type as 'physical standby': Client is Foreground pid 8432 RFS[9]: Opened log for thread 1 sequence 33393 dbid 666702615 branch 708427666 Archived Log entry 34086 added for thread 1 sequence 33393 rlc 708427666 ID 0x2a7ee921 dest 2: Sat Apr 02 08:59:18 2011 Media Recovery Log /u01/oradata/sss/arch/log1_33393_708427666.arc Identified End-Of-Redo for thread 1 sequence 33393 Resetting standby activation ID 712960289 (0x2a7ee921) Media Recovery End-Of-Redo indicator encountered Media Recovery Continuing Resetting standby activation ID 712960289 (0x2a7ee921) Media Recovery Waiting for thread 1 sequence 33394 Sat Apr 02 08:59:19 2011 RFS[10]: Assigned to RFS process 24045 RFS[10]: Identified database type as 'physical standby': Client is Foreground pid 8432 Sat Apr 02 08:59:25 2011 ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL MRP0: Background Media Recovery cancelled with status 16037 Errors in file /opt/oracle/diag/rdbms/standby/sss/trace/sss_pr00_6126.trc: ORA-16037: user requested cancel of managed recovery operation Managed Standby Recovery not using Real Time Apply Recovery interrupted! Waiting for MRP0 pid 6122 to terminate Errors in file /opt/oracle/diag/rdbms/standby/sss/trace/sss_pr00_6126.trc: ORA-16037: user requested cancel of managed recovery operation Sat Apr 02 08:59:26 2011 MRP0: Background Media Recovery process shutdown (sss) Managed Standby Recovery Canceled (sss) Completed: ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL ALTER DATABASE COMMIT TO SWITCHOVER TO PRIMARY WAIT WITH SESSION SHUTDOWN ALTER DATABASE SWITCHOVER TO PRIMARY (sss) Maximum wait for role transition is 15 minutes. Backup controlfile written to trace file /opt/oracle/diag/rdbms/standby/sss/trace/sss_rsm0_5074.trc SwitchOver after complete recovery through change 241462639865 Online log /u03/oradata/sss/redo01.rdo: Thread 1 Group 1 was previously cleared Online log /u03/oradata/sss/redo02.rdo: Thread 1 Group 2 was previously cleared Online log /u03/oradata/sss/redo03.rdo: Thread 1 Group 3 was previously cleared Standby became primary SCN: 241462639863 Switchover: Complete - Database mounted as primary Completed: ALTER DATABASE COMMIT TO SWITCHOVER TO PRIMARY WAIT WITH SESSION SHUTDOWN Sat Apr 02 08:59:26 2011 ARC7: Becoming the 'no SRL' ARCH Sat Apr 02 08:59:29 2011 ARC8: Becoming the 'no SRL' ARCH ALTER SYSTEM SET log_archive_dest_2='service="horvitz"','LGWR ASYNC NOAFFIRM delay=0 optional compression=disable max_failure=0 max_connections=1 reopen=300 db_unique_name="horvitz" net_timeout=30','valid_for=(all_logfiles,primary_role)' SCOPE=BOTH; ALTER DATABASE OPEN Data Guard Broker initializing... Switching redo format version from 11.1.0.0.0 to 11.2.0.0.0 at change 241462639868 Thread 1 advanced to log sequence 33395 (COMPATIBLE advance) Sat Apr 02 08:59:29 2011 Assigning activation ID 750663527 (0x2cbe3767) Thread 1 advanced to log sequence 33396 (thread open) Sat Apr 02 08:59:29 2011 ARC9: Becoming the 'no SRL' ARCH Thread 1 opened at log sequence 33396 Current log# 3 seq# 33396 mem# 0: /u03/oradata/sss/redo03.rdo Successful open of redo thread 1 Sat Apr 02 08:59:29 2011 ARC0: Becoming the 'no SRL' ARCH Archived Log entry 34087 added for thread 1 sequence 33394 ID 0x2cbe3767 dest 1: Sat Apr 02 08:59:29 2011 NSA2 started with pid=32, OS id=24051 Sat Apr 02 08:59:29 2011 ARC1: Becoming the 'no SRL' ARCH Archived Log entry 34088 added for thread 1 sequence 33395 ID 0x2cbe3767 dest 1: Sat Apr 02 08:59:29 2011 SMON: enabling cache recovery Thread 1 advanced to log sequence 33397 (LGWR switch) Current log# 1 seq# 33397 mem# 0: /u03/oradata/sss/redo01.rdo ****************************************************************** LGWR: Setting 'active' archival for destination LOG_ARCHIVE_DEST_2 ****************************************************************** Sat Apr 02 08:59:32 2011 Archived Log entry 34091 added for thread 1 sequence 33396 ID 0x2cbe3767 dest 1: Successfully onlined Undo Tablespace 1. Dictionary check beginning Dictionary check complete Verifying file header compatibility for 11g tablespace encryption.. Verifying 11g file header compatibility for tablespace encryption completed SMON: enabling tx recovery Database Characterset is AL32UTF8 No Resource Manager plan active replication_dependency_tracking turned off (no async multimaster replication found) Sat Apr 02 08:59:36 2011 Starting background process QMNC Sat Apr 02 08:59:36 2011 QMNC started with pid=33, OS id=24060 LOGSTDBY: Validating controlfile with logical metadata LOGSTDBY: Validation complete Completed: ALTER DATABASE OPEN ALTER SYSTEM SET log_archive_trace=0 SCOPE=BOTH SID='sss'; ALTER SYSTEM SET log_archive_format='log%t_%s_%r.arc' SCOPE=SPFILE SID='sss'; ALTER SYSTEM SET standby_file_management='AUTO' SCOPE=BOTH SID='*'; ALTER SYSTEM SET archive_lag_target=1800 SCOPE=BOTH SID='*'; ALTER SYSTEM SET log_archive_max_processes=10 SCOPE=BOTH SID='*'; ALTER SYSTEM SET log_archive_min_succeed_dest=1 SCOPE=BOTH SID='*'; ALTER SYSTEM ARCHIVE LOG Thread 1 advanced to log sequence 33398 (LGWR switch) Current log# 2 seq# 33398 mem# 0: /u03/oradata/sss/redo02.rdo Sat Apr 02 08:59:37 2011 ARC7: STARTING ARCH PROCESSES Archived Log entry 34094 added for thread 1 sequence 33397 ID 0x2cbe3767 dest 1: Sat Apr 02 08:59:37 2011 ARCa started with pid=34, OS id=24064 ARCa: Archival started ARC7: STARTING ARCH PROCESSES COMPLETE Sat Apr 02 08:59:43 2011 Starting background process CJQ0 Sat Apr 02 08:59:43 2011 CJQ0 started with pid=39, OS id=24099 Setting Resource Manager plan SCHEDULER[0xFD16]:DEFAULT_MAINTENANCE_PLAN via scheduler window Setting Resource Manager plan DEFAULT_MAINTENANCE_PLAN via parameter Sat Apr 02 08:59:47 2011 Starting background process VKRM Sat Apr 02 08:59:47 2011 VKRM started with pid=38, OS id=24118 Sat Apr 02 09:00:17 2011 Shutting down archive processes Sat Apr 02 09:00:17 2011 ARCH shutting down ARCa: Archival stopped Sat Apr 02 09:00:20 2011 ALTER SYSTEM ARCHIVE LOG Sat Apr 02 09:00:20 2011 Thread 1 cannot allocate new log, sequence 33399 Checkpoint not complete Current log# 2 seq# 33398 mem# 0: /u03/oradata/sss/redo02.rdo Thread 1 advanced to log sequence 33399 (LGWR switch) Current log# 3 seq# 33399 mem# 0: /u03/oradata/sss/redo03.rdo Archived Log entry 34096 added for thread 1 sequence 33398 ID 0x2cbe3767 dest 1:

How to test your Dataguard Configuration (Standby Database) 1 March 2011

Posted by David Alejo Marcos in Oracle 11.2, RAC, Standby.Tags: Oracle 11.2, RAC, Standby

comments closed

In a couple of weeks we will have to test our DR (based on standby database) configuration for our 3-node cluster.

This cluster has 3 databases running and, as part of the DR test, we will do a controlled fail over to DR, run some batches over there and fail back to production.

The problem:

Activate physical standby for testing. As part of the test, we will run some batches to ensure DR if fit to purpose, flashback any changes, and fail back to production.

The solution:

Below are the steps we will follow.

— Create restore point on standby

STANDBY> SELECT flashback_on, log_mode FROM v$database;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

STANDBY> CREATE RESTORE POINT before_dr_test GUARANTEE FLASHBACK DATABASE;

STANDBY> SELECT scn, database_incarnation#, guarantee_flashback_database, storage_size, time, name FROM gv$restore_point;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE USING CURRENT LOGFILE DISCONNECT;

— Perform couple of logfile switches.

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

— Next step only if you are using Dataguard Broker.

PRIMARY> ALTER SYSTEM SET DG_BROKER_START=FALSE;

PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=DEFER;

— We will not ship any logfiles to old primary due to change of role.

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

STANDBY> ALTER SYSTEM SET LOG_ARCHVE_DEST_STATE_2=DISABLE;

— Convert standby database to a primary database.

STANDBY> ALTER DATABASE ACTIVATE STANDBY DATABASE;

STANDBY> STARTUP MOUNT FORCE;

— OPTIONAL. change dataguard protection mode to maximum performance if needed

STANDBY> ALTER DATABASE SET STANDBY DATABASE TO MAXIMIZE PERFORMANCE;

— Open database

STANDBY> ALTER DATABASE OPEN;

— Standby database is open for testing. No changes should be sent to primary database as destination is disabled.

— After testing, we perform Flashback to undo any changes and activate database as physical standby.

STANDBY> SHUTDOWN IMMEDIATE;

STANDBY> STARTUP MOUNT;

STANDBY> FLASHBACK DATABASE TO RESTORE POINT before_dr_test;

STANDBY> ALTER DATABASE CONVERT TO PHYSICAL STANDBY;

STANDBY> SHUTDOWN IMMEDIATE;

STANDBY> STARTUP MOUNT;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE USING CURRENT LOGFILE DISCONNECT;

— Enable logfile transfer on both, primary and standby.

PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

STANDBY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

— Perform couple of logfile switches to verify configuration is working.

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> SELECT DEST_ID, DEST_NAME, STATUS, PROTECTION_MODE, DESTINATION, ERROR, APPLIED_THREAD#, APPLIED_SEQ#, SYNCHRONIZED, GAP_STATUS FROM V$ARCHIVE_DEST_STATUS;

— Drop restore point on DR (this will avoid to run out of space on your FRA).

STANDBY> DROP RESTORE POINT before_dr_test;

As always, comments are welcome.

Sysdate returns “wrong” time (time with timezone). 25 February 2011

Posted by David Alejo Marcos in Oracle 11.2, SQL - PL/SQL.Tags: Oracle 11.2, SQL, SQL - PL/SQL

comments closed

We are all used to execute sysdate to have the date + time of the database, what we are not so used to is to retrieve different values (depending of your timezone) from Oracle.

Sysdate, per Oracle documentation will do the following:

“SYSDATE returns the current date and time set for the operating system on which the database server resides. The data type of the returned value is DATE, and the format returned depends on the value of the NLS_DATE_FORMAT initialization parameter. The function requires no arguments. In distributed SQL statements, this function returns the date and time set for the operating system of your local database.”

Most people only reads the first line, where it says, it will return the date and time set for the operating system on which the database server resides; but right at the end, there is something we are all very familiar with, distributed SQL statements.

It is very hard to find a database not being accessed from different timezones than the server hosting it. Either because hosting is cheaper or because we have business in different countries.

The Problem:

If I execute a simple query to return the date and time from our US server:

SQL> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss'; Session altered. SQL> select sysdate from dual; SYSDATE ------------------- 24/02/2011 14:12:18 SQL> exit Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production With the Partitioning and Automatic Storage Management options

I receive the date and time in UTC:

[oracle@sssssssss ~]$ date Thu Feb 24 14:14:18 GMT 2011

From sqlplus in the UK:

C:\Documents and Settings\david.marcos>sqlplus daviddba@dddd SQL*Plus: Release 10.2.0.4.0 - Production on Thu Feb 24 14:14:30 2011 Copyright (c) 1982, 2007, Oracle. All Rights Reserved. Enter password: Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.2.0 - 64bit Production With the Partitioning and Automatic Storage Management options DAVIDDBA@dddd> DAVIDDBA@dddd> alter session set nls_date_format='dd/mm/yyyy hh24:mi:ss'; Session altered. Elapsed: 00:00:00.14 DAVIDDBA@dddd> select sysdate from dual; SYSDATE ------------------- 24/02/2011 09:14:55 Elapsed: 00:00:00.20 DAVIDDBA@dddd>

It returns the date and time in NYC.

The Solution:

Quite simple, we have a file on the server called /etc/sysconfig/clock. This file configures the system clock to Universal or Local time.

[oracle@ssss ~]$ cat /etc/sysconfig/clock ZONE="America/New_York" UTC=true ARC=false

Zone should be :

[oracle@sssssss ~]$ cat /etc/sysconfig/clock ZONE="UTC" UTC=true ARC=false

So clients also uses UTC. After amending the value we were back to business.

There is another quick way to fix the problem, providing you do not have local_listener configured on your system as you can manipulate the timezone for your sessions using your listeners. Let me explain, if you connect from the server, you will probably not use the listener, so sysdate should be the same than your servers date and time.

Starting the listener with different timezone (for example, using TZ OS environment variable) you will be able to have two different sysdate results (one if you select from the server and a different one if you connect using the listener).

So, to fix your problem you will need to do the following:

1.- Configure TZ variable on your server (i.e. export TZ=”UTC”)

2.- Stop listener

3.- Start listener

Done.

If you have local_listener, you will need to stop/start your database with the right TZ environment variable to fix the issue.

As always, questions are welcome.

You must be logged in to post a comment.