SQL Monitor details for later tuning. 29 March 2012

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL, Tuning.Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL, Tuning

comments closed

Tuning has always being good fun and something like a challenge for me.

From time to time we are being asked to find out why something did run slow while you are sleeping; answering this question is, in most cases, a challenge.

The problem:

My batch did run slow last night, can you let us know why? Or why did this query run slow? Are questions we, as DBAs, have to answer from time to time.

The solution:

Oracle has provided us with many tools to dig out information about past operations. We have EM, AWR, ASH, dba_hist_* tables, scripts all over internet, etc.

I must admit I do use sql_monitor quite often, but on a really busy environment, Oracle will only keep, with any luck, couple of hours of SQLs.

V$SQL_MONITOR and dbms_sqltune.report_sql_monitor have become tools I use most frequently.

The only problem I have is, as mentioned earlier, the number of SQLs stored on v$sql_monitor or, rather, the length of time being kept there.

Oracle will keep a certain number of SQLs (defined by a hidden parameter) and start recycling them so, by the time I am in the office, any SQL executed during the batch is long gone.

For this reason I came up with the following. I must admit it is not rocket science, but it does help me quite a lot.

It is like my small collection of “Bad Running Queries”. And I only need another DBA or an operator with certain privileges to execute a simple procedure to allow me to try to find out what did happen.

We need the following objects:

1.- A table to store the data:

CREATE TABLE perflog ( asof DATE, userid VARCHAR2(30), sql_id VARCHAR2 (30), monitor_list CLOB, monitor CLOB, monitor_detail CLOB ); /

2.- A procedure to insert the data I need for tuning:

CREATE OR REPLACE PROCEDURE perflog_pr (p_sql_id VARCHAR2 DEFAULT 'N/A')

AS

BEGIN

IF p_sql_id = 'N/A'

THEN

INSERT INTO perflog

SELECT SYSDATE,

sys_context('USERENV', 'SESSION_USER'),

p_sql_id,

sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',

report_level => 'ALL'),

NULL,

NULL

FROM DUAL;

ELSE

INSERT INTO perflog

SELECT SYSDATE,

sys_context('USERENV', 'SESSION_USER'),

p_sql_id,

sys.DBMS_SQLTUNE.report_sql_monitor_list (TYPE => 'HTML',

report_level => 'ALL'),

sys.DBMS_SQLTUNE.report_sql_monitor (sql_id => p_sql_id,

TYPE => 'ACTIVE',

report_level => 'ALL'),

sys.DBMS_SQLTUNE.report_sql_detail (sql_id => p_sql_id,

TYPE => 'ACTIVE',

report_level => 'ALL')

FROM DUAL;

END IF;

COMMIT;

END;

/

3.- Grant necessary permissions:

grant select, insert on perflog to public / create public synonym perflog for perflog / grant execute on perflog_pr to public / create public synonym perflog_pr for perflog_pr / grant select any table, select any dictionary to <owner_code> /

The way it works is as follows:

– If business complains regarding a specific query, the DBA or operator can call the procedure with the sql_id:

exec perflog_pr ('1f52b50sq59q');

This will store the datetime, sql_id, DBA/operator name and most important the status of the instance at that time, general view of the sql and a detailed view of the sql running slow.

– If business complains regarding slowness but does not provide a specific query, we execute the following:

exec perflog_pr;

This will store the datetime, sql_id, DBA/operator name and a general view of the instance.

Please, give it a go and let me know any thoughts.

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

How to list files on a directory from Oracle Database. 13 September 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC, SQL - PL/SQL.Tags: Exadata, Oracle 11.2, RAC, SQL, SQL - PL/SQL

comments closed

Couple of days ago I had an interesting request, “How can I see the contents of nfs_dir”?

The problem:

We were using DBFS to store our exports. This was the perfect solution as the business could “see” the files on the destination folder, but it did not meet our requirements performance wise on our Exadata.

We have decided to mount NFS and performance did improve, but we had a different problem. NFS is mounted on the database server and business do not have access for security reasons and segregation of duties.

Since then, the export jobs run, but business could not “see” what files were created, so the question was asked.

The solution:

After some research I came across with the following package:

SYS.DBMS_BACKUP_RESTORE.searchFiles

I did have to play a little bit, and I finished with the following script:

1.- Create an Oracle type

create type file_array as table of varchar2(100) /

2.- Create the function as SYS:

CREATE OR REPLACE FUNCTION LIST_FILES (lp_string IN VARCHAR2 default null) RETURN file_array pipelined AS lv_pattern VARCHAR2(1024); lv_ns VARCHAR2(1024); BEGIN SELECT directory_path INTO lv_pattern FROM dba_directories WHERE directory_name = 'NFS_DIR'; SYS.DBMS_BACKUP_RESTORE.SEARCHFILES(lv_pattern, lv_ns); FOR file_list IN (SELECT FNAME_KRBMSFT AS file_name FROM X$KRBMSFT WHERE FNAME_KRBMSFT LIKE '%'|| NVL(lp_string, FNAME_KRBMSFT)||'%' ) LOOP PIPE ROW(file_list.file_name); END LOOP; END; /

3.- Grant necessary permissions:

grant execute on LIST_FILES to public; create public synonym list_files for sys.LIST_FILES;

4.- Test without WHERE clause:

TESTDB> select * from table(list_files); COLUMN_VALUE ---------------------------------------------------------------------------------------------------- /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB.log /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp /nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_09092011.log /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old /nfs/oracle/TESTDB/pump_dir/imdp_piece_TESTDB_12092011.log 8 rows selected. Elapsed: 00:00:00.10

5.- Test with WHERE clause:

TESTDB> select * from table(list_files) where column_value like '%dmp%'; COLUMN_VALUE ---------------------------------------------------------------------------------------------------- /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece2.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece3.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece4.dmp /nfs/oracle/TESTDB/pump_dir/expdp_TESTDB_piece1.dmp_old Elapsed: 00:00:00.12

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

ORA-16072: a minimum of one standby database destination is required 27 August 2011

Posted by David Alejo Marcos in ASM, Exadata, Oracle 11.2, RAC, RMAN.Tags: ASM, backup, Dataguard, Exadata, Oracle 11.2, RAC, RMAN, Standby

comments closed

This is a quick post regarding the error on the subject. This is the second time it happens to me, so I thought I will write a bit about it.

The problem:

I am refreshing one of my UAT environments (happens to be a Full Rack Exadata) using Oracle RMAN duplicate command. Then the following happens (on both occasions).

1.- Duplicate command fails (lack of space for restoring archivelogs, or any other error). This is can be fixed quite easy.

2.- following error while trying to open the database after restore and recover has finished:

SQL> alter database open; alter database open * ERROR at line 1: ORA-03113: end-of-file on communication channel Process ID: 13710 Session ID: 1250 Serial number: 5 SQL> exit

On the alert.log file we can read the following:

Wed Aug 24 13:32:48 2011 alter database open Wed Aug 24 13:32:49 2011 LGWR: STARTING ARCH PROCESSES Wed Aug 24 13:32:49 2011 ARC0 started with pid=49, OS id=13950 ARC0: Archival started LGWR: STARTING ARCH PROCESSES COMPLETE ARC0: STARTING ARCH PROCESSES <strong>LGWR: Primary database is in MAXIMUM AVAILABILITY mode</strong> <strong>LGWR: Destination LOG_ARCHIVE_DEST_1 is not serviced by LGWR</strong> <strong>LGWR: Minimum of 1 LGWR standby database required</strong> Errors in file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_lgwr_13465.trc: <strong>ORA-16072: a minimum of one standby database destination is required</strong> Errors in file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_lgwr_13465.trc: ORA-16072: a minimum of one standby database destination is required LGWR (ospid: 13465): terminating the instance due to error 16072 Wed Aug 24 13:32:50 2011 ARC1 started with pid=48, OS id=13952 Wed Aug 24 13:32:50 2011 System state dump is made for local instance System State dumped to trace file /apps/oracle/server/diag/rdbms/xxxx04/xxxx041/trace/xxxx041_diag_13137.trc Trace dumping is performing id=[cdmp_20110824133250] Instance terminated by LGWR, pid = 13465

The Solution:

Quite simple:

1.- Start up database in mount mode:

SQL> startup mount ORACLE instance started. Total System Global Area 1.7103E+10 bytes Fixed Size 2230472 bytes Variable Size 4731176760 bytes Database Buffers 1.2180E+10 bytes Redo Buffers 189497344 bytes Database mounted. SQL> select open_mode, DATABASE_ROLE, guard_status, SWITCHOVER_STATUS from v$database; OPEN_MODE DATABASE_ROLE GUARD_S SWITCHOVER_STATUS -------------------- ---------------- ------- -------------------- MOUNTED PRIMARY NONE NOT ALLOWED

2.- Execute the following command:

SQL> alter database set standby database to maximize performance; Database altered. SQL> select open_mode, DATABASE_ROLE, guard_status, SWITCHOVER_STATUS from v$database; OPEN_MODE DATABASE_ROLE GUARD_S SWITCHOVER_STATUS -------------------- ---------------- ------- -------------------- MOUNTED PRIMARY NONE NOT ALLOWED

3.- Stop database:

SQL shutdown immediate ORA-01109: database not open Database dismounted. ORACLE instance shut down.

4.- Start up database mount mode:

SQL> startup mount ORACLE instance started. Total System Global Area 1.7103E+10 bytes Fixed Size 2230472 bytes Variable Size 4731176760 bytes Database Buffers 1.2180E+10 bytes Redo Buffers 189497344 bytes Database mounted.

5.- Open database:

SQL> alter database open; Database altered. SQL> select open_mode from v$database; OPEN_MODE -------------------- READ WRITE SQL> select instance_name from v$instance; INSTANCE_NAME ---------------- xxxx041 SQL>

As always, comments are welcome.

David Alejo-Marcos.

David Marcos Consulting Ltd.

Check status voting disk. 6 July 2011

Posted by David Alejo Marcos in Exadata, Oracle 11.2, RAC.Tags: Exadata, Oracle 11.2, RAC

comments closed

This is a quick blog as to how to check the status of Voting Disks.

The problem:

You receive a call/email from you 1st line support with something similar to:

---- [cssd(9956)]CRS-1604:CSSD voting file is offline: o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin; details at (:CSSNM00058:) in /apps/oracle/grid_11.2/log/sssss/cssd/ocssd.log. ----

The solution:

Easy to check using crsctl:

oracle$ crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE a0a559213xxxxxxffa67c2df0fdc12 (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] 2. ONLINE 121231203xxxxxxfc4523c5c34d900 (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] 3. ONLINE a6b3c0281xxxxxxf3f6f9f1fd230ea (o/xxx.xxx.xx.xx/SYSTEMDG_CD_05_SSSSSin) [SYSTEMDG] Located 3 voting disk(s).

As always, comments are welcome.

How to test your Dataguard Configuration (Standby Database) 1 March 2011

Posted by David Alejo Marcos in Oracle 11.2, RAC, Standby.Tags: Oracle 11.2, RAC, Standby

comments closed

In a couple of weeks we will have to test our DR (based on standby database) configuration for our 3-node cluster.

This cluster has 3 databases running and, as part of the DR test, we will do a controlled fail over to DR, run some batches over there and fail back to production.

The problem:

Activate physical standby for testing. As part of the test, we will run some batches to ensure DR if fit to purpose, flashback any changes, and fail back to production.

The solution:

Below are the steps we will follow.

— Create restore point on standby

STANDBY> SELECT flashback_on, log_mode FROM v$database;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

STANDBY> CREATE RESTORE POINT before_dr_test GUARANTEE FLASHBACK DATABASE;

STANDBY> SELECT scn, database_incarnation#, guarantee_flashback_database, storage_size, time, name FROM gv$restore_point;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE USING CURRENT LOGFILE DISCONNECT;

— Perform couple of logfile switches.

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

— Next step only if you are using Dataguard Broker.

PRIMARY> ALTER SYSTEM SET DG_BROKER_START=FALSE;

PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=DEFER;

— We will not ship any logfiles to old primary due to change of role.

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL;

STANDBY> ALTER SYSTEM SET LOG_ARCHVE_DEST_STATE_2=DISABLE;

— Convert standby database to a primary database.

STANDBY> ALTER DATABASE ACTIVATE STANDBY DATABASE;

STANDBY> STARTUP MOUNT FORCE;

— OPTIONAL. change dataguard protection mode to maximum performance if needed

STANDBY> ALTER DATABASE SET STANDBY DATABASE TO MAXIMIZE PERFORMANCE;

— Open database

STANDBY> ALTER DATABASE OPEN;

— Standby database is open for testing. No changes should be sent to primary database as destination is disabled.

— After testing, we perform Flashback to undo any changes and activate database as physical standby.

STANDBY> SHUTDOWN IMMEDIATE;

STANDBY> STARTUP MOUNT;

STANDBY> FLASHBACK DATABASE TO RESTORE POINT before_dr_test;

STANDBY> ALTER DATABASE CONVERT TO PHYSICAL STANDBY;

STANDBY> SHUTDOWN IMMEDIATE;

STANDBY> STARTUP MOUNT;

STANDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE USING CURRENT LOGFILE DISCONNECT;

— Enable logfile transfer on both, primary and standby.

PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

STANDBY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

— Perform couple of logfile switches to verify configuration is working.

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> ALTER SYSTEM SWITCH LOGFILE;

PRIMARY> SELECT DEST_ID, DEST_NAME, STATUS, PROTECTION_MODE, DESTINATION, ERROR, APPLIED_THREAD#, APPLIED_SEQ#, SYNCHRONIZED, GAP_STATUS FROM V$ARCHIVE_DEST_STATUS;

— Drop restore point on DR (this will avoid to run out of space on your FRA).

STANDBY> DROP RESTORE POINT before_dr_test;

As always, comments are welcome.

appagent.bin + unable to fork new process 19 October 2010

Posted by David Alejo Marcos in Oracle 11.2, RAC.Tags: Oracle 11.2, RAC

comments closed

Yesterday (18/10/2010) we had a problem on our QA cluster.

After refreshing the database from production, several of our DBAs noticed they could not connect to the first node from time to time.

At this point, I did have a look.

The problem:

We had intermittent disconnections from node a (we have a 3-node cluster). I was told the error being raised was “unable to fork new process”.

I did have a look on Metalink, but I could not find any entry related to appagent.bin.

The solution:

We did have the same problem some time ago and we fixing by stopping the server. This type of fix is not our prefer option as we have 3 databases running on the same clusterware.

To identify the process with more threads we used the following command (Thanks to Coskan (Josh) Gundogar):

[oracle@ssssa trace]$ ps -eLf | grep -i appagent | wc -l

30793

we compared this number with node 2:

[oracle@ssssb trace]$ ps -eLf | grep appagent | wc -l

24

and it proved something was not right with the process.

Something interesting about this process on the first node was the date:

[oracle@ssssa trace]$ ps -eLf | grep appagent

oracle 14768 1 9159 0 30792 Oct15 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 14768 1 9223 0 30792 Oct15 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 14768 1 9317 0 30792 Oct15 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

so all of them (30793) were 3 days old.

I found some errors on the alert.log file regarding this process:

[oracle@ssssssa]$ cd /u01/crs/oracle/product/11.2.0/grid/log/sssssssa

[oracle@sssssssa]$ tail -100f alertssssssssa.log

[client(17908)]CRS-1013:The OCR location in an ASM disk group is inaccessible. Details in /u01/crs/oracle/product/11.2.0/grid/log/ssssssa/client/css.log.

2010-10-18 14:16:25.223

[/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin(9413)]CRS-5013:Agent "/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin" failed to start process "/u01/crs/oracle/product/11.2.0/grid/bin/lsnrctl" for action "check": details at "(:CLSN00008:)" in "/u01/crs/oracle/product/11.2.0/grid/log/ssssssa/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2010-10-18 14:16:37.512

[client(10936)]CRS-2313:Fatal error: failed to initialize GIPC communication layer. GIPC error: gipcretInitialization (2).

2010-10-18 14:16:37.512

[client(10936)]CRS-2316:Fatal error: cannot initialize GPnP, CLSGPNP_ERR (Generic GPnP error).

2010-10-18 14:16:37.539

[client(10936)]CRS-1013:The OCR location in an ASM disk group is inaccessible. Details in /u01/crs/oracle/product/11.2.0/grid/log/ssssssa/client/css.log.

2010-10-18 14:16:38.920

[/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin(9413)]CRS-5013:Agent "/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin" failed to start process "/u01/crs/oracle/product/11.2.0/grid/opmn/bin/onsctli" for action "check": details at "(:CLSN00008:)" in "/u01/crs/oracle/product/11.2.0/grid/log/ssssssa/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2010-10-18 14:16:53.100

[/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin(9413)]CRS-5013:Agent "/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin" failed to start process "/u01/crs/oracle/product/11.2.0/grid/bin/lsnrctl" for action "check": details at "(:CLSN00008:)" in "/u01/crs/oracle/product/11.2.0/grid/log/ssssssa/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2010-10-18 14:17:25.227

[/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin(9413)]CRS-5013:Agent "/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin" failed to start process "/u01/crs/oracle/product/11.2.0/grid/bin/lsnrctl" for action "check": details at "(:CLSN00008:)" in "/u01/crs/oracle/product/11.2.0/grid/log/sssssa/agent/crsd/oraagent_oracle/oraagent_oracle.log"

2010-10-18 14:17:38.921

[/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin(9413)]CRS-5013:Agent "/u01/crs/oracle/product/11.2.0/grid/bin/oraagent.bin" failed to start process "/u01/crs/oracle/product/11.2.0/grid/opmn/bin/onsctli" for action "check": details at "(:CLSN00008:)" in "/u01/crs/oracle/product/11.2.0/grid/log/sssssa/agent/crsd/oraagent_oracle/oraagent_oracle.log"

and I was told one of the filesystem filled on Friday October 15th.

As we had an agreement with all 3 businesses to have a downtime I decided to kill the process:

[oracle@ssssssa trace]$ kill -9 14768

and it fixed the problem:

[oracle@ssssa trace]$ ps -eLf | grep appagent | wc -l

14

[oracle@ssssssa trace]$ ps -eLf | grep appagent

oracle 18655 1 18655 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18657 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18658 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18659 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18660 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18661 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18662 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18663 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18664 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18665 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18670 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18673 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 18655 1 18694 0 13 15:17 ? 00:00:00 /u01/crs/oracle/product/11.2.0/grid/bin/appagent.bin

oracle 19230 22249 19230 0 1 15:18 pts/4 00:00:00 grep appagent

Conclusions:

I am not sure if the problem with filesystems getting full is related to this issue or “The OCR location in an ASM disk group is inaccessible” logged on the alert.log.

For the above, we have an SR with Oracle.

As always, comments are welcome.

Upgrade clusterware from 10gR2 to 11gR2 7 September 2010

Posted by David Alejo Marcos in ASM, Oracle 11.2, RAC.Tags: ASM, Oracle 11.2, RAC

comments closed

With Oracle 10g becoming unsupported soon, more and more business will need to upgrade to 11g. My suggestion is to go for 11gR2 as it is more stable than 11gR1.

Most of our databases are running RAC. With this type of configuration, we need to upgrade the Clusterware before upgrading the databases.

The problem:

Upgrade Oracle Clusterware from 10.2.0.4 to 11.2.0.1.

The solution:

I was in charge of doing the upgrade on our DR environment (3-node RAC database). The same steps applies to production clusterware.

As we are running Linux the software we need is:

1.- linux.x64_11gR2_grid.zip

2.- p6880880_112000_Linux-x86-64.zip

3.- p9343627_112010_Linux-x86-64.zip

Note.- Please, make sure the patches listed above are still valid. I am aware Oracle release a new PSU1.

The steps detailed below were followed:

1.- Check the environment:

cd /u01/software/gridInfstructureUpgrade/grid

./runcluvfy.sh stage -pre crsinst -n server001a,server001b,server001c -r 11gR2 -verbose 2>&1 | tee /tmp/cluvfy.pre.crsinst_100807

2.- Make sure you have working public IP address for SCAN.

IP Addresses for SCAN:

scancrssav.

xx.xx.x.218, xx.xx.x.219, xx.xx.x.220 mask 255.255.255.192.

Checks resolves:

[oracle@server001a ~]$ host scancrssav.

scancrssav.. has address xx.xx.x.220

scancrssav.. has address xx.xx.x.219

scancrssav.. has address xx.xx.x.218

3.- Stop services and databases:

srvctl stop service -d

srvctl stop database -d

4.- Stop ASM on all nodes:

srvctl stop asm -n server001a

srvctl stop asm -n server001b

srvctl stop asm -n server001c

5.- If you are running dbconsole or Oracle Grid Agent, it should be stopped:

emctl stop dbconsole

or

emctl stop agent

6.- Create new CRS home on all nodes:

mkdir -p /u01/crs/oracle/product/11.2.0/grid

7.- backup OCR and Voting Disks:

cd /u01/crs/oracle/product/crs/bin

To find out location of OCR:

[oracle@server001a bin]$ ./ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 2

Total space (kbytes) : 505808

Used space (kbytes) : 28548

Available space (kbytes) : 477260

ID : 1254611171

Device/File Name : /dev/mapper/voting-ocrp6

Device/File integrity check succeeded

Device/File Name : /dev/mapper/voting-ocrp5

Device/File integrity check succeeded

Cluster registry integrity check succeeded

To find out location voting disks:

[oracle@server001a bin]$ ./crsctl query css votedisk

0. 0 /dev/mapper/voting-ocrp2

1. 0 /dev/mapper/voting-ocrp3

2. 0 /dev/mapper/voting-ocrp1

located 3 votedisk(s).

Backup OCR:

Connect as Root.

cd /u01/crs/oracle/product/crs/bin

[root@server001a bin]# ./ocrconfig -export /u01/crs/backup/ocr_backup_100807

[root@server001a bin]# ls -lrt /u01/crs/backup/ocr_backup_100807

-rw-r--r-- 1 root root 752851 Aug 7 10:14 /u01/crs/backup/ocr_backup_100807

Backup Voting Disks:

[oracle@server001a bin]$ dd if=/dev/mapper/voting-ocrp1 of=/u01/crs/backup/voting-ocrp1_4k_100807

1012095+0 records in

1012095+0 records out

518192640 bytes (518 MB) copied, 9.39839 seconds, 55.1 MB/s

[oracle@server001a bin]$ dd if=/dev/mapper/voting-ocrp2 of=/u01/crs/backup/voting-ocrp2_4k_100807

1012095+0 records in

1012095+0 records out

518192640 bytes (518 MB) copied, 10.4548 seconds, 49.6 MB/s

[oracle@server001a bin]$ dd if=/dev/mapper/voting-ocrp3 of=/u01/crs/backup/voting-ocrp3_4k_100807

1012095+0 records in

1012095+0 records out

518192640 bytes (518 MB) copied, 9.85683 seconds, 52.6 MB/s

8.- Prepare environment

unset ORA_CRS_HOME

unset ORACLE_BASE

unset ORACLE_HOME

unset ORACLE_SID

set TEMP to a location with, at least 1GB free space:

export TEMP=/u01/software/temp

export TMP=$TEMP

Set the new ORACLE_HOME environment:

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=/u01/crs/oracle/product/11.2.0/grid

9.- Execute runInstaller.

10.- Upgrade Grid Infrastructure:

11.- yes to Existing ASM Instance detected. Are you sure you want to continue?:

12.- Choose language (English is the default language):

13.- Select nodes on the Cluster (all). Leave the tick on Upgrade Cluster Automatic Storage Manager (ASM):

14.- choose SCAN name (scancrssav.):

16.- Choose groups for ASM authentication (we decide to use the same to avoid problems. This decision will be reviewed as part of our Internal Audit and Segregation of duties):

17.- if you choose the same group oracle complains (you will need to acknowledge the alert):

18.- Location for the software. Note, the image shows ORACLE_HOME as /u01/app, this should be /u01/crs

as Clusterware cannot share the same path than ORACLE_BASE:

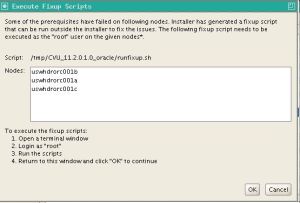

21.- Oracle gives you a script to fix:

This was the output in my case:

output:

[root@server001b CVU_11.2.0.1.0_oracle]# ./runfixup.sh

Response file being used is :./fixup.response

Enable file being used is :./fixup.enable

Log file location: ./orarun.log

Setting Kernel Parameters...

fs.file-max = 327679

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.wmem_max = 262144

net.core.wmem_max = 1048576

22.- Oracle will provide you with a summary of the installation.

23.- Running:

24.- Execute Rootupgrade.sh on all nodes:

This is the output I had:

[root@server001a ~]# /u01/crs/oracle/product/11.2.0/grid/rootupgrade.sh

Running Oracle 11g root.sh script…

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/crs/oracle/product/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file “dbhome” already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file “oraenv” already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file “coraenv” already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2010-08-07 11:08:17: Parsing the host name

2010-08-07 11:08:17: Checking for super user privileges

2010-08-07 11:08:17: User has super user privileges

Using configuration parameter file: /u01/crs/oracle/product/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

CSS appears healthy

EVM appears healthy

CRS appears healthy

Shutting down Oracle Cluster Ready Services (CRS):

Aug 07 11:08:46.458 | INF | daemon shutting down

Stopping resources. This could take several minutes.

Successfully stopped CRS resources.

Stopping CSSD.

Shutting down CSS daemon.

Shutdown request successfully issued.

Shutdown has begun. The daemons should exit soon.

LOCAL ADD MODE

Creating OCR keys for user ‘root’, privgrp ‘root’..

Operation successful.

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

CRS-2672: Attempting to start ‘ora.mdnsd’ on ‘server001a’

CRS-2676: Start of ‘ora.mdnsd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.gipcd’ on ‘server001a’

CRS-2676: Start of ‘ora.gipcd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.gpnpd’ on ‘server001a’

CRS-2676: Start of ‘ora.gpnpd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.cssdmonitor’ on ‘server001a’

CRS-2676: Start of ‘ora.cssdmonitor’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.cssd’ on ‘server001a’

CRS-2672: Attempting to start ‘ora.diskmon’ on ‘server001a’

CRS-2676: Start of ‘ora.diskmon’ on ‘server001a’ succeeded

CRS-2676: Start of ‘ora.cssd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.ctssd’ on ‘server001a’

CRS-2676: Start of ‘ora.ctssd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.crsd’ on ‘server001a’

CRS-2676: Start of ‘ora.crsd’ on ‘server001a’ succeeded

CRS-2672: Attempting to start ‘ora.evmd’ on ‘server001a’

CRS-2676: Start of ‘ora.evmd’ on ‘server001a’ succeeded

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user ‘root’, privgrp ‘root’..

Operation successful.

server001a 2010/08/07 11:12:45 /u01/crs/oracle/product/11.2.0/grid/cdata/server001a/backup_20100807_111245.olr

Preparing packages for installation…

cvuqdisk-1.0.7-1

Configure Oracle Grid Infrastructure for a Cluster … succeeded

Updating inventory properties for clusterware

Starting Oracle Universal Installer…

Checking swap space: must be greater than 500 MB. Actual 7997 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oracle/oraInventory

‘UpdateNodeList’ was successful.

Starting Oracle Universal Installer…

Checking swap space: must be greater than 500 MB. Actual 7997 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oracle/oraInventory

‘UpdateNodeList’ was successful.

[root@server001a ~]#

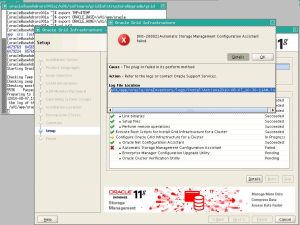

25.- After executing rootupgrade.sh, Oracle proceeds to upgrade the ASM (if we left the tick on the previous step):

26.- We had the following error while upgrading ASM:

This is what I could see from the logfile:

INFO: Found associated job

INFO: Starting 'Automatic Storage Management Configuration Assistant'

INFO: Starting 'Automatic Storage Management Configuration Assistant'

INFO: Executing ASMCA

INFO: Command /u01/crs/oracle/product/11.2.0/grid/bin/asmca -silent -upgradeASM -oui_internal

INFO: ... GenericInternalPlugIn.handleProcess() entered.

INFO: ... GenericInternalPlugIn: getting configAssistantParmas.

INFO: ... GenericInternalPlugIn: checking secretArguments.

INFO: ... GenericInternalPlugIn: starting read loop.

INFO: Read: ASMSNMP_PASSWORD_PROMPT

INFO: Processing: ASMSNMP_PASSWORD_PROMPT for argument tag -asmMonitorPassword

INFO: End of argument passing to stdin

INFO: Read:

INFO: Read: Failed to stop databases currently using ASM.

INFO: Read:

INFO: Completed Plugin named: Automatic Storage Management Configuration Assistant

This is because we have configured Clusterware to start databases automatically, and the rootupgrade.sh stops and starts CRS.

The solution in our case was to stop databases and ASM manually using srvctl.

After fixing the problem with ASM, we had an error with OCR:

INFO: ERROR:

INFO: PRVF-4178 : Check for OCR location "/dev/mapper/voting-ocrp6" failed on the following nodes:

INFO: server001a:Owner of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "root" ; Found = "oracle"];Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001c:Owner of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "root" ; Found = "oracle"];Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001b:Owner of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "root" ; Found = "oracle"];Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: Checking OCR location "/dev/mapper/voting-ocrp5"...

INFO: ERROR:

There is a solution provided in Metalink: Solution as per PRVF-4178 Reported from CLUVFY [ID 1058576.1]:

The group must be one of the groups assigned for ASM

chown root:oinstall /dev/mapper/voting-ocrp6

chown root:oinstall /dev/mapper/voting-ocrp5

chmod 0660 /dev/mapper/voting-ocrp6

chmod 0660 /dev/mapper/voting-ocrp5

but then we started to see a different error:

INFO: PRVF-4178 : Check for OCR location "/dev/mapper/voting-ocrp6" failed on the following nodes:

INFO: server001a:Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001c:Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001b:Permissions of file "/dev/mapper/voting-ocrp6" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: Checking OCR location "/dev/mapper/voting-ocrp5"...

INFO: ERROR:

INFO: PRVF-4178 : Check for OCR location "/dev/mapper/voting-ocrp5" failed on the following nodes:

INFO: server001a:Permissions of file "/dev/mapper/voting-ocrp5" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001c:Permissions of file "/dev/mapper/voting-ocrp5" did not match the expected value. [Expected = "0640" ; Found = "0660"]

INFO: server001b:Permissions of file "/dev/mapper/voting-ocrp5" did not match the expected value. [Expected = "0640" ; Found = "0660"]

so I decided to reach a compromise and leave it with 0660 as we will be moving it to ASM.

click “Skip” to move on.

Applying patches in Grid Infrastructure has changed completely and some security has been implemented (having to unlock and lock the environment).

I will post a new entry on how I did patch the environment.

As always, comments are welcome.

Securing passwords on scripts using Oracle Wallet 21 August 2010

Posted by David Alejo Marcos in Oracle Wallet, RMAN.Tags: Oracle Wallet, RAC

comments closed

One thing I like about being an Oracle DBA is the never-ending possibilities of improving your systems.

I have been working on our backup strategy for some time, the main goal being to have a standard script to be used in all our system.

While working on it I decided to try to secure our backup scripts (and scripts in general) as the password is hard-coded (never a good idea, specially because the user to perform the backup needs to have sysdba privileges).

I decided to use Oracle Wallet. Below are the steps and the test I performed.

The problem:

Having the password hardcoded on any script is not a good idea, specially for RMAN backup scripts as the account being used needs sysdba privileges.

The solution:

The steps I followed to secure our scripts is Oracle Wallet. The steps I performed are:

1.- Create the wallet:

mkstore -wrl -create

I will recommend to store the wallet on a different directory rather than storing it on Oracle home. For example $ORACLE_BASE/wallet:

mkstore -wrl $ORACLE_BASE/wallet -create

The command above will create an Oracle wallet with auto login feature enabled. from now on, only the operating system user who created the wallet will be able to manage it.

You will be prompted to provide a password. This password will have to be provided any time a maintenance operation is performed on the wallet.

2.- Add database connection (including connetion_string, username and password):

mkstore -wrl -createCredential <db_connection_string> <username> <password>

db_connection_string is an entry on your tnsnames.ora or any service name to connect to the desired database.

An example:

mkstore -wrl $ORACLE_BASE/wallet -createCredential prod sys mypassword

mkstore -wrl $ORACLE_BASE/wallet -createCredential catalog rman myrmanpassword

3.- add the following code to your sqlnet.ora:

SQLNET.WALLET_OVERRIDE = TRUE

WALLET_LOCATION =

(SOURCE=

(METHOD = FILE)

(METHOD_DATA = (DIRECTORY=/u01/app/oracle/wallet)

)

)

where:

WALLET_LOCATION: points to the directory where the wallet resides.

SQLNET.WALLET_OVERRIDE: will force all connections as /@db_connection_string to use the information being stored on the wallet to authenticate to databases.

4.- Test the connection:

[oracle@xxxxx]rman

RMAN> connect TARGET /@<db_connection_string>;

connected to target database: xxxx (DBID=yyyyyyyyyyy)

RMAN> connect catalog /@<rman_connection_string>;

connected to recovery catalog database

At this point, we can replace all connections:

<username>/<password>@<db_connection_string>;

by

/@<db_connection_string>;

What options does Wallet offer?

mkstore offers you the following:

1.- list the content being stored on the wallet:

mkstore -wrl -listCredential

2.- Add credentials:

mkstore -wrl -createCredential <db_connection_string> <username> <password>

3.- Modify credentials:

mkstore -wrl -modigyCredential <db_connection_string> <username> <password>

4.- Delete credentials:

mkstore -wrl -deleteCredential <db_connection_string>

Note: if you need to store more than one combination of /

for the same database you may have to create different entries on your tnsnames.ora.

Note.- If you are using the Wallet, you will probably face the following error sooner or later:

startup failed: ORA-00000: normal, successful completion

this can be due to startup force nomount or to duplicate database using RMAN.

The reason is an Oracle Bug (Bug 7258404 currently under development). The workaround is to remove SQLNET.WALLET_OVERRIDE=TRUE from your sqlnet.ora.

We are using one sqlnet.ora when using Wallet, and the default one without wallet configuration, on our script we just configure TNS_ADMIN variable.

As always, comments are welcome.

Creating a 3-node standby database. Part III 29 April 2010

Posted by David Alejo Marcos in ASM, RAC, Standby.Tags: ASM, RAC, Standby

comments closed

Time has arrived for us to test the results of our hard work by performing a switch between our single instance database to our three-node database.

I always recommend to start any test with a full backup if the space and time to do it does not jeopardize the deadlines.

Below is a list of some pre-checks worth doing:

1.- Check the full backup finished without errors.

2.- Make sure the init.ora file is the same on all 3 nodes (or pointing to the same spfile).

3.- The password file is correct (or recreate it) on all 3 nodes.

4.- check tnsnames.ora and listener.ora files are correct and the same on all 3 nodes.

5.- services are correct and running.

6.- peform a tnsping to and fro everywere to test connectivity and latency.

Ok, so we are ready for the switchover…., let’s do it:

1.- check the status of primary and standby databases:

SELECT switchover_status, database_role, open_mode FROM v$database;

2.- check the standby database is in synch ( I normally perfomr couple of switch logs and see that are being applied).

alter system switch logfile;

alter system switch logfile;

then you can check alert.log, v$log_history, v$archived_log, etc…

3.- on Primary:

Disable job queue before the switchover on Primary Database (only in memory):

alter system set job_queue_processes=0;

4.- On primary:

Check for any active sessions appart from your own:

SELECT SID, PROCESS, PROGRAM

FROM GV$SESSION

WHERE TYPE = 'USER'

AND SID <> (SELECT DISTINCT SID FROM GV$MYSTAT);

5.- On primary

Perform switchover:

alter database commit to switchover to physical standby with session shutdown;

shutdown immediate;

startup mount;

On the alert.log of the new primary database you should see something like:

Sat Apr 24 12:13:17 2010

Media Recovery Log +EVB_RECOVERY/bvmprodsh/archivelog/2010_04_24/thread_1_seq_28879.314.717163985

Identified End-Of-Redo for thread 1 sequence 28879

Sat Apr 24 12:13:17 2010

Media Recovery End-Of-Redo indicator encountered

Sat Apr 24 12:13:17 2010

Media Recovery Applied until change 202225032180

Sat Apr 24 12:13:17 2010

MRP0: Media Recovery Complete: End-Of-REDO (bvmprod3)

Resetting standby activation ID 3970545885 (0xeca9b8dd)

6.- On all standby databases, check the status:

SELECT switchover_status, database_role, open_mode FROM v$database;

7.- Complete the switchover on the NEW Primary Database:

alter database commit to switchover to primary with session shutdown;

srvctl stop database -d bvmprodsh

srvctl start database -d bvmprodsh -i bvmprod1

8.- Enable block Change Tracking if you are using incremental backups is recommended:

alter database enable block change tracking using '+EVB_RECOVERY';

9.- On all standby databases (including the old production database), enable recovery:

alter database recover managed standby database disconnect;

10.- Perform several log switch and verify are being shipped and applied on standby databases:

alter system switch logfile;

alter system switch logfile;

Now you are on a cluster database… but there is some work to be done:

1.- Create undo tablespaces for all instances (this only has to be done the first time you open the RAC database):

create undo tablespace undotbs2 datafile size 5g;

create undo tablespace undotbs3 datafile size 5g;

alter system set undo_tablespace=’undotbs1′ scope=spfile sid=’bvmprod1′;

alter system set undo_tablespace=’undotbs2′ scope=spfile sid=’bvmprod2′;

alter system set undo_tablespace=’undotbs3′ scope=spfile sid=’bvmprod3′;

2.- Add logfiles to threads 2 and 3:

ALTER DATABASE ADD LOGFILE THREAD 2

GROUP 21 '+EVB_REDO1' SIZE 250M,

GROUP 22 '+EVB_REDO1' SIZE 250M,

GROUP 23 '+EVB_REDO1' SIZE 250M,

GROUP 24 '+EVB_REDO1' SIZE 250M,

GROUP 25 '+EVB_REDO1' SIZE 250M;

ALTER DATABASE ADD LOGFILE THREAD 3

GROUP 31 ‘+EVB_REDO1’ SIZE 250M,

GROUP 32 ‘+EVB_REDO1’ SIZE 250M,

GROUP 33 ‘+EVB_REDO1’ SIZE 250M,

GROUP 34 ‘+EVB_REDO1’ SIZE 250M,

GROUP 35 ‘+EVB_REDO1′ SIZE 250M;

alter system set thread=1 scope=spfile sid=’bvmprod1′;

alter system set thread=2 scope=spfile sid=’bvmprod2′;

alter system set thread=3 scope=spfile sid=’bvmprod3’;

3.- Define local_listeners (those entries must exists on the listener.ora on all 3 nodes):

alter system set local_listener='LISTENER_BVMPROD1' scope=spfile sid='bvmprod1';

alter system set local_listener='LISTENER_BVMPROD2' scope=spfile sid='bvmprod2';

alter system set local_listener='LISTENER_BVMPROD3' scope=spfile sid='bvmprod3';

4.- Defile remote_listener (entry must exists on the listener.ora on all 3 nodes):

alter system set remote_listener='listeners_bvmprod' scope=spfile sid='*';

5.- Some RAC parameters…

alter system set instance_number=1 scope=spfile sid='bvmprod1';

alter system set instance_number=2 scope=spfile sid='bvmprod2';

alter system set instance_number=3 scope=spfile sid='bvmprod3';

alter system set cluster_database=true scope=spfile sid=’*’;

alter system set cluster_database_instances=3 scope=spfile sid=’bvmprod3′;

alter database enable instance ‘bvmprod2’;

alter database enable instance ‘bvmprod3’;

alter database enable public thread 2;

alter database enable public thread 3;

6.- Stop the instance and start it as RAC:

srvctl stop database -d bvmprodsh -i bvmprod1

srvctl start database -d bvmprodsh

srvctl start service -d bvmprodsh

srvctl status database -d bvmprodsh

srvctl status service -d bvmprodsh

Thas it pretty much all, the only bit left is to test connectivity and keep monitoring archivelogs (or redo logs) are being transferred to all standby databases and applied.

As always, comments are welcome.

Creating a 3-node standby database. Part II 15 March 2010

Posted by David Alejo Marcos in ASM, RAC, RMAN, Standby.Tags: ASM, RAC, RMAN

comments closed

On Part I we installed the software and restored the database on a single node or a 3-node RAC Clusterware.

I will explain how I did configure the database on that CRS and kept it in sync with the current production database. We have to bear in mind that the objective of this exercise is to move from the current production (single node) to an existing 3-node RAC using ASM.

Now we have a database that is an exact copy of our production database. We need to configure it as standby so we can keep it up to date until the migration.

1.- The first step is to create a copy of the current controlfile as standby:

$ rman target /

RMAN> backup current controlfile for standby format '/tmp/stdbyctl.bkp';

Once we have the controlfile for standby we copy it to the destination box using scp.

2.- We stop the database with shutdown immediate. It is important to check that LOG_FILE_NAME_CONVERT is defined using the correct directories to avoid problems in the following steps.

3.- Start the standby database as nomount.

4.- Restore the controlfile for standby:

$ rman nocatalog target /

RMAN> restore standby controlfile from '/tmp/stdbyctl.bkp';

5.- Mount standby database.

RMAN> alter database mount;

6.- Catalog datafiles of the standby database:

the following command will list all files and ask if you want them to be catalog. It is important to review the list and say YES if the list is correct. In our ASM configuration, the path for this database was ‘+EVB_DATA/BVMPRODSH/DATAFILE/’

RMAN> catalog start with '+EVB_DATA/BVMPRODSH/DATAFILE/';

7.- Once the command has finished, we have to “commit” those changes on the controlfile:

RMAN> switch database to copy;

RMAN> EXIT;

8.- If you were using Flashback, it is highly recommended to re-enable it again to avoid problems:

$ sqlplus / as sysdba

SQL> alter database flashback off;

Database altered.

SQL> alter database flashback on;

9.- Now we have to perform some housekeeping… Starting with the redo logs:

9.1.- Find out how many groups we have on the database and clear them:

SQL> select group# from v$log;

GROUP#

———-

1

2

3

SQL> alter database clear logfile group 1;

Database altered.

SQL> alter database clear logfile group 2;

Database altered.

SQL> alter database clear logfile group 3;

Database altered.

9.2.- Now we have to do the same for the standby redo logs:

SQL> select group# from v$standby_log;

GROUP#

———-

4

5

6

SQL> alter database clear logfile group 4;

Database altered.

SQL> alter database clear logfile group 5;

Database altered.

SQL> alter database clear logfile group 6;

Database altered.

OR

9.3.- Create standby redo logs if there are no present:

SQL> select group# from v$standby_log;

no row selected

SQL> alter database add standby logfile group 4 size 250m;

Database altered.

SQL> alter database add standby logfile group 5 size 250m;

Database altered.

SQL> alter database add standby logfile group 6 size 250m;

Database altered.

9.4.- Create spfile in ASM.

This step is not a must, but it is a good idea to use spfiles due to the flexibility that provides:

SQL> create spfile='+EVB_DATA' from pfile;

SQL> shutdown immediate

Edit init.ora file and paste the location of the spfile. This can be found using asmcmd under the diskgroup +EVB_DATA/BVMPRODSH/parameterfile

$ vi $ORACLE_HOME/dbms/init.ora

spfile='+EVB_DATA/BVMPRODSH/parameterfile/spfile.309.712071991'

SQL> startup mount;

10.- Configure the standby parameters, tnsnames.ora,…

10.1.- create entries on the TNSNames file to point to the current production on the standby server. Check you can connect as sys and you can tnsping to the standby server.

10.2.- create entries on the TNSNames file to point to the standby server on the current production server. Check you can connect as sys and you can tnsping to the production server.

10.3.- modify log_archive_config to have the primary database and ALL standby databases on all databases:

SQL> alter system set log_archive_config='DG_CONFIG=(<primary>,<standby1>,<standby2>...)' scope=both sid = '*';

10.4.- configure log_archive_dest_x to point to the new standby database on the primary database. Check log_archive_dest_state_x is enabled:

SQL> alter system set log_archive_dest_3='SERVICE= LGWR ASYNC VALID_FOR=(ALL_LOGFILES,PRIMARY_ROLE) DB_UNIQUE_NAME=' scope=both sid = '*';

10.5.- configure log_archive_dest_x to point to the production database on the new standby database. Check log_archive_dest_state_x is enabled:

SQL> alter system set log_archive_dest_3='SERVICE=<db_unique_name> LGWR ASYNC VALID_FOR=(ALL_LOGFILES,PRIMARY_ROLE) DB_UNIQUE_NAME=<db_unique_name>' scope=both sid = '*';

10.6.- Configure FAL_CLIENT and FAL_SERVER for gap resolution for primary and standby databases:

SQL> alter system set fal_client=<db_unique_name> scope=both sid='*';

SQL> alter system set fal_server=<db_unique_name for primary and standby databases> scope=both sid='*';

11.- We are good to start the recovery process on this standby database:

SQL> alter database recover managed standby database disconnect from session;

Database altered.

SQL> exit;

12.- Check the primary and standby databases are working properly by switching couple of logfiles and checking are being transferred and applied on all standby databases:

On primary database:

SQL> alter system switch logfile;

Now we have to register the database on the CRS…

1.- Add database

srvctl add database -d bvmprodsh -o -n evbprod

2.- Add instances

srvctl add instance -d bvmprodsh -i bvmprod1 -n

srvctl add instance -d bvmprodsh -i bvmprod2 -n

srvctl add instance -d bvmprodsh -i bvmprod3 -n

3.- Modify instance to add ASM

srvctl modify instance -d bvmprodsh -i bvmprod1 -s +ASM1

srvctl modify instance -d bvmprodsh -i bvmprod2 -s +ASM2

srvctl modify instance -d bvmprodsh -i bvmprod3 -s +ASM3

4.- Create and start services:

$ srvctl add service -d bvmprodsh -s evbprod -r <primary_node1>,<primary_node2>,... -a <available_node1><available_node2>,...

$ srvctl start service -d bvmprodsh -s evbprod

$ srvctl status service -d bvmprodsh

On part III, I will explain how we tested the configuration by doing a switchover from the current production single instance database to the 3-node RAC standby database.

As always, comments are welcome.

You must be logged in to post a comment.